Introduction

In the last two decades, institutions have invested heavily in developing and using technology for learning, motivated by the need to improve teaching and learning processes. A traditional teaching approach involves face-to-face sessions and static, paper-based materials, with the teaching staff at the front of the classroom using either a whiteboard or projector slides to display teaching material. By contrast, a smart classroom is a physical room equipped with sensors that capture audio-visual information such as human motion, utterances and gestures, together with computer equipment that the teacher uses to lecture both face-to-face and remote students. A smart classroom plays a key role in maximising students’ potential and their cognitive retention by supporting various learning and teaching methods, such as student-centered learning (Bouslama & Kalota 2013). Its visualisation capability can also entertain students, providing highly engaging visuals and animations that make the learning experience more enjoyable for both teachers and students, which can improve students’ overall academic performance (Jena 2013).

Smart classroom learning is designed to address the demands of a wide range of learning environments. It combines innovative pedagogies and content, interactive learning methodologies and sophisticated equipment, creating a complex system characterised by many connections and components. Designing an interactive classroom involves multiple complexities, and it may not be possible to list all of its parts and connections. Creating an innovative and active learning environment that achieves its goals therefore requires a concerted effort by all stakeholders, supported by effective design and planning (Kossiakoff et al. 2011).

There has been significant progress in the use of haptic technology in various sectors, especially for training purposes. Haptic controllers give users and developers the ability to interact with systems through different interaction processes, allowing better integration at the human-machine interface. Each device has its own benefits as well as its own limitations, including sensing distance, data accuracy, and the different data types and software libraries it produces. Integrating these sensors and actuators into a smart classroom environment therefore calls for a middleware service. This service would encapsulate all the underlying hardware to create a new workflow that boosts interactivity in an augmented environment.

Middleware is software that sits between heterogeneous systems, applications and devices, on top of the network operating system. It conceals the complexity of individual devices and simplifies the implementation process for software engineers (Puder, Römer & Pilhofer 2006). A middleware-based software architecture therefore forms the infrastructure that connects devices and applications, and providing transparency is one of the main objectives of a middleware platform (Puder, Römer & Pilhofer 2006).

This paper aims to improve teaching by moving towards an automated control system, presenting the analysis and development of a haptic middleware system. The system aims to replace traditional inputs, such as the keyboard and mouse, by integrating haptic motion control middleware that simplifies the overall interaction process within a smart classroom. The paper also describes the background of the envisaged smart classroom environment and identifies a set of requirements for the architectural design of the middleware system. A prototype is then built from the architectural design as a proof of concept for the middleware service, and the findings are reviewed and analysed in the smart classroom context. The introduction and background of the smart learning environment are discussed in the first section. The second section analyses the proposed smart learning environment design and its potential. The third section discusses system design and requirements. The fourth section discusses future development, and the fifth section provides an experimental analysis of the new learning system design.

Background

Smart Pervasive Learning Spaces

The smart classroom strategy has recently been applied in teaching and learning models, with a focus on providing a greater range of complementary and aligned services to support learners in a digital environment. Scholars broadly agree on the benefits of smart devices in education, and the recent rise in demand for integrating smart devices and enabling them to collaborate in the learning environment has driven the innovation of the 21st century classroom (Foundation 2010). The objective of the smart classroom, the virtual classroom and other ICT applications is to replace the traditional classroom with a pervasive, seamless and integrative learning experience. There is therefore a need for a system architecture that can enhance and tailor technologies to support better communication and collaboration.

In recent years, designers have begun to consider the increasing demand for physical simulation environments that sustain interactivity among learners. Some studies have demonstrated immersive simulation environments that use large interactive displays in classes to support collective inquiry by students based on their experiences and observations. Compared with traditional inquiry-based systems, these new spaces offer new types of learning experiences that engage students with science concepts that have traditionally been taught through more passive or less advanced forms of interaction (Lui et al. 2014). The surrounding physical environment turns learners into active participants, motivating them to think, reflect and drive their own understanding (Price & Rogers 2004).

The system architecture should focus on users’ needs in the education sector by harnessing the learning and business potential of ICT. The main goal is to develop a system that helps users understand concepts in depth, reason scientifically, enhance collaborative and critical thinking skills, and improve communication skills (Foundation 2010).

Recent Smart Classroom Approaches and their Properties

One smart classroom infrastructure developed recently is the Scalable Architecture for Interactive Learning (SAIL). SAIL uses learning analytics techniques to enable interactive physical learning environments that respond to users’ spatial positions (Slotta, Tissenbaum & Lui 2013). Interactive learning is further supported by real-time data mining and intelligent agents. In this approach, the system’s awareness of its occupants includes the identity of the people in the room, the activities each person carries out at specific times, the learning tasks to be completed and the learning models adopted.

A good understanding of system architecture is essential for developing an effective system. SAIL therefore defines several layers, each with different functionality for a different purpose in the system architecture. These include content and student management layers, a messaging server used to connect devices and software agents in real time, a visualisation layer for displaying materials and learners’ work, and an intelligent agent layer used in complex activities. In this architecture, the communication and visualisation layers are the most vital for delivering services to users. However, the interactions could be enhanced through smart middleware that provides the required services in the system.

Augmented Physical Environment (APE)

An Augmented Physical Environment (APE) can engage users in transformative ways. Students in an advanced smart classroom are more willing to explore opportunities in a novel, digitally enhanced physical space, and augmented spaces can support a variety of learning methods. One study shows that learners are able to collect, observe and explore data in an equipped augmented setting through visualisation displays and a variety of personal gadgets (Lui et al. 2014). As a result, the collective information and visualisations generated from the ambient environment can improve users’ experience and allow for more engaging teaching and learning methods.

Augmented physical environments have shown a positive impact on facilitating creativity and reflection. Tailoring advanced technologies and techniques to education has been shown to promote active learning by enhancing learners’ perception of the physical activities and information presented, and by bridging physical and digital perspectives. This gives students real-time, engaging experiences through the juxtaposition of their actions with augmented outcomes (Price & Rogers 2004). Users’ comments receive real-time responses through visualisations, which capture and aggregate users’ observations for knowledge building and discourse (Lui et al. 2014). The proposed system must complement such immersive digital environments so that users can respond effectively to the ambient classroom.

Attempts have been made to construct physical immersive classrooms that explore collective and collaborative inquiry methods. Collective inquiry, which focuses on interaction design, has been deployed across the entire classroom (Lui et al. 2014), whereas collaborative inquiry tends to focus on group interactions. Studies show that in collective inquiry learners are capable of thinking deeply and developing their own understanding, but with an emphasis on individual contributions to progress (Lui et al. 2014). Consequently, there is a need to leverage the more natural forms of interaction afforded by smart spaces to support collaboration and authentic learning experiences.

Applied Physical Digital Spaces for Learning (PDS)

Physical spaces have been introduced in an attempt to create meaningful smart, or intelligent, physical interactive spaces. The pioneering work of Wilensky and Resnick (1999) argued for the use of pervasive, non-desktop technologies to support and engage learners within a physical environment, and much related work builds on these technologies, for example by using embedded systems and ubiquitous computational media. The participatory simulation concept makes users or learners elements of the simulation itself (Colella 2000). For instance, learners were given wearable devices that communicated with one another, some of which acted as carriers of a virtual infection, and the aim was to greet as many classmates as possible without becoming infected (Colella 2000). Another physical interactive learning space (PILS), Hunting of the Snark (Rogers & Muller 2006), used mobile technologies in the classroom to augment the physical environment.

Embedded technologies have also been used to bring interactivity into the classroom. The Embedded Phenomena framework leverages the physicality of the classroom by mapping persistent scientific simulations onto the walls or floors of the room through location-specific computers (Moher 2006).

Another digitally augmented teaching and learning environment is SMALLab, a room equipped with digitally enhanced floors and walls that together form an interactive digital space. This smart space has been used to simulate geological processes and events: learners use various input devices to study geology by collaboratively constructing and monitoring the earth’s crust and observing erosion and uplift over time (Birchfield & Megowan-Romanowicz 2009).

The examples above show the potential of innovative technologies for education once they are implemented. However, it is also necessary to understand how the architectural design of computationally augmented physical environments can satisfy users’ technological demands in the classroom.

The Proposed Smart Learning Environment Design and Analysis Perspective

The smart classroom concerns optimising the interaction between teacher and learner, improving teaching presentation, making learning and teaching resources accessible, detecting and maintaining awareness of the context, and managing the classroom. The overall vision of an intelligent classroom combines sensors, passive computational devices and low-power networks into an infrastructure for a context-aware smart classroom that senses and responds to ongoing human activities.

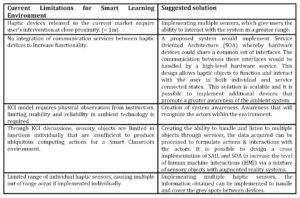

The Conceptual View of the Smart Learning Environment (see Figure 1) requires development in areas such as distributed computing, networking, sensor data acquisition, speech recognition, signal processing and human identification (Sailor 2009). Based on this Conceptual View, the new system can be accessed in real time, enabling interaction between the teacher and the learners through media tools. The interactive equipment offers simple, clear navigation through voice, touch and visual interaction suited to each device’s operating characteristics, which improves Human-Machine Interaction (HMI). The hardware in the next-generation classroom must meet the interaction requirements of multiple terminal points.

Figure 1: Conceptual View of Smart Learning Environment

In a multi-terminal environment, a single active class can be delivered in multiple, geographically separated classrooms. The smart classroom can also record and store basic data on a computer or in the cloud to assist participants’ decision making and possibly to support the self-organisation of the class (Harrison 2013; Sobh & Elleithy 2013). The proposed system architecture aims to design and build a robust, scalable middleware system that supports the level of interaction required in the smart classroom. It involves the following:

• Smart classroom applications are high-level implementations of the smart classroom logic that use the middleware framework within a cloud environment.

• The smart middleware framework interconnects a range of peripherals through an IoT framework and allows them to collaborate. The framework implements a common library that defines services between these devices. It also provides the underlying connectivity and data distribution bus that links the various hardware and software components, and includes critical services for arbitration and haptic ranging. This component will be equipped with different APIs for machine vision, actuators and sensor devices (Augusto, Nakashima & Aghajan 2010).
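The data distribution bus described in the second item can be pictured as a publish/subscribe channel: components publish messages under a topic, and any service subscribed to that topic receives them without needing to know which device produced them. The Python sketch below is a minimal, in-process illustration under that assumption; the class and method names (`DataBus`, `subscribe`, `publish`) and the topic names are hypothetical and are not taken from the prototype, which was written in C#.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class DataBus:
    """Minimal in-process publish/subscribe bus (hypothetical sketch)."""

    def __init__(self) -> None:
        # Topic name -> handlers registered for that topic.
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler to receive every message published on `topic`."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver `message` to all handlers of `topic`; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


# Usage: a gesture consumer subscribes without knowing which device will publish.
bus = DataBus()
received: List[Any] = []
bus.subscribe("gesture", received.append)
delivered = bus.publish("gesture", {"device": "Kinect", "gesture": "swipe_left"})
print(delivered, received)
```

Decoupling publishers from subscribers in this way is what lets devices be added or removed without changing the services that consume their data; a networked deployment would replace the in-process handler list with a message transport.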

The system also captures important information in the classroom to achieve a higher level of interaction, a dynamic learning environment and effective delivery of teaching content. This information is sensed through systematically arranged microphones and tracking cameras, and the sensing quality depends mainly on the performance of the audio and video devices and on their positioning (Harrison 2013; Sobh & Elleithy 2013). Attentive teaching can be further enhanced through context-aware sensing, which extracts behavioural information in the classroom.

Haptic devices and sensors installed at convenient places in the classroom can automatically detect parameters such as noise, temperature, odour and light, and adjust lamps and air conditioning to maintain temperature, lighting, sound and fresh air at levels suited to the mental and physical wellbeing of the people in the smart classroom (Augusto, Nakashima & Aghajan 2010).

System Analysis and Design

Requirements Analysis

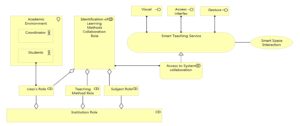

A Haptic Middleware system for a Smart Learning Environment can observe all objects and actors both passively and actively, and can react to events in the environment (see Figure 2).

Figure 2: Middleware Vision

For example, if the system senses that there are no students or teachers in a room, it turns off the room’s lights. The following table shows the requirements of the proposed middleware for addressing some current limitations of smart learning environments.

Table 1: General Requirements of Middleware

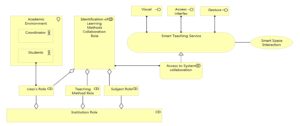
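The lighting example given before Table 1 is an instance of an event-condition-action rule: each new sensor reading is checked against a condition, and a matching rule triggers an action. The Python sketch below is hypothetical (the `RoomState` fields and the rule list are assumptions, and occupancy is assumed to come from a people count such as the number of tracked skeletons); it illustrates the pattern rather than the prototype's C# code.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class RoomState:
    occupants: int   # people currently detected in the room (assumed input)
    lights_on: bool


# An event-condition-action rule: when the condition holds, apply the action.
Rule = Tuple[Callable[[RoomState], bool], Callable[[RoomState], RoomState]]

RULES: List[Rule] = [
    # The lighting example: an empty room has its lights switched off.
    (lambda s: s.occupants == 0 and s.lights_on,
     lambda s: replace(s, lights_on=False)),
]


def on_sensor_update(state: RoomState, rules: List[Rule] = RULES) -> RoomState:
    """Re-evaluate every rule each time a new sensor reading arrives."""
    for condition, action in rules:
        if condition(state):
            state = action(state)
    return state


print(on_sensor_update(RoomState(occupants=0, lights_on=True)))
# RoomState(occupants=0, lights_on=False)
```

Further rules, such as adjusting the air conditioning from temperature readings, would be added as extra entries in the rule list without changing the update function.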

Business modelling

The business model (Figure 3) presents the business layer, giving an overall view of the system components that fulfil users’ business needs and the main objective of the system’s function. It provides insight into the HMI and MMI (Machine-Machine Interaction) perspectives, clarifying how objects interact in particular ambient spaces.

The model shows how roles collaborate in the learning and teaching process within the system. Business interaction is therefore assigned to this collaboration role as the gateway to the reference system. The model also shows the services used by the business interaction. The teaching progress service comprises three facilities: the Dialog process, Human-Machine-Interaction and Machine-Machine-Interaction. These result in association and access relationships that use visualisation, gesture and voice recognition interactions. End users can access and control the services through the business interface.

The developed haptic middleware prototype focuses on the smart teaching business services, improving Human-Machine-Interaction and Machine-Machine-Interaction through visual and gesture interactions as a proof of concept. The implemented system addresses some of the current limitations of the Smart Learning Environment context.

System Scope

Within the scope of this project, the middleware system is a dedicated layer that predefines and recognises a set of haptic movements and signals. It binds individual haptic sensors under a common set of functions and a shared sensory recognition library. This supports customisation of the Smart Environment, since multiple haptic objects can easily be integrated into the middleware to obtain a better dataset for processing.

All individual haptic services will be integrated into a central server or network service with which the main middleware service communicates. The project considers an Internet of Things (IoT) implementation very valuable; however, it is outside the scope of the project at this stage.

The haptic sensory implementation uses all skeletal data from the cameras for preliminary detection of users within the Smart Learning Environment. Gestures for interacting with the middleware system are defined for the user’s right hand.

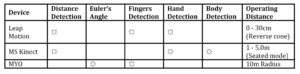

Close-range haptic sensors are used to sense signals from the hands and fingers, and long-range haptic sensors to sense body structural motions. Mid-range sensors will be selected and analysed to cover the grey area between the close- and long-range sensors.

Development Environment

Hardware equipment

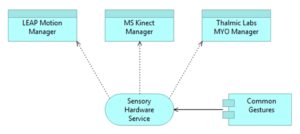

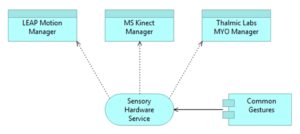

Multiple haptic controllers were researched and analysed for the haptic middleware environment, and the project team acquired three of them based on their suitability for this project (see Figure 4).

Figure 4: Leap Motion Haptic Controller, Thalmic Labs MYO Gesture Armband and Microsoft Kinect Camera (Xbox)

• Leap Motion

The Leap Motion device is a haptic sensor that contains an infrared camera with an inverted-pyramid field of view. Its range is approximately 30 to 40 cm, so it can only sense close-range hand gestures for the system.

• Thalmic Labs MYO

This haptic controller is a gesture control armband that senses bio-electrical activity in the muscles that control the fingers. The armband communicates with workstation clients over a Bluetooth connection, and the workstation then interprets the gesture and motion signals using the vendor-provided software library.

• Microsoft Kinect Camera

The Microsoft Kinect Camera (Xbox 360) is a motion sensing input device that contains an RGB camera and an infrared camera. It is designed to work with the Microsoft Kinect software development kit in C++, C# or Visual Basic .NET. Its sensing distance is approximately 0.8 to 3.5 metres, and it provides frames at approximately 9 Hz to 30 Hz depending on resolution.

The devices selected for the middleware system use infrared sensors as well as Bluetooth, so the system can interact with actors in normal to low light conditions, which makes them highly suitable for a Smart classroom environment.
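The grey area mentioned for mid-range sensors can be made concrete with the approximate ranges quoted for the Leap Motion (about 30 to 40 cm) and the Kinect (about 0.8 to 3.5 m). The sketch below computes which distances no sensor covers within a given working area. It assumes the ranges are simple intervals (the Leap Motion is treated as covering 0 to 0.4 m), ignores the MYO armband because it is worn rather than range-limited, and uses a 10 m limit only for illustration, matching the range the final system was initially required to serve.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]

# Approximate sensing ranges in metres, taken from the device descriptions.
# Starting the Leap Motion range at 0 m is a simplifying assumption.
SENSOR_RANGES: Dict[str, Interval] = {
    "LeapMotion": (0.0, 0.4),
    "Kinect": (0.8, 3.5),
}


def coverage_gaps(ranges: Dict[str, Interval], limit: float) -> List[Interval]:
    """Return the parts of [0, limit] that no sensor range covers."""
    gaps: List[Interval] = []
    cursor = 0.0  # furthest distance covered so far
    for low, high in sorted(ranges.values()):
        if cursor >= limit:
            break
        if low > cursor:
            gaps.append((cursor, min(low, limit)))
        cursor = max(cursor, high)
    if cursor < limit:
        gaps.append((cursor, limit))
    return gaps


print(coverage_gaps(SENSOR_RANGES, 10.0))
# [(0.4, 0.8), (3.5, 10.0)]
```

Under these assumptions, the 0.4 to 0.8 m band is the grey area a mid-range sensor would need to cover, and distances beyond 3.5 m would need another device or additional sensor placements.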

Architectural Analysis

Architectural patterns were reviewed and analysed to ensure that the middleware design fits the Smart Learning Environment context. A high-level design was developed using ArchiMate and then decomposed into a lower-level design using UML, which determines the functionality of each component block.

Service Oriented Architecture (SOA) consists of a collection of discrete software modules, known as services, that collectively provide the complete functionality of a large software application. The SOA approach allows developers to combine and reuse these services when building applications. Services may be implemented in traditional languages such as Java, C#, C/C++, Visual Basic, PHP or even COBOL; the prototype described in this paper was developed in C#. A service-oriented architecture is beneficial for the haptic control middleware because the middleware layer needs to communicate with other layers and to be extended as the system’s capabilities grow. On the other hand, service-oriented communication takes a toll on the system’s timing performance: large chunks of data from different devices may need to be transmitted to the middleware, which must compute and interpret them before passing output to another system. This may significantly degrade performance.

Figure 5: High level Design (Sensory Hardware Service)

Architectural Design

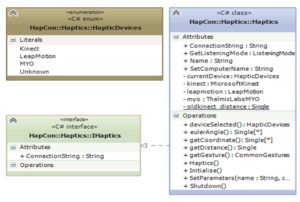

One of the areas to be considered in the architectural design is how haptic objects are managed within the middleware system. Haptic objects may change in type or number, so the middleware must provide a common set of functions through which the devices can easily communicate, improving Machine-Machine-Interaction within a Smart Learning Environment.

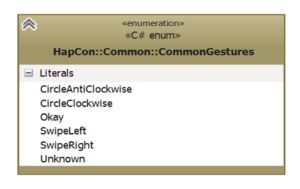

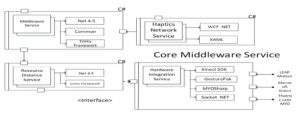

The Sensory Hardware service (see Figure 5) defines a common set of functions and data requirements for any device. It also contains a separate common gesture library that defines the actions and gestures available for user interaction. For example, when a user swipes left with the right hand in front of the Microsoft Kinect Camera, the camera notifies the sensory hardware service that a gesture has occurred, and the service checks it against the common gesture library before passing it to the middleware for interpretation. This service is managed by the Core Middleware service (see Figure 6).
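The common gesture library can be read as a translation and validation step: each device adapter maps its own vendor-specific labels onto shared gesture names, and the sensory hardware service passes a gesture on only if it appears in the shared library. The Python sketch below assumes that shape; `HapticDevice`, `SensoryHardwareService`, the gesture names and the stub camera's labels are all hypothetical, and real adapters would wrap the vendor SDKs instead of returning scripted labels.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional

# Hypothetical common gesture library: the shared names all devices map onto.
COMMON_GESTURES = frozenset({"swipe_left", "swipe_right", "push", "grab"})


class HapticDevice(ABC):
    """Assumed common interface that each device adapter implements."""

    def __init__(self, name: str, label_map: Dict[str, str]):
        self.name = name
        # Maps this device's own gesture labels onto common-library names.
        self.label_map = label_map

    @abstractmethod
    def poll(self) -> Optional[str]:
        """Return the raw, device-specific gesture label, or None."""

    def read_gesture(self) -> Optional[str]:
        raw = self.poll()
        return self.label_map.get(raw) if raw is not None else None


class SensoryHardwareService:
    def __init__(self, gestures=COMMON_GESTURES):
        self.gestures = frozenset(gestures)

    def verify(self, device: HapticDevice) -> Optional[str]:
        """Confirm a reported gesture against the common library before passing it on."""
        gesture = device.read_gesture()
        return gesture if gesture in self.gestures else None


class StubCamera(HapticDevice):
    """Stand-in for a camera adapter; returns a scripted raw label."""

    def __init__(self, raw_label: Optional[str]):
        super().__init__("StubCamera", {"SwipeLeftRightHand": "swipe_left"})
        self.raw_label = raw_label

    def poll(self) -> Optional[str]:
        return self.raw_label


service = SensoryHardwareService()
print(service.verify(StubCamera("SwipeLeftRightHand")))  # swipe_left
print(service.verify(StubCamera("UnknownWave")))         # None
```

Keeping the vendor-to-common mapping inside each adapter means the middleware only ever sees the shared gesture names, whichever device produced them.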

The Distance Resource service (see Figure 6) handles the resource selection logic based on the output of the sensory hardware service. It does so by assessing the user’s distance and velocity together with the device’s confidence ratio. Within the current scope, the service determines the user’s location and distance, improving the system’s awareness of where active user interactions are taking place.
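One way to read this selection logic is as a filter followed by a ranking: discard readings from devices whose working range does not contain the user's distance or whose tracked motion is too fast, then choose the remaining device with the highest confidence ratio. The paper does not specify the actual algorithm, so the Python sketch below is an assumption throughout: the `Reading` fields, the range table (taken from the approximate figures in the hardware section) and the `MAX_VELOCITY_MPS` cut-off are illustrative, not values from the prototype.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Reading:
    device: str
    distance_m: float    # user distance estimated by the device
    velocity_mps: float  # speed of the tracked motion
    confidence: float    # device-reported confidence ratio, 0..1


# Approximate working ranges from the hardware section (treated as hard bounds here).
RANGES = {"LeapMotion": (0.0, 0.4), "Kinect": (0.8, 3.5)}
MAX_VELOCITY_MPS = 2.0  # hypothetical cut-off for tracking reliability


def select_listener(readings: List[Reading]) -> Optional[str]:
    """Choose the next listening controller: in range, plausible speed, highest confidence."""
    candidates = [
        r for r in readings
        if r.device in RANGES
        and RANGES[r.device][0] <= r.distance_m <= RANGES[r.device][1]
        and r.velocity_mps <= MAX_VELOCITY_MPS
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda r: r.confidence).device


# A user 0.3 m away: only the Leap Motion reading is in range,
# even though the Kinect reports a higher confidence.
print(select_listener([
    Reading("LeapMotion", 0.3, 0.1, 0.7),
    Reading("Kinect", 0.3, 0.1, 0.9),
]))  # LeapMotion
```

Filtering before ranking keeps a confident but out-of-range device from being selected; a weighted score combining distance, velocity and confidence would be an equally plausible alternative.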

The Core Middleware service (see Figure 6) is the main service that implements the haptic control middleware. It is the service point through which third-party systems or a directly connected user interface plugin interface and interact with the middleware. The Haptic UI interface (Figure 6) represents a user interface that can interact with the middleware system. In the Smart Learning Environment, the core middleware service could use a number of sensors or integrate with interactive boards or any nodes connected to IoT peripherals.

The Device Configuration service (see Figure 6) holds an XML configuration of all defined devices, containing each device’s name, its connection method and some of its basic capabilities. The Haptics Network service, in turn, outputs gesture data to the network space, so that systems across the internet can be operated using the haptic controllers attached to the workstation.

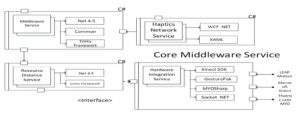

Implementation Architecture

The middleware framework is implemented using a Service Oriented Architecture, which provides the technical implementation and integration between the hardware, the software and the architectural designs, based on the business processes defined in the business model of the Smart Learning Environment. The implementation architecture provides an overview that assists in the actual system design and in the interaction between layers. The middleware is divided into two main service layers: the Core Middleware Service Layer and the Lower Configuration Layer.

Figure 6: High level Design (Core Middleware Service)

Core Middleware Service Layer

The Core Middleware Service (see Figure 6) implements the haptic controller framework. It is composed of four main services:

• Middleware Service: the outbound service which implements the middleware API for third party systems or user interfaces.

• Haptics Network Service: a network service that outputs gesture data across the internet via TCP/IP or HTTP endpoints. It uses the Windows Communication Foundation (WCF) framework and interfaces with IoT nodes and peripherals.

• Resource Distance Service: controls the logic for switching between hardware devices based on availability and other parameters, such as distance and velocity, for improved system awareness.

• Hardware Integration Service: handles all devices connected to the middleware system. Each device is implemented using its corresponding library and exposes a common interface for ease of interaction.
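In the prototype the Haptics Network Service is built on WCF. As a language-neutral illustration of what sending gesture data over a raw TCP/IP stream involves, the sketch below uses length-prefixed JSON framing, a common choice because TCP delivers a byte stream with no message boundaries. This framing, the `GestureEvent` fields and the function names are assumptions made for the example and do not describe the WCF endpoints the prototype actually exposes.

```python
import json
import struct
from dataclasses import asdict, dataclass


@dataclass
class GestureEvent:
    device: str
    gesture: str
    distance_m: float
    timestamp_ms: int


def encode_frame(event: GestureEvent) -> bytes:
    """Serialise an event as UTF-8 JSON, prefixed with its 4-byte big-endian length."""
    payload = json.dumps(asdict(event)).encode("utf-8")
    return struct.pack(">I", len(payload)) + payload


def decode_frame(frame: bytes) -> GestureEvent:
    """Inverse of encode_frame: read the length prefix, then parse that many bytes."""
    (length,) = struct.unpack(">I", frame[:4])
    payload = frame[4:4 + length]
    if len(payload) != length:
        raise ValueError("incomplete frame")
    return GestureEvent(**json.loads(payload.decode("utf-8")))


event = GestureEvent("Kinect", "swipe_left", 2.1, 1_000)
frame = encode_frame(event)
print(decode_frame(frame) == event)  # True
```

A receiver would read exactly four bytes, then exactly the announced number of bytes, before decoding; the same event structure could equally be posted as a JSON body to an HTTP endpoint.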

Figure 7: Implementation Architecture (Core Middleware Service Layer)

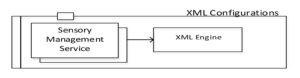

Lower Configuration Layer

The Lower Configuration Layer (see Figure 8) implements the haptic configuration service from the high-level design. This layer implements an XML engine that creates and parses hardware configuration data. For example, the information stored for each haptic device includes its name, its connection type (Bluetooth, TCP/IP, USB or Serial) and any additional configuration data relevant to the middleware system.
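The configuration data described above (device name, connection type and additional settings) maps naturally onto a small XML document. The Python sketch below uses the standard library's `xml.etree.ElementTree` to parse and regenerate such a file; the element and attribute names (`devices`, `device`, `setting`, `connection`) and the sample values are a hypothetical schema, not the prototype's actual format. Connection types other than the four listed are rejected.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical configuration schema; element and attribute names are assumptions.
CONFIG_XML = """
<devices>
  <device name="Kinect" connection="USB">
    <setting key="minDistance" value="0.8"/>
    <setting key="maxDistance" value="3.5"/>
  </device>
  <device name="MYO" connection="Bluetooth"/>
</devices>
"""

ALLOWED_CONNECTIONS = {"Bluetooth", "TCP/IP", "USB", "Serial"}


@dataclass
class DeviceConfig:
    name: str
    connection: str
    settings: Dict[str, str] = field(default_factory=dict)


def parse_config(xml_text: str) -> List[DeviceConfig]:
    """Parse device entries, validating each connection type."""
    root = ET.fromstring(xml_text)
    configs = []
    for node in root.findall("device"):
        connection = node.get("connection")
        if connection not in ALLOWED_CONNECTIONS:
            raise ValueError(f"unsupported connection type: {connection}")
        settings = {s.get("key"): s.get("value") for s in node.findall("setting")}
        configs.append(DeviceConfig(node.get("name"), connection, settings))
    return configs


def to_xml(configs: List[DeviceConfig]) -> str:
    """Create the XML text for a list of device configurations."""
    root = ET.Element("devices")
    for c in configs:
        dev = ET.SubElement(root, "device", name=c.name, connection=c.connection)
        for key, value in c.settings.items():
            ET.SubElement(dev, "setting", key=key, value=value)
    return ET.tostring(root, encoding="unicode")


for config in parse_config(CONFIG_XML):
    print(config)
```

Because `to_xml` and `parse_config` are inverses for valid input, a configuration edited in code can be written back and reloaded without loss.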

Figure 8: Implementation Architecture (Lower Configuration Layer)

Figure 9: Low Level Design (Haptics Middleware API)

Figure 10: Low Level Design (Haptics Middleware API)

Prototype Development

The prototype of the haptic middleware system (Figure 11) allows developers to use different haptic devices to interact with the system, with each haptic controller’s strengths covering the other devices’ weaknesses (Table 2). The framework provides useful data such as gesture interactions, user or hand distances, the velocity of human motion and general skeleton statistics. With this in mind, a functional prototype was developed to demonstrate:

• Ability to integrate multiple peripherals and sensors into the framework.

• Ability for the sensors to interact based on a common gesture library.

• Ability for the middleware to sense user distance and velocity and use them as factors in determining the next listening haptic controller.

Table 2: How each haptic controller’s strengths compensate for the other controllers’ weaknesses

Final System

A working prototype of the Haptic Middleware software (see Figure 11) was designed and developed to identify, manage and visualise data received from several types of haptic hardware, for each of which specialised services were implemented. The initial requirement was to sense and serve users within a 10 metre range.

Figure 11: Final prototype for Haptic Middleware system

Future Development

There are various opportunities for developing the haptic middleware system within current learning systems. The system can leverage existing computers, and additional devices can be added to increase its capability; for example, speech recognition systems could be installed, especially in the library. The network service could be further integrated into the cloud environment, with the ability to connect IoT peripherals. The device-switching algorithms should also be improved to capture the varied requirements of teachers and students. More consideration should also be given to the characteristics and behaviour of users when evaluating learning space designs for adoption.

Experimental Analysis

The new approach is expected to make teaching and learning more effective than the traditional system. The prototype demonstrates a highly interactive classroom that should enhance students’ interest and attention in learning and stimulate their curiosity, which is one of the factors that contribute to learning. The objective is to create teaching and learning spaces that promote knowledge and its application to real life, in which learning objectives such as students’ qualities and abilities and the course learning outcomes are realised. In addition, the physical arrangement lets the teacher know the status of each student and adjust learning activities to suit the learners, which can improve students’ academic performance and outcomes. The new system design also supports a variety of teaching and learning approaches, and is more engaging, student-centered, interactive and reflective, and promotes collaboration (Yang & Huang 2015).

Conclusion

Current design practices for learning environments will need to change to support evolving pedagogical techniques and to meet students’ expectations. This study has explored a new system architecture design that creates opportunities for innovative technologies in the education process, focusing on a collaborative, smart, interactive learning space. The approach shows how haptic technologies can be integrated into the architecture of a smart learning environment by designing the components of a service-oriented software middleware through careful architectural development and design. As a result, the functional prototype demonstrates an innovative way of using haptic devices within a Smart Learning Environment. Some areas could still be improved to enhance usability, but, to the authors’ knowledge, this middleware is the first to enable software systems to be controlled through multiple gesture devices.

References

1. Augusto, J.C., Nakashima, H. & Aghajan, H. (2010), ‘Ambient intelligence and smart environments: A state of the art’, Handbook of Ambient Intelligence and Smart Environments, Springer, pp. 3-31.

2. Birchfield, D. & Megowan-Romanowicz, C. (2009), ‘Earth science learning in SMALLab: A design experiment for mixed reality’, International Journal of Computer-Supported Collaborative Learning, vol. 4, no. 4, pp. 403-21.

3. Bouslama, F. & Kalota, F. (2013), ‘Creating smart classrooms to benefit from innovative technologies and learning space design’, Current Trends in Information Technology (CTIT), 2013 International Conference on, IEEE, pp. 102-6.

4. Colella, V. (2000), ‘Participatory simulations: Building collaborative understanding through immersive dynamic modeling’, The Journal of the Learning Sciences, vol. 9, no. 4, pp. 471-500.

5. National Science Foundation (2010), Preparing the next generation of STEM innovators: Identifying and developing our nation’s human capital, National Science Foundation.

6. Harrison, R. (2013), TOGAF® 9 Foundation Study Guide, Van Haren.

7. Jena, P.C. (2013), ‘Effect of Smart Classroom Learning Environment on Academic Achievement of Rural High Achievers and Low Achievers in Science’, International Letters of Social and Humanistic Sciences, no. 03, pp. 1-9.

8. Kossiakoff, A., Sweet, W.N., Seymour, S. & Biemer, S.M. (2011), Systems engineering principles and practice, vol. 83, John Wiley & Sons.

9. Lui, M., Kuhn, A.C., Acosta, A., Quintana, C. & Slotta, J.D. (2014), ‘Supporting learners in collecting and exploring data from immersive simulations in collective inquiry’, Proceedings of the 32nd annual ACM conference on Human factors in computing systems, ACM, pp. 2103-12.

10. Moher, T. (2006), ‘Embedded phenomena: supporting science learning with classroom-sized distributed simulations’, Proceedings of the SIGCHI conference on Human Factors in computing systems, ACM, pp. 691-700.

11. Price, S. & Rogers, Y. (2004), ‘Let’s get physical: the learning benefits of interacting in digitally augmented physical spaces’, Computers & Education, vol. 43, no. 1, pp. 137-51.

12. Puder, A., Römer, K. & Pilhofer, F. (2006), Distributed systems architecture: a middleware approach, Elsevier.

13. Rogers, Y. & Muller, H. (2006), ‘A framework for designing sensor-based interactions to promote exploration and reflection in play’, International Journal of Human-Computer Studies, vol. 64, no. 1, pp. 1-14.

14. Sailor, W. (2009), Making RTI work: How smart schools are reforming education through schoolwide response-to-intervention, John Wiley & Sons.

15. Slotta, J.D., Tissenbaum, M. & Lui, M. (2013), ‘Orchestrating of complex inquiry: Three roles for learning analytics in a smart classroom infrastructure’, Proceedings of the Third International Conference on Learning Analytics and Knowledge, ACM, pp. 270-4.

16. Sobh, T.M. & Elleithy, K. (2013), Emerging Trends in Computing, Informatics, Systems Sciences, and Engineering, Springer.

17. Yang, J. & Huang, R. (2015), ‘Development and validation of a scale for evaluating technology-rich classroom environment’, Journal of Computers in Education, pp. 1-18.
