Introduction

For decades, the role of information technology (IT) in organizations has been stressed in the Information Technology/Information System (IT/IS) literature. The last decade has witnessed a growing volume of commercial operations characterized by increased use of IT, and this technological transformation has also affected the way firms manage their businesses. Today, IT is often perceived as a source of competitive advantage that strengthens the firm’s capacity to withstand environmental risks (Cotton and Bracefield, 2000; Huerta and Sanchez, 1999; Lubbe and Remenyi, 1999). Increasing spending on IT (Remenyi and Sherwood-Smith, 1999; El-Imad and Tang, 2001; Irani and Love, 2002) and the risks attached to its use in organizations have made managers aware of the importance of IT for the survival of firms and the realization of any competitive advantage (Feeny and Ives, 1997).

Most studies confirm the major importance of the decision making process in IT investment (Sharif and Irani, 1999; Farbey et al., 1999; Kettinger and Lee, 1995). The adoption of ICT is often seen in the literature as essential, even though it is lengthy, costly, complex and risky. Investment planning and coordination tasks are therefore crucial if any results are to be achieved.

Yet studies have shown that IT investment projects are difficult to carry out, with the difficulties relating either to the duration or to the budget set for the investment. For instance, in a study of 100 firms, Gordon (1999) found that only 37% of the sample firms completed their IT investment project on time, and only 42% stayed within the budget set for the investment. In a more recent study, El-Imad and Tang (2001) showed that only 40% of the 310 firms investigated were able to complete their ICT investment project on time.

As technology has become an integral part of a firm’s fabric, choosing the appropriate technology for the business requirements has become more important than ever. Because investment results can have repercussions on the firm’s design, on its work processes and on its future economic prosperity, the investment decision remains one of the most important activities of firms (Doherty and King, 2005).

As a consequence, the need to determine the value of IT with certainty has, over time, led researchers to develop a great many theories, frameworks and techniques for evaluating IT/IS investments. With such a diversity of available methods, investing in IT should become a less and less daunting task. However, the literature suggests that only a few of these methods are actually used. Hence, sound decision making and evaluation practices for IT investment are rarely followed (Farbey et al., 1999; Lubbe and Remenyi, 1999).

This research paper addresses the whole decision making process, from analysis and planning to post-implementation evaluation of IT. It also aims to investigate the applicability and feasibility of the theories and techniques relevant to IT investment and evaluation decisions, as observed in Tunisian firms.

In the following part, we first present the generic decision making process, which is composed of four steps: analysis and planning, evaluation of costs and benefits, selection and implementation, and post-implementation evaluation. We then present the evaluation of IT investments through four evaluation approaches, namely the economic approach, the strategic approach, the analytic approach and the integrated approach. Our conceptual model and research hypotheses follow. Finally, we present and discuss the research findings.

The Generic Decision Making Process of Investments in IT

As will be discussed in this part, most studies dealing with IT are centered on the evaluation of investments rather than on the related decision making process. Decision making is widely explored in decision science. However, only a limited number of studies specifically investigate the procedures that organizations apply to the development, evaluation, choice and management of IT investment projects. Consequently, the discussion of the decision making process for IT investment is centered on a review of these studies.

Farragher et al. (1999) suggest that sophisticated decision making requires a structured process. Drawing on the eight steps of the investment system initially modeled by Gallenger (1980) and Gordon and Pinches (1989), together with other documented methodologies (Czernick and Quint, 1992; Hogbin and Thomas, 1999; Mc Kay et al., 2003), the decision making process for IT investments can be summed up in four distinct steps:

1. Analysis and planning;

2. Evaluation of costs and benefits;

3. Selection and implementation;

4. Post-implementation evaluation.

These steps are not necessarily sequential. In fact, Johansen et al. (1995) recommend that certain steps be repeated, depending on the project’s complexity and nature, if the decision making process is to be efficient.

Analysis and Planning

A review of the literature shows that most research on IT investment focuses only on evaluation techniques and methods rather than on the whole decision making process, of which evaluation is just one step (Irani et al., 1998; Ballantine and Stray, 1999; Irani, 2002; Cronholm and Goldkuhl, 2003). Nevertheless, the majority of these studies state that analysis and planning must be rigorously carried out before an investment decision is taken.

The literature on strategic planning and development of IT has flourished since the early 1980s, with the development of computer science. Strategic use of IT can be used to gain competitive advantage, increase market share, improve organizational processes and increase staff productivity (Banker and Kauffman, 1991; Baker, 1995; Earl, 1989; Robson, 1997; Fidler and Rogerson, 1996). Khalifa et al. (2001) suggest that there are two important reasons for purchasing, replacing or modifying IT: changing organizational processes and improving organizational efficiency and effectiveness. It would therefore be reasonable to suppose that most investments are made on the basis of a detailed study and a clear vision, and that benefits such as competitive advantage are well understood. Unfortunately, as most studies indicate, this is rarely the case.

Hogbin and Thomas (1994, p. 36, cited by Willcocks, 1996) identify several problems that firms face during analysis and planning activities for IT investment decisions:

1. Planning is of little use in an investment decision in IT;

2. There is a lack of clear procedures linking the organizational planning and the project evaluation activities;

3. The choice of projects is carried out according to financial criteria rather than to the firm’s needs;

4. There is no synchronization between the organizational process and the planning cycles of projects investing in IT.

The IT literature also refers to the difficulties of planning and developing a strategic orientation for IT investments that meets organizational imperatives. On the one hand, the real purpose of an IT investment may not always be recognized in advance (Bacon, 1994). On the other hand, the consequences of IT investments are not always easy to predict (Clemons, 1991; Maritan, 2001).

Building on these notions, Robson (1997) also asserts that organizations that cannot allocate resources efficiently to IT investment find it hard to develop effective IT strategies. In fact, empirical results show that the benefits drawn from IT are higher in organizations where IT strategies are aligned with organizational objectives than in those where they are not (Tallon et al., 2000).

Taking the discussion above into account, it is clear that analysis and planning are crucial to the investment process. Farragher et al. (1999) propose a proactive approach to the selection of IT investments. They start with a thorough analysis of organizational strategies, which includes determining the organization’s advantages in terms of markets, products and services.

Other researchers also propose a series of best practices, including close involvement of the parties concerned in the analysis and planning activities (Agle et al., 1999; Berman et al., 1999; Whitley, 1999) and the adoption of an iterative approach to decision making (Remenyi and Sherwood-Smith, 1999). They argue that involving the main parties concerned from the initial phase allows organizations to place investments on their agendas rather than focusing on investment criteria alone. Farbey et al. (1999) add that, to be effective, decision making should be iterative as well as participative. More specifically, the feasibility of the investment should be evaluated at each step of its life cycle.

Evaluation of the Cost and Benefits of Investments

Evaluation is a crucial stage in the decision making process, so much so that the term “evaluation” is sometimes used in the literature to refer to the whole decision making process. Academics have stressed the importance of investment evaluation for several decades (Gitman and Forester 1977; Gallenger, 1980; Canada and White, 1980; Pinches, 1982). This continuing attention seems a logical consequence of the rising concern about the capacity of classical evaluation techniques to determine the real value of an IT investment project (Farbey et al., 1999; Imi et al., 1998; Khalifa et al., 2001; Proctor and Canada, 1992).

The aim of evaluation is to determine whether the investment can meet the specific needs identified in the analysis and planning stage (Tallon et al., 2000); that is, to evaluate the financial impacts, the potential value for the firm and the risks involved. It is at this stage that organizations assess investments against their strategic, financial and technical objectives and compare them with other available investment opportunities. However, while evaluation in theory gives organizations an opportunity to measure the potential value of IT investment initiatives, studies have shown that it often has negative effects because it is reduced to a matter of budgetary constraints (Irani et al., 1998; Primrose, 1991; Farbey et al., 1999).

Such an attitude often leads to an occasional, informal evaluation process and allows project champions, who are fully committed to the project’s success, to emphasize potential benefits and play down costs (Irani and Love, 2002; Irani et al., 1997; Nutt, 1999). It is often only after implementation that the project turns out to be a good investment or to fall below expectations.

To address these concerns, several evaluation approaches have been developed over the years. Some researchers, including Parker et al. (1998) and Rayan and Harisson (2000), offer a more refined classification of the benefits of IT as quantitative, quasi-quantitative or qualitative. Nevertheless, most of the approaches cited in the literature can simply be classified as quantitative or qualitative.

Investment Selection and Implementation

The evaluation stage of the decision making process is followed by the selection and implementation of the project that corresponds to the evaluation outcomes. Project selection is a relatively simple process as long as the investment is able to meet the strategic, financial and technical objectives. However, a single IT investment project may not be able to satisfy all of these objectives. Managers’ judgment is therefore often necessary to determine the trade-offs between objectives, values and stakes.

Even when the investment opportunity is perceived as favorable, the project’s success may still be in doubt, given the uncertainty surrounding implementation deadlines and quality. Some researchers, such as Farbey et al. (1999), maintain that evaluation during the implementation phase is important because it allows potential problems to be detected and provides a quality control mechanism.

Yet the way evaluation is performed during implementation remains little documented in the available literature. An exception is the study by Farragher et al. (1999), which notes that 80% of the organizations surveyed build action plans and appoint project executives to monitor implementation.

Post Implementation Evaluation

The investment process does not stop with the implementation of the IT project. The post-implementation review plays a significant role in the IT investment cycle. Once a technology is implemented and put into operation, post-implementation evaluation gives management an opportunity to make sure the project has been carried out as planned. It allows the impact of the IT investment to be checked in terms of organizational value, benefits and costs. The impact of IT implementation is also compared with the original estimates, and any discrepancy is investigated. This evaluation is performed continuously, until the organization considers alternatives or replacement options.

Apart from determining how far the implemented system meets the original forecasts, post-implementation evaluation also facilitates organizational learning. Remenyi and Sherwood-Smith (1999) suggest that evaluation should be formative if firms want to draw lessons from it, and they stress the intrinsic difference between formative and summative evaluation. On the one hand, they think that summative evaluation, which stresses results and impacts, is not sufficient to help firms learn to manage their future investments better. On the other hand, formative evaluation is not limited to the collection of statistics; it also concerns the way the project is carried out and its performance. It further seeks to understand the subjective opinions of the parties involved in the IT investment project. Identifying the subjective concerns and questions raised by these parties allows organizations to learn from past mistakes and missed opportunities (Remenyi and Sherwood-Smith, 1999).

Yet previous studies have suggested that, despite its importance in the decision making process, post-implementation evaluation remains one of the most neglected phases (Willcocks and Lester, 1997). It is a crucial phase because it provides a way to compare expected benefits with realized benefits. Patel and Irani (1999) assert that most decision makers assume that the necessary information can be entirely identified and defined in advance. Consequently, the system requirements based on this assumption are taken to be complete and accurate. However, the dynamic nature of a project is often a source of uncertainty and change. Hence, the objectives or the functionality of an implemented system can deviate from what was initially planned. That is why post-implementation evaluation is so important in determining the outcomes of an investment.

Post-implementation evaluation also enables significant learning about the organizational performance resulting from the adoption of IT. Meyers et al. (1998) likewise argue that evaluation is essential to efficient management and to improving the functioning of the information system.

Besides, the information generated by post-implementation evaluation is not only useful as feedback about the implemented technology; it is also used upstream to improve future IT investment proposals (Farbey et al., 1999; Kumar, 1990). Nevertheless, we can conclude from the literature that post-implementation evaluation of investments:

1. is on the whole not performed extensively;

2. is mainly summative;

3. is conducted informally, rather as an exception than as a rule.

It is clear that post-implementation evaluation does not generally go beyond measuring technical efficiency to cover learning and the effects of organizational change. Some researchers think that post-implementation evaluation has been treated as a justification that needs to be provided rather than as a positive action that would favor a better understanding and mastery of the project, the satisfaction of users, and the management of benefits (Farbey et al., 1999; Irani and Love, 2002). In response to these concerns, a growing number of formative measures have been developed, aiming at a better understanding and a more effective management of the benefits of IT.

Evaluation of Investments in IT

The notion of IT/IS evaluation is frequently used imprecisely. Before implementation, evaluation is the point at which the system’s potential value is examined. During implementation, evaluation assesses the quality of the IT investment. Once the project is implemented, evaluation measures its performance and its impact on the organization (Farbey et al., 1999). In this respect, evaluation plays many roles over the investment cycle, ranging from project appraisal to quality control and benefits management.

In the present literature, evaluation is often described as a process for identifying the potential benefits of an investment opportunity through quantitative or qualitative means (Ballantine and Stray, 1999). Some researchers (such as Farbey et al., 1999) note that this definition is centered mainly on project appraisal; as a result, evaluation is often regarded as a process for justifying the project. Ward et al. (1996) define evaluation and benefit management as “The organization and management process that allows the potential benefits raised by the use of ITs to be effectively realized.”

Even though this rather theoretical definition accounts for the role of evaluation in the decision making process, the definition of IT evaluation given by Farbey et al. (1999) is more operational: “A process, or a set of parallel processes, that take place at different times or without interruption, to investigate and make clear, quantitatively or qualitatively, all the impacts of an IT project, and the program and strategy of which it is part.”

Consequently, understanding the different evaluation approaches used during the investment cycle allows firms to better select, control and measure their investments in IT.

In their study, Renkema and Berghout (1997) provide a thorough list of 65 quantitative and qualitative techniques used in the evaluation of IT investments. Given the number of methods available, choosing the appropriate technique or techniques has become a real challenge (Patel and Irani, 1999). Over time, a number of schemes have been proposed to classify the different evaluation methods. The following paragraphs deal with some of the most representative classification approaches.

Robson (1997) devised a classification scheme based on the principle that the objective of evaluation should vary according to the objective of the investment. For instance, if an investment is assessed according to its financial impact, techniques such as net present value (NPV) and return on investment (ROI) are judged adequate. If, on the other hand, an investment is evaluated according to its strategic impact, techniques such as critical success factors are more appropriate.
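To make the two financial techniques concrete, the following minimal sketch computes net present value (NPV) and a simple return on investment (ROI) for one project. The cash flows, the 10% discount rate and the function names are hypothetical illustrations and do not come from Robson (1997) or from the surveyed firms.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] is the outlay at t = 0 (usually negative)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def roi(total_benefit, total_cost):
    """Simple (undiscounted) return on investment, as a fraction of cost."""
    return (total_benefit - total_cost) / total_cost


# Hypothetical IT project: 100 spent now, 40 returned in each of the next 3 years.
flows = [-100.0, 40.0, 40.0, 40.0]
project_npv = npv(0.10, flows)                            # discounted at an assumed 10%
project_roi = roi(total_benefit=120.0, total_cost=100.0)  # undiscounted

print(round(project_npv, 2), round(project_roi, 2))  # -0.53 0.2
```

In this illustrative case the undiscounted ROI is positive (20%) while the NPV at a 10% discount rate is slightly negative, which shows why the choice of financial technique can change the apparent merit of the same project.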

Renkema and Berghout (1997) developed a slightly different system. Their classification is centered on the nature of the evaluation techniques rather than on the types of investment that are the focus of Robson’s scheme. They distinguish four basic evaluation approaches, one quantitative and the other three qualitative:

- The financial approach (for instance, NPV, ROI, IRR)

- The multi-criteria approach (for instance, the Information Economics model)

- The ratios approach (for example, ROM, ROA, ROE)

- The portfolio approach (for example, Bedell’s method)

Irani et al. (1997), whose work was later adapted by Patel and Irani (1999) and Irani and Love (2002), proposed an approach that takes into consideration both investment types and the characteristics of evaluation techniques. In all, they identified 18 evaluation methods and classified them into four distinct approaches:

- An economic approach (for example, DCF, ROI and payback period)

- A strategic approach (for example, competitive advantage and the CSF method)

- An analytical approach (for example, risk analysis and value analysis)

- An integrated approach (for example, the Information Economics model and the Balanced Scorecard).
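As an illustration of the economic approach, the payback (recovery) period can be sketched as follows. This is a minimal example under stated assumptions: the function name, the linear interpolation within the recovery year and the figures are hypothetical and are not taken from the cited authors.

```python
def payback_period(initial_outlay, annual_inflows):
    """Years needed for cumulative inflows to cover the outlay.

    Interpolates linearly within the year of recovery (an assumption);
    returns None if the outlay is never recovered.
    """
    if initial_outlay <= 0:
        return 0.0
    remaining = initial_outlay
    for year, inflow in enumerate(annual_inflows, start=1):
        if inflow >= remaining:
            # Recovery happens part-way through this year.
            return (year - 1) + remaining / inflow
        remaining -= inflow
    return None


# Hypothetical project: outlay of 100, inflows of 40 per year for three years.
print(payback_period(100.0, [40.0, 40.0, 40.0]))  # 2.5
```

Unlike discounted techniques, this measure ignores the time value of money and any inflows received after the recovery date, which is one reason it is usually combined with other criteria.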

Compared with the evaluation approaches identified by Renkema and Berghout, this classification offers better guidance on which techniques can be applied to which types of investment. Over time, more researchers, such as Grover et al. (1998), have started to explore the relation between types of investment and the choice of evaluation techniques.

Conceptual Model and Research Hypotheses

Conceptual Model

The literature review has raised a number of questions relevant to the present research on IT investment decisions. Three major limitations have been identified: 1) most research tends to focus on evaluation rather than on the IT investment decision making process; 2) it is not clearly established which of the evaluation theories and techniques proposed by researchers are currently applied by organizations; and 3) there is a lack of empirical evidence about the reasons behind the use of particular evaluation techniques and the way they are applied in the organization’s specific context.

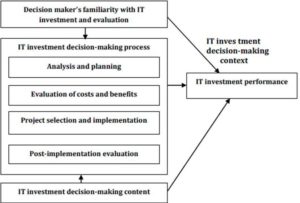

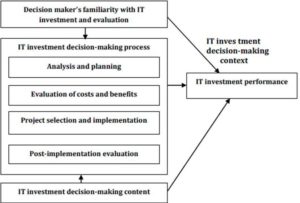

The three limitations mentioned above form the basis of the problem areas that this research explores. Decision making about IT investment is often considered a structured process running from analysis and planning to evaluation and post-implementation management (Farragher et al., 1999). As the study emphasizes the exploration of the whole decision making process, a theoretical framework that can capture the content, process and context aspects of evaluation seems appropriate. The evaluation framework proposed by Serafeimidis and Smithson (1996 and 1999) successfully identifies these three aspects of IT decision making and the interdependencies between them.

The results of the evaluation of IT investments, and of the generic decision making process as a whole, depend on the interactions between the evaluation context, the decision making process and the decision criteria. Relying on the framework of Serafeimidis and Smithson (1999), our simplified conceptual model is presented as follows:

Figure 1: The Proposed Conceptual Framework

Research Hypotheses

In this study, several variables are examined according to their association with the efficiency of the process. These are the evaluation techniques used, the perceived efficiency of these techniques, the existence of formal procedures for the higher stages of IT investment decisions, and the decision criteria used in this investment. The following paragraphs discuss these variables and the proposed hypotheses.

As stated in the previous sections, the literature asserts that, for IT investment decisions to be taken properly, quantitative as well as qualitative evaluation techniques should be included in the evaluation (Patel and Irani, 1998; Ballantine and Stray, 1999). Some qualitative factors, such as quality improvement, are often hard to quantify directly or to value explicitly. Consequently, quantitative methods, which stress financial measures, are unlikely to identify all the costs and benefits of adopting IT. Besides, while the growing importance of non-financial techniques is often reported in the literature, questions remain about whether the use of these techniques makes the decision making process more efficient. Hypotheses H1a to H1c are therefore developed to deal with these questions.

H1a: The use of multiple evaluation techniques is related to the efficacy of the adopted evaluation techniques.

H1b: The use of multiple evaluation techniques is related to the efficacy of the adopted decision making process.

H1c: The perceived efficacy of the evaluation techniques is related to the efficacy of the adopted decision making process.

Although the choice of appropriate techniques for evaluating IT investment is important, the adoption of IT also involves other actions, such as complex and systemic planning, coordination, measurement and monitoring of activities (Irani and Love, 2002; Sharif and Irani, 1999; Kettinger and Lee, 1995). However, the evidence in the literature suggests that the scope of these activities is limited (Kumar, 1990; Hallikainena and Nurmimaki, 2000). To determine whether a detailed decision making process, which includes project planning and post-implementation evaluation, has a significant impact on the efficiency of the process, the following hypothesis is proposed:

H1d: The existence of a formal procedure for the higher stages of the decision making process of investments in IT is linked to the efficiency of the decision making process adopted.

As with the use of multiple evaluation techniques, the use of multi-criteria methods in the evaluation of IT investments has generally been regarded by many authors as desirable (Bacon, 1994). As discussed in the previous paragraphs, it is often better for investment decision criteria to be developed to support the implicit and explicit organizational objectives. Hypothesis H1e is therefore developed to study the association between the decision criteria used and the efficiency of the decision process adopted by organizations.

H1e: The use of several types of decision criteria is linked to the efficacy of the decision making process adopted.

In the preceding paragraphs, research hypotheses were developed to test the relation between the variables describing the decision process and decision content of IT investments and the efficiency of the decision process. The objective of the following paragraphs is to investigate the relation between these variables and the performance of IT investments.

The decision making activities, criteria and techniques correspond to the five variables previously defined: the evaluation techniques used, the efficiency of the evaluation techniques used, the existence of formal procedures for the higher stages of the decision making process, the efficiency of the overall decision making process and the decision criteria used in IT investments. The following paragraphs discuss these variables and the proposed hypotheses.

One of the most important criticisms directed at traditional evaluation methods for IT investments is their inability to capture fully the benefits and costs of a project. Researchers have called for a stronger emphasis on non-economic measures in order to capture the complexity of the benefits and costs of IT. However, information systems researchers question whether the improvement and development of new evaluation methods have actually led to their adoption. A related question is whether the adoption of such evaluation techniques has led to better performance of IT investments. These questions are tested by the following two hypotheses:

H2a: The use of multiple evaluation techniques is linked to the perception of better performance of the implemented IT investments.

H2b: The efficacy of the adopted evaluation techniques is linked to the perception of better performance of the implemented IT investments.

It has been suggested in H1d that there may be a relation between the existence of a formal procedure for the higher stages of the IT investment decision making process and the perceived efficiency of the adopted decision making process. It has also been suggested that the existence of a formal procedure is linked to the performance of the implemented system. The reason is that a formal decision making process whose structure, activities and responsibilities are well defined would yield better IT investment decisions and thus lead to a better performing system. Hence, the following two hypotheses are developed:

H2c: The existence of a formal procedure for the higher stages of the decision making process in investments in IT is connected to the perception of higher performance of the implemented IT investments.

H2d: The efficacy of the adopted decision making process is linked to the perception of better performance of the implemented IT investments.

The selection of criteria for an IT investment decision generally depends on the objective of the IT concerned. Yet the benefits of IT are not always explicit, and some indirect benefits may follow from its adoption. It has therefore been proposed that, since IT investments differ from one another, covering the three types of investment (strategic, informational and transactional) will lead to better overall IT performance. This proposition is tested by the following hypothesis:

H2e: The use of several types of decision criteria is linked to the perception of better performance of the implemented investments in IT.

It is an established fact that the decision maker’s familiarity with the relevant theories and techniques for evaluating IT investments often plays a key role in sophisticated IT investment decision making (Bronner, 1982; Weil, 1992; Nutt, 1997 and 1999; Thong, 1999; Lindgren and Wieland, 2000). What remains largely unknown, however, is the extent to which the decision maker’s familiarity relates to the process, content and results of the decision to invest in IT. Do decision makers who are deeply familiar with the appropriate theories and techniques introduce more sophisticated methods into their decision making tasks? Do such decision makers perceive the decision processes they adopt as more satisfactory than those adopted by others with a lower degree of familiarity? The following hypotheses have been formulated to answer these questions:

H3a: The decision maker’s familiarity with the recent literature of investment decision making in IT is linked to the use of multiple evaluation techniques.

H3b: The decision maker’s familiarity with the recent literature of investment decision making in IT is linked to the efficiency of the evaluation techniques used.

H3c: The decision maker’s familiarity with the recent literature of investment decision making in IT is linked to the existence of a formal procedure for the higher stages of the decision making process.

H3d: The decision maker’s familiarity with the recent literature of investment decision making in IT is linked to the efficiency of the decision making process adopted.

H3e: The decision maker’s familiarity with the recent literature of investment decision making in IT is linked to the use of several types of decision criteria.

H3f: The decision maker’s familiarity with the recent literature of investment decision making in IT is linked to the performance of the implemented investments in IT.

Methodological Framework

Research Sample

The sample is made up of 33 Tunisian industrial firms, a panel that allows the results obtained to be generalized. In practice, we faced the reluctance of some firms to disclose information and the refusal of others to take part in the study for lack of time or interest. This lack of collaboration was a major limitation on the sample, which was reduced to only 33 respondents, that is, a response rate of 40%.

Data Collection

Data collection proceeded in three steps. First, we constructed the initial questionnaire, which comprises five fundamental parts: the organization’s profile, general questions on the decision making process in IT investments in its planning and feasibility stages, its evaluation stage, and its selection, implementation and post-implementation phases. Then, we performed a pre-test, an indispensable step that is likely to improve the questionnaire’s quality noticeably; it serves as a preliminary validation of the questionnaire before it is finally submitted to respondents. Finally, we administered the questionnaire by e-mail, by self-administration, or face to face.

Measuring the Reliability of Scales

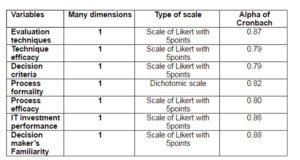

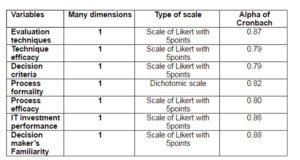

Apart from being valid, the measurement of a variable must be reliable in order to be useful and to yield stable results (Stratman and Roth, 2002). Reliability is particularly important because it evaluates the extent to which a multiple-item scale actually measures the same construct. There are several statistical methods for determining the internal reliability of measurement items; the most frequently used is Cronbach’s alpha (Sedera et al., 2003a; Santos, 1999).
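As an illustration of how such a coefficient is obtained, the following minimal sketch computes Cronbach's alpha with the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of the total score). The function name `cronbach_alpha` and the answers of five respondents to three items are hypothetical; they are not taken from the study's questionnaire.

```python
from statistics import variance


def cronbach_alpha(items):
    """Return Cronbach's alpha for a multiple-item scale.

    items: list of k lists, one per scale item, each holding the scores
    of the same n respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("a scale needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(scores) != n for scores in items):
        raise ValueError("every item needs the same respondents (at least two)")

    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(scores) for scores in items)
    # Sample variance of each respondent's total score across all items.
    totals = [sum(scores[i] for scores in items) for i in range(n)]
    total_variance = variance(totals)

    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 5-point Likert answers: 3 items, 5 respondents.
example_items = [
    [2, 4, 4, 5, 3],
    [3, 4, 5, 5, 2],
    [2, 5, 4, 4, 3],
]
print(f"alpha = {cronbach_alpha(example_items):.3f}")
```

Dropping an item from `items` and recomputing the coefficient mirrors the kind of item omission that is commonly used to raise the reliability of a scale.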

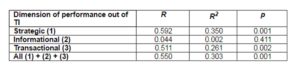

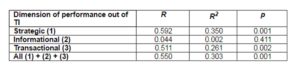

The table below shows the reliability of the measurement scales. Some items were omitted from the original scales to improve their reliability. However, by using pre-tests, we made sure that content validity was not threatened by the omission of these items (Stratman and Roth, 2002). All the scales show sufficiently high reliability.

Table 1: Scales of Measurement of the Variables and the Coherence of the Items

Analysis Method

To analyze the results, we relied on descriptive and inferential statistics. A looser significance level (α) of 0.05 was chosen to improve the overall power of the statistical tests of the hypotheses. Normality of the data was also a precondition for conducting the inferential analyses. For statistical inference, the study used the following methods: the chi-square test, Pearson correlation coefficients (high correlation if r = ± 0.5 to ± 1.0, average correlation if r = ± 0.3 to ± 0.49, and low correlation if r = ± 0.1 to ± 0.29), multiple regression, the Kruskal-Wallis test, Student’s t-test and ANOVA.
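The correlation thresholds above can be written as a small helper. This is only a sketch: the function name `correlation_strength` is hypothetical, the label "negligible" for |r| below 0.1 is an assumption (the text does not name that range), and the cut-offs are applied at 0.3 and 0.5 so that values such as 0.295 fall into the lower band.

```python
def correlation_strength(r):
    """Classify a Pearson coefficient using the thresholds stated in the text."""
    magnitude = abs(r)
    if magnitude > 1:
        raise ValueError("a correlation coefficient must lie in [-1, 1]")
    if magnitude >= 0.5:
        return "high"
    if magnitude >= 0.3:
        return "average"
    if magnitude >= 0.1:
        return "low"
    # Assumption: the text gives no label below 0.1.
    return "negligible"


for r in (0.542, 0.427, -0.165):
    print(r, correlation_strength(r))
```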

Results and Discussion

Results

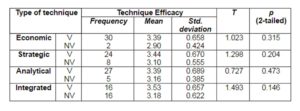

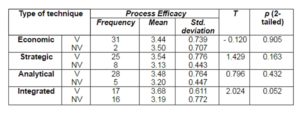

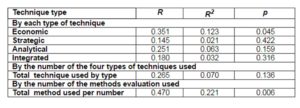

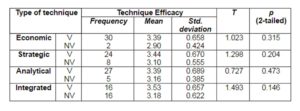

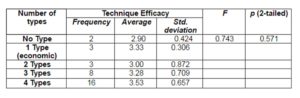

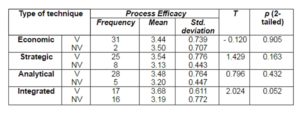

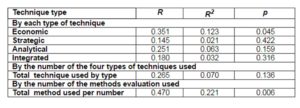

The existence of a relation between the two variables in H1a was tested in three steps. In the first step, a series of t-tests was performed between “valid technique” (V) and “non valid technique” (NV) for the four types of evaluation techniques. Results are presented in Table 2.
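The group comparison used in this first step can be sketched as follows. This is a minimal illustration of a pooled-variance Student t-test; the function name `pooled_t_test` and the efficiency scores are hypothetical, and the paper does not state whether equal variances were assumed.

```python
import math
from statistics import mean, variance


def pooled_t_test(group_a, group_b):
    """Return (t, df) for an independent-samples t-test with pooled variance."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Weighted average of the two sample variances.
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / df
    standard_error = math.sqrt(pooled_variance * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df


# Hypothetical technique-efficiency scores for the V and NV groups.
valid_group = [4, 5, 6]
non_valid_group = [1, 2, 3]
t, df = pooled_t_test(valid_group, non_valid_group)
print(f"t({df}) = {t:.3f}")
```

The resulting t value is then compared with the critical value of the t distribution for `df` degrees of freedom at the chosen significance level.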

Table 2: Technique Efficacy by Individual Evaluation Technique Type

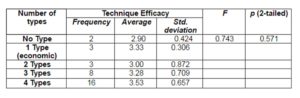

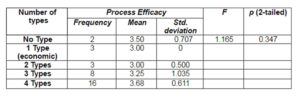

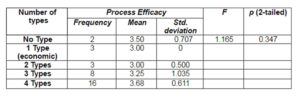

In the second step, an ANOVA was used to compare the average levels of technique efficiency according to the number of types of evaluation techniques considered valid by the respondent. Results are presented in Table 3.

Table 3: Technique Efficacy by Multiple Evaluation Technique Types

In the third step, we calculated the Pearson correlation coefficient for the number of evaluation techniques of any type used and considered valid by the respondents. The coefficient shows a non-significant relation between the two variables (r = 0.320, n = 32, p = 0.074, two-tailed). The coefficient of determination (r²) equals 0.10, which shows that only 10% of the variability in the efficiency of the adopted evaluation techniques could be accounted for by the use of multiple evaluation techniques to assess investment opportunities in IT.

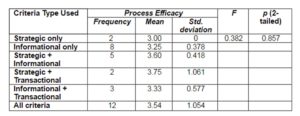

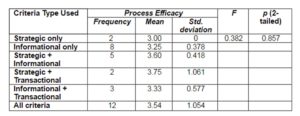

Hypothesis H1b was tested, like H1a, in three steps. The results of the t-tests are presented in Table 4. They indicate that organizations using strategic, analytical, or integrated evaluation techniques obtained a higher level of technique efficiency than those which did not. However, these differences were not statistically significant.

Table 4: Process Efficacy by Individual Evaluation Technique Type

The results of the ANOVA (Table 5) show that the additional use of strategic, analytical and integrated techniques alongside economic evaluation generally increases the efficiency level of the process. Nevertheless, on the whole, this difference was not statistically significant.

Table 5: Process Efficacy by Multiple Evaluation Technique Types

The results of the bivariate correlation show a significant relation between the two variables (r = 0.427, n = 33, p = 0.013, two-tailed). The coefficient of determination (r²) shows that 18% of the variability in the process’s efficiency could be accounted for by the use of multiple evaluation techniques to evaluate investment opportunities in IT. The conclusion for H1b is that the use of several types of evaluation techniques did not produce a significant variation in the efficiency level of the decision making process. By contrast, the use of multiple evaluation techniques, whatever their type, leads to a significant change in the process’s efficiency. Therefore, H1b is not confirmed for the use of several types of evaluation techniques, but is confirmed for the use of several evaluation methods.

The two preceding hypotheses tested the relations between the use of multiple evaluation techniques and the two efficiency variables. Hypothesis H1c explores the association between the two dependent variables of H1a and H1b, i.e., the efficiency of the adopted evaluation techniques and the efficiency of the decision making process. The results of a bivariate correlation show a strong and significant relation between the two variables (r = 0.542, n = 32, p = 0.001, two-tailed). The calculated r² suggests that 29% of the variance in the efficiency of the decision making process relative to investments in IT could be explained by the efficiency of the adopted evaluation techniques. Hence, hypothesis H1c is upheld.
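The shares of variance quoted for H1a, H1b and H1c follow directly from the reported coefficients, and the significance of each coefficient depends on the statistic t = r × sqrt((n - 2) / (1 - r²)) with n - 2 degrees of freedom. The sketch below recomputes both from the r and n values given in the text; the function names are hypothetical, and the p-values themselves are not recomputed because that would need a t-distribution routine outside the standard library.

```python
import math


def r_squared(r):
    """Coefficient of determination of a simple correlation."""
    return r * r


def correlation_t_statistic(r, n):
    """t statistic for testing that a Pearson correlation is zero (df = n - 2)."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# (r, n) pairs as reported in the text.
reported = {"H1a": (0.320, 32), "H1b": (0.427, 33), "H1c": (0.542, 32)}

for name, (r, n) in reported.items():
    print(
        f"{name}: r^2 = {r_squared(r):.3f}, "
        f"t({n - 2}) = {correlation_t_statistic(r, n):.2f}"
    )
```

Rounded to two decimals, the r² values come out at 0.10, 0.18 and 0.29, matching the 10%, 18% and 29% reported above.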

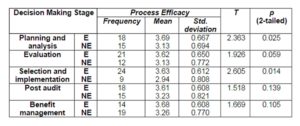

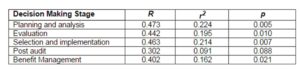

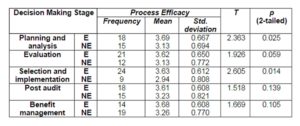

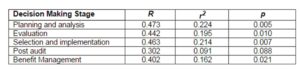

The association between the two variables in H1d was tested in two parts. First, two groups of organizations were defined according to the existence of a policy or formal procedure for each of the five stages of the decision making process: planning and analysis, evaluation of costs and benefits, selection and implementation, post-implementation audit, and benefit management.

It is then possible to compare the level of the dependent variable between the two groups. Second, five groups of respondents were defined as follows:

- Organizations having no formal procedures for the five steps,

- Organizations having formal procedures for the first three out of five steps,

- Organizations having formal procedures for the last three out of five steps,

- Organizations having formal procedures for the two important steps that are pre and post investment,

- Organizations having formal procedures for the five steps of decision making.

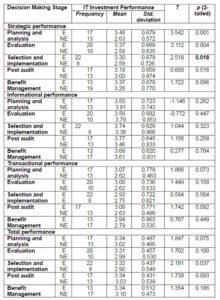

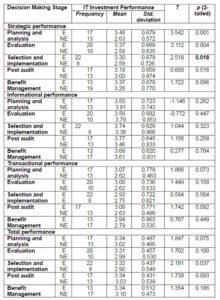

A series of t-tests was performed in the initial part of the test; findings are presented in Table 6. As indicated, the average process efficiency was always, as expected, higher in organizations where formal procedures had been implemented. In particular, the differences in the process’s efficiency are statistically significant between organizations with and organizations without formal procedures for the analysis, selection and implementation stages.

Table 6: Process Efficacy by the Existence of Individual IT Investment Stage

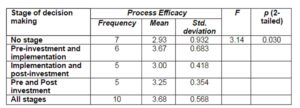

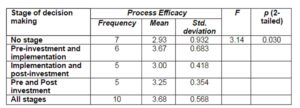

The calculated values of eta squared (η²) for the two stages were 0.166 and 0.195 respectively, which suggests a large difference in the process’s efficiency. An ANOVA was used in the second part of the test to compare the average levels of the process’s efficiency among the five groups of respondents. Results are presented in Table 7. They show that, as expected, organizations having formal procedures for all five stages of the decision making process scored the highest average process efficiency. The effect size shows that 31% of the variance in the process’s efficiency was accounted for by the existence of formal procedures for the decision making process. A post-hoc analysis using a Tukey HSD test revealed that the average score for group 1 differs significantly from that of group 5 (p = 0.036). No other groups differ significantly from each other.
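Eta squared can be obtained in two equivalent ways, depending on the test. For a t-test it is commonly computed as t² / (t² + df); for a one-way ANOVA it is the between-group sum of squares divided by the total sum of squares. The sketch below shows both under those standard definitions. The function names and the scores are hypothetical, since the study's raw data are not available in the text.

```python
from statistics import mean


def eta_squared_from_t(t, df):
    """Effect size for an independent-samples t-test."""
    return t * t / (t * t + df)


def one_way_anova(groups):
    """Return (F, eta squared) for a one-way ANOVA over lists of scores."""
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the scores around their own group mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_statistic = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_statistic, eta_squared


# Hypothetical process-efficiency scores for two groups of organizations.
without_procedures = [1, 2, 3]
with_procedures = [4, 5, 6]
f_statistic, eta_squared = one_way_anova([without_procedures, with_procedures])
print(f"F = {f_statistic:.2f}, eta squared = {eta_squared:.3f}")
```

With only two groups, F equals t², so both functions return the same effect size; with five groups, as in the second part of the test, only the ANOVA form applies.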

Table 7: Process Efficacy by Process Formality

The conclusion for hypothesis H1d is that there were significant differences in average process efficiency between organizations having formal procedures and organizations not having any, for the planning and analysis, and selection and implementation stages. In addition, the average process efficiency scores were significantly different between organizations that did not have any formal procedures and those that had them for all five stages of the decision making process. Consequently, hypothesis H1d is upheld.

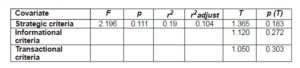

The test of hypothesis H1e comprised two parts. An ANOVA was first used to compare the average levels of the process’s efficiency of the seven groups of respondents. However, as no group had used transactional decision criteria alone, group 3 was omitted from the analysis.

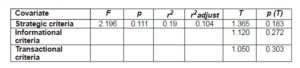

The results presented in Table 8 reveal no significant difference in the process’s efficiency between the groups defined by criteria preference.

Table 8: Process Efficacy by Evaluation Criteria Preference

In the second part of the test, a standard regression model was used to determine whether the use of the three types of decision criteria is an important predictor of process efficiency. Table 9 displays the results. They show that the regression model fits poorly (R² = 19%, adjusted R² = 10.4%) and that the overall relation is not significant (F(3, 28) = 2.196, p = 0.111). Consequently, the conclusion is that the use of several types of decision criteria for investment in IT did not lead to a significant variation in the efficiency of the whole decision making process. Hence, hypothesis H1e is not confirmed.
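The adjusted R² and the overall F statistic of a regression can both be derived from R², the number of predictors k and the sample size n. The sketch below applies the standard formulas to the reported figures, assuming k = 3 predictors and n = 32 respondents (n is inferred here from the 28 residual degrees of freedom, not stated in the text). The function names are hypothetical.

```python
def adjusted_r_squared(r2, n, k):
    """Adjusted R^2 for a regression with k predictors and n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def overall_f_statistic(r2, n, k):
    """F statistic for the overall significance of the regression."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


r2, n, k = 0.19, 32, 3  # R^2 as reported (rounded); n inferred from F(3, 28)
print(f"adjusted R^2 = {adjusted_r_squared(r2, n, k):.3f}")
print(f"F({k}, {n - k - 1}) = {overall_f_statistic(r2, n, k):.2f}")
```

With the rounded R² of 0.19, the results are about 0.103 and 2.19, close to the reported 10.4% and 2.196; the small gap is consistent with R² having been rounded before publication.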

Table 9: Standard Multiple Regression of Evaluation Criteria on Process Efficacy

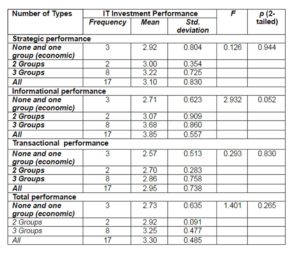

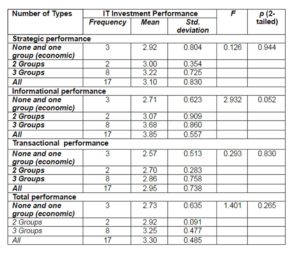

Table 10 suggests that performance in the three performance dimensions and in the global performance was consistently higher when other, non-economic evaluation techniques were used. However, this variation is not statistically significant.

Table 10: IT Investment Performance by Multiple Evaluation Technique Types

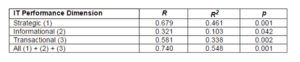

However, the variation is significant in the informational performance dimension, at the 0.052 level. All the other groups do not differ significantly from each other. Results presented in Table 11 indicate that positive and significant relations exist between the variables of the test. Moreover, the value of r² suggests that the effect of the independent variable on the dependent variables was large.

Table 11: Correlation of Number of the Individual Evaluation Techniques Used and IT Investment Performance

According to the results presented in Tables 10 and 11, we can conclude that the use of several types of evaluation techniques did not result in a significant variation in the performance of IT. By contrast, the use of multiple evaluation methods, whatever their type, led to important differences in the performance levels of IT in the three dimensions, as well as in the global performance of IT. Hence, hypothesis H2a is not confirmed for the use of several types of evaluation techniques, but it is confirmed for the use of several evaluation methods, whatever the type of technique.

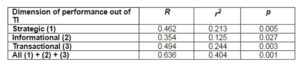

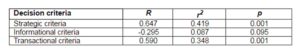

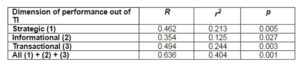

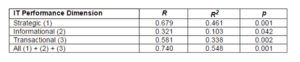

For hypothesis H2b, Table 12 presents the results of a bivariate correlation between the independent variable and the three performance dimensions of IT, as well as the global performance of IT. The results show significant correlations with strategic performance, transactional performance and global performance.

Table 12: Correlation of Technique Efficacy and IT Investment Performance

The calculated values of r² indicate that the efficiency of the evaluation techniques adopted accounted for 35% of the strategic performance variance, for 26.1% of the transactional performance variance, and for 30.3% of the global performance variance of the implemented investments in IT. It is concluded that hypothesis H2b is confirmed for the strategic, the transactional and the global performance of investments in IT.

For hypothesis H2c, the results of the t-tests are presented in Table 13, which shows that the only significant variation in the performance of IT occurs in the strategic dimension, between the organizations which have formal procedures for planning, analysis, evaluation, selection and implementation, and those which do not have any (η² = 0.309, 0.257 and 0.185 respectively). It seems that meticulous planning of pre-investment and evaluation activities has allowed a better realization of strategic benefits from IT. These activities have not resulted in a major improvement of the informational or transactional performance of IT. In terms of global performance of investments in IT, the existence of a formal procedure for IT selection and implementation was found to be the only significant determinant (η² = 0.146). It seems that the effect of a formal decision making process in IT investment has been limited in scope.

Table 13: Performance of the IT Investments and Formal Existence of Procedures

Results of the ANOVA analysis show no significant variation in the performance of ITs, in each of the three dimensions, nor in the global performance of IT among the five groups of respondents.

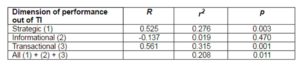

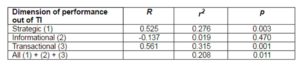

Given these results, it is concluded that there is a significant variation in the performance of investments in IT in the strategic dimension between organizations having a formal procedure for the planning, analysis, evaluation, selection and implementation stages and those without one. However, there is no significant relation between the existence of a formal decision making procedure and the global performance of investments in IT.

The results for hypothesis H2d are presented in Table 14, which suggests that the efficiency of the process is strongly linked to the performance of IT in the three dimensions, as well as to the global performance of investments in IT. Yet, the value of r² for informational performance is weaker than that for the other dimensions of performance. Given these results, hypothesis H2d is confirmed.

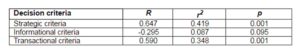

Table 14: Correlation of Process Efficacy and IT Investment Performance

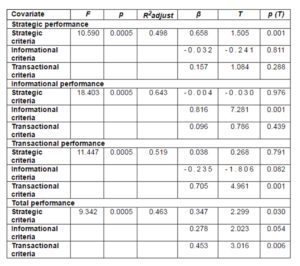

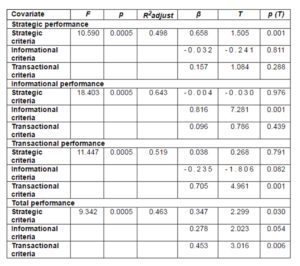

Table 15 displays the results for hypothesis H2e. The correlation coefficients show a strong and positive relation between the use of each of the three types of decision criteria and the performance of IT in the corresponding dimension. In addition, the use of strategic and transactional criteria has considerably improved the global performance of investments in IT, while the use of informational criteria has a marginally significant effect, at the 0.054 level. In conclusion, there is a strong and significant relation between the use of each type of evaluation criteria and the corresponding performance dimension. Hypothesis H2e is confirmed.

Table 15: Standard multiple regression of evaluation criteria on IT investment performance

As shown in Table 16, the only significant correlations were found between the familiarity of the decision maker and, on the one hand, the use of economic techniques and, on the other, the use of several evaluation methods, whatever the type of evaluation technique. The value of r² suggests that 22.1% of the variance in the use of multiple evaluation methods, of whatever type, was accounted for by the familiarity of the decision maker. Hence, H3a is not confirmed for the use of several types of evaluation techniques, but is confirmed for the use of several evaluation methods regardless of type.

Table 16: Correlation of Decision Makers’ Familiarity and Evaluation Techniques

For hypothesis H3b, the bivariate correlation analysis shows that there was no significant relation between the two variables (r = 0.165, n = 32, p = 0.366, two-tailed). The value of r² suggests that the knowledge of the decision maker accounted for 2.7% of the variance in the efficiency of the adopted evaluation techniques. Thus, hypothesis H3b is not confirmed.

We can conclude from Table 17 that hypothesis H3c is confirmed for four of the five stages of the decision making process, the exception being the post-implementation audit stage. This implies that the higher the degree of familiarity, the more decision makers follow a formal procedure in their investment decisions in IT. The procedure concerns pre-investment planning and the management of IT post-implementation activities.

Table 17: Correlation of Decision Makers’ Familiarity and the Existence of Formal Evaluation Procedure

The relation between the decision maker’s familiarity and the process’s efficiency is investigated in hypothesis H3d. The bivariate correlation analysis reveals that there was no significant relation between the two variables (r = 0.296, n = 33, p = 0.095, two-tailed). The r² value of 8.8% suggests that the effect of the decision maker’s familiarity on the dependent variable was small. Hence, hypothesis H3d is not confirmed.

The results in Table 18 suggest that there is a positive and significant relation between the independent variable and the use of strategic and transactional decision criteria. Hypothesis H3e is therefore confirmed for the use of these two types of evaluation criteria.

Table 18: Correlation of Decision Makers’ Familiarity and Use of Evaluation Criteria

It seems that a rise in the decision maker’s familiarity reduces the dependence on informational criteria. Rather than focusing on investments in IT aimed only at the management of information, decision makers with greater familiarity know the theories and methods for evaluating strategic investments offering long-term value, and transactional investments offering quantifiable organizational benefits.

According to Table 19, we can conclude that the decision maker’s familiarity was significantly correlated with the performance of investments in IT in the strategic and transactional dimensions, as well as with the global performance of these investments. Consequently, hypothesis H3f is confirmed for these two dimensions of performance, and for the global performance of investments in IT.

Table 19: Correlation of Decision Maker’s Familiarity and IT Investment Performance

Discussion

Investments in ITs and Efficiency of the Decision Making Process

In this section, several variables and their relation with the process’s efficiency have been examined. These are: 1) the evaluation techniques used; 2) the efficiency of the adopted evaluation techniques; 3) the existence of formal procedures for the different stages of the decision making process; 4) the decision criteria used for investments in IT. The following paragraphs discuss each of these variables in turn.

The collective results of the test of hypotheses H1a and H1b suggest that the adoption of the four types of evaluation techniques has improved the efficiency of the methods used and the global decision making process. However, this effect is not statistically significant. The variation in one efficiency variable or the other is not significant among organizations which considered that only the use of economic evaluation techniques is useful, as compared with those which considered a combination of different types of techniques to be a better alternative. In other words, the adoption of several types of evaluation techniques has little effect on the identification of important costs and benefits, or on the satisfaction level with the global decision making process related to investments in IT.

A possible explanation is that, although the use of non-economic measures is generally accepted, their value is considered secondary to that of economic techniques. Thus, the other techniques do not significantly improve the decision making process related to investments in IT. The fact that four of the five most widely used evaluation methods are economic methods also supports this view. The analysis results also show that there is a strong and significant association between the process’s formality and its efficiency. The difference in the process’s efficiency is particularly marked between firms having formal procedures for the high stages of the decision making process related to investments in IT and those not having any. It is also shown that the process’s efficiency is somewhat higher for organizations having formal rules for the analysis, planning, selection and implementation stages than for others. These results are expected, given that planning and implementation of IT should improve the decision’s results and the quality of the systems that are implemented. By contrast, no significant variation in the process’s efficiency was found either for the cost and benefit evaluation phase or for the post-implementation phase. A probable explanation is that if the systems are mainly implemented for commercial needs, then the pre-investment and post-implementation evaluations may not be considered a requirement for a successful IT adoption.

The selection of decision criteria and its relation with the process’s efficiency has also been examined. Analysis shows that the use of several types of decision criteria has no significant effect on the level of satisfaction with the decision results or with the adopted decision making process. Although these results seem surprising, they are logical if investments, as well as their criteria, are meant to meet specific ends. In that case, additional criteria add little value to the decision making process and do not lead to better decisions.

Investments in IT and Performance of Investments in IT

Five variables and their association with the performance of investments in IT have been examined in this section, namely:

- The evaluation techniques used;

- The efficiency of the evaluation techniques adopted;

- The existence of formal procedures for the stages of the decision making process;

- The decision criteria used for investments in IT;

- The efficiency of the global decision making process.

The results of the test of hypothesis H2a suggest that organizations which use several types of evaluation techniques have not reached a significantly higher IT performance. By contrast, the use of several individual evaluation methods, of whatever type, resulted in important differences in the three dimensions of performance as well as in the global performance of IT. It has been suggested that if an organization is able to adopt evaluation techniques appropriate to a particular evaluation context, then the benefits expected from the adoption of IT will be better realized. However, given the earlier observation that there was a strong dependence on economic techniques, such a conclusion is indicative of the value of the traditional financial measures as well as of a lack of applicability of alternative evaluation methods.

As for the efficiency of the evaluation techniques adopted and the performance of investments in IT, there exist strong and significant correlations with the strategic and transactional performance of IT. These conclusions are logical, as a better identification of costs and benefits of IT should lead to more satisfying performances of IT. Moreover, a possible reason for the absence of association between the efficiency of techniques and the informational dimension of the IT performance could be the fact that the adoption of the system is often oriented toward the acquisition of informational benefits. A satisfying performance is expected in this dimension, regardless of the efficiency of the adopted evaluation methods.

The performance of IT does not depend significantly on the existence of a formal procedure for the high stages of the decision making process related to investments in ITs. The absence of association is accounted for by two probable reasons:

(1) The formal procedure of the decision making process considered by the organizations is not actually formally established; and

(2) the formal procedure of the decision making process has not been followed.

Concerning the process’s efficiency and the performance of investments in IT, analyses indicate a significant relation between the two variables for the three dimensions of performance of IT, as well as for the global performance of IT. It is reasonable that a more efficient process should improve the performance of the implemented system. The decision criteria used and their scope have influenced the performance results of investments in IT. It has been determined that all three types of decision criteria were strongly and significantly correlated with the performance of IT in their respective dimension.

In addition, the global performance of IT changed significantly with the use of strategic and transactional decision criteria to evaluate opportunities for investment in IT. It seems that if organizations took measures to determine the strategic alignment between investment opportunities and organizational objectives, the perceived performance of the implemented systems would be higher than that of organizations which did not perform this alignment.

Moreover, Student’s t-tests revealed a substantial difference between what was expected and what was realized in the performance of IT for the transactional dimension. It seems that the direct financial benefits expected from the adoption of technologies are not always realizable. One reason for this could be that, although it is easy to suggest benefits to be realized after implementation, it is more difficult to quantify these realizations with full confidence.

The Decision Maker’s Familiarity and Investment Decisions in IT

The effects of the decision maker’s familiarity with the contemporary literature on decision making and evaluation on the different aspects of the investment process in IT have been investigated in this section. More precisely, these aspects are the following:

- The choice of evaluation techniques;

- The efficiency of the evaluation techniques adopted;

- The existence of formal procedures for the stages of the decision making process;

- The decision criteria used for investment in IT;

- The efficiency of the global decision making process; and

- The performance of the implemented IT.

Concerning the choice of the evaluation techniques, findings suggest that the decision maker’s familiarity is positively and significantly associated only with the perceived value of economic evaluation techniques. These findings seem to run counter to the opinion of several researchers who think that traditional budgeting techniques are not satisfactory and must not be used alone in guiding investments in IT (Post et al., 1995; Ezingeard et al., 1998).

It has also been determined that there is no significant relation between the decision maker’s familiarity with the recent literature and the perceived efficiency of the adopted evaluation techniques. The fact that the decision maker’s knowledge is significantly correlated only with economic evaluation methods may account for the absence of association between the two variables.

Analyses show that there is a positive and significant correlation between the decision maker’s familiarity and the existence of a formal procedure for four stages of the decision making process, while the existence of the post-implementation evaluation phase is only significant at the level of 0.088. On the basis of these observations, it is reasonable to think that decision makers who have a high degree of familiarity with recent developments in the theories of decision making and evaluation of IT are more likely to follow an official investment process, in terms of pre-investment as well as post-implementation planning.

As for the decision maker’s familiarity and the process’s efficiency, results show no significant association between the two variables. It seems that greater familiarity on the part of decision makers has little impact on satisfaction with either the adopted decision process or the results of the investment decision in IT. Consequently, it is suggested here that an increase in the decision maker’s familiarity cannot, on its own, sufficiently improve the existing practices in investments in IT. Other factors, such as a change in the internal policy of the organization and the technological environment, may also be necessary.

The test of the association between the decision maker’s familiarity and the use of decision criteria to adopt IT reveals a strong and significant relation between the two variables for the use of strategic and transactional decision criteria. By contrast, the correlation with the use of informational criteria proved negative. Similar results are reached for the decision maker’s familiarity and the performance of investments in IT. In other words, the decision maker’s familiarity correlates significantly with the strategic and transactional performance of IT, whereas there is a negative correlation with the informational performance of IT. Results seem to suggest that a better understanding of decision making theories and evaluation techniques of IT could lead decision makers to give more consideration to other types of technologies and systems, rather than investing in those that are only likely to improve information.

Conclusion

This paper presents a review of the current practices in investments in IT and the decision making process. IT plays a crucial role in organizations. It is implemented or used to support the strategic operations of organizations, and is often relied on to allow the firm to innovate. The adoption of a judicious decision making process for investment in IT not only tempers over-optimistic expectations, but also prevents excessive pessimism and the risk of not developing critical systems. Findings show that, among the factors affecting the process’s efficiency, the use of multiple evaluation methods (of whatever type), the efficiency of the evaluation techniques, and the existence of a formal procedure have proved to be important variables. Nevertheless, the evaluation of IT has often been built on financial measures, and the value of the use of non-economic evaluation techniques is limited in scope. Indeed, no significant relation is found between the value of the adoption of several types of evaluation techniques and the efficiency variables of the techniques or the process. Moreover, while the existence of formal procedures of planning and implementation is significantly linked to the process’s efficiency, no relation was identified for post-investment activities. It seems that success has often been measured through a successful implementation rather than through the effective realization of benefits.

As for the performance of IT investments, analyses have shown that the use of multiple evaluation methods (of whatever type), the efficiency of the decision making process, and the decision criteria used are important predictive factors. Yet the introduction of several types of evaluation techniques and the existence of formal stages have had no significant impact on the global performance of investments in IT. The absence of a formal IT investment methodology, and the lack of uniformity in applying such a methodology, could be key factors contributing to this weak relation.

The decision maker’s degree of familiarity with the IT decision making process, its content, and its results has also been studied. The decision maker’s familiarity was not significantly correlated with the perceived value of the use of non-economic evaluation methods, nor with any of the efficiency variables of the techniques or the process. This continued dependence on financial methods raises new questions about the applicability and feasibility of the decision making theories and evaluation techniques now available in the literature.

Yet research has shown that managers paid little attention to how they made decisions on investments in IT. Therefore, a major proposition of this paper is that the quality of a decision does not depend solely on the evaluation techniques used, but equally on the quality of the whole decision making process adopted. It is thus useful to examine the process organizations adopt in selecting IT investment opportunities, rather than to focus only on decision criteria and evaluation techniques.

References

Angelou, G. N. & Economides, A. A. (2009). “A Multi-Criteria Game Theory and Real-Options Model for Irreversible ICT Investment Decisions,” Telecommunications Policy (33), Pp. 686-705.

Alter, S. (2004). “Possibilities for Cross-fertilization Between Interpretive Approaches and Other Methods for Analyzing Information Systems,” European Journal of Information Systems (13), Pp. 173-185.

Bacon, C. (1992). “The Use of Decision Criteria in Selecting Information Systems/Technology Investments,” MIS Quarterly (16:3), Pp. 335-353.

Ballantine, J., Levy, M., Martin, A., Munro, I. & Powell, P. (2000). “An Ethical Perspective on Information Systems Evaluation,” International Journal of Agile Management Systems (2:3), Pp. 233-241.

Ballantine, J. & Stray, S. (1998). “Financial Appraisal and the IS/IT Investment Decision Making Process,” The Journal of Information Technology (13:1), Pp. 3-14.

Bannister, F. & Remenyi, D. (2000). “Acts of Faith: Instinct, Value and IT Investment Decisions,” Journal of Information Technology (15), Pp. 231-241.

Barki, H., Rivard, S., & Talbot, J. (2001). “An Integrative Contingency Model of Project Risk Management,” Journal of Management Information Systems (17:4), Pp. 37-69.

Benaroch, M. & Kauffman, R. J. (1999). “A Case for Using Real Options Pricing Analysis to Evaluate Information Technology Project Investments,” Information Systems Research (10:1), Pp. 70-86.

Bernroider, E. W. N. & Stix, V. (2006). “Profile Distance Method: a Multi-Attribute Decision Making Approach for Information System Investments,” Decision Support Systems, Pp. 988-998.

Bhatt, G. D. (2000). “An Empirical Examination of the Effects of Information Systems Integration on Business Process Improvement,” International Journal of Operations & Production Management (20:11), Pp. 1331-1359.

Boonstra, A. (2003). “Structure and Analysis of IS Decision-Making Processes,” European Journal of Information Systems (12), Pp. 195-209.

Bruque-Cámara, S., Vargas-Sánchez, A. & Hernandez-Ortiz, M. J. (2004). “Organizational Determinants of IT Adoption in the Pharmaceutical Distribution Sector,” European Journal of Information Systems (13), Pp. 133-146.

Campbell, J. (2002). “Real Option Analysis of The Timing of IS Investment Decisions,” Information & Management (39), Pp. 337-344.

Chan, Y. E. (2000). “IT Value: The Great Divide Between Qualitative and Quantitative and Individual and Organizational Measures,” Journal of Management Information Systems (16:4), Pp. 225-26.

Cronholm, S. & Goldkuhl, G. (2003). “Strategies For Information Systems Evaluation: Six Generic Types,” Electronic Journal of Information Systems Evaluation (6:2), Pp. 65-74.

Davern, M. J. & Kauffman, R. J. (2000). “Discovering Potential and Realizing Value From Information Technology Investments,” Journal of Management Information Systems (16:4), Pp. 121-143.

DeLone, W. H. & McLean, E. R. (2002). “Information Systems Success Revisited,” Proceedings of the 35th Hawaii International Conference on System Sciences.

Devaraj, S. & Kohli, R. (2000). “Information Technology Payoff in The Health-Care Industry: A Longitudinal Study,” Journal of Management Information Systems (16:4), Pp. 41-67.

Doherty, N. F. & King, M. (2005). “From Technical to Socio-Technical Change: Tackling The Human and Organizational Aspects of Systems Development Projects,” European Journal of Information Systems (14), Pp. 1-5.

Eisenhardt, K. M. & Zbaracki, M. J. (1992). “Strategic Decision Making,” Strategic Management Journal (13), Pp. 17-37.

Farbey, B., Land, F. & Targett, D. (1999). “Moving IS Evaluation Forward: Learning Themes and Research Issues,” Journal of Strategic Information Systems (8), Pp. 189-207.

Farbey, B., Land, F. & Targett, D. (1999). “The Moving Staircase: Problems of Appraisal and Evaluation in a Turbulent Environment,” Information Technology & People (12:3), Pp. 238-252.

Farragher, E. J., Kleiman, R. T. & Sahu, A. P. (2001). “The Association Between the Use of Sophisticated Capital Budgeting Practices and Corporate Performance,” The Engineering Economist (46:4), Pp. 300-311.

Gimenez, G. (2006). “Investment in New Technology: Modeling the Decision Process,” Technovation (26), Pp. 345-350.

Goodhue, D. L., Klein, B. D. & March, S. T. (2000). “User Evaluations of IS as Surrogates for Objective Performance,” Information and Management (38:2), Pp. 87-101.

Hallikainen, P. & Nurmimaki, K. (2000). “Post-Implementation Evaluation of Information Systems: Initial Findings and Suggestions for Future Research,” Proceedings of IRIS 23, University of Trollhättan Uddevalla.

Herath, H. S. B. & Park, C. S. (2001). “Real Options Valuation and its Relationship to Bayesian Decision Making Methods,” Engineering Economist (46:1), Pp. 1-32.

Herath, H. S. B. & Park, C. S. (2002). “Multi-Stage Capital Investment Opportunities as Compound Real Options,” Engineering Economist (47:1), Pp. 1-27.

Hitt, L. M. & Brynjolfsson, E. (1996). “Productivity, Business Profitability, and Consumer Surplus: Three Different Measures of Information Technology Value,” MIS Quarterly (20:2), Pp. 121-141.

Irani, Z., Ezingeard, J.-N. & Grieve, R. J. (1998). “Costing the True Costs of IT/IS Investments in Manufacturing: a Focus During Management Decision Making,” Logistics Information Management (11:1), Pp. 38-43.

Irani, Z. & Love, P. E. D. (2000/2001). “The Propagation of Technology Management Taxonomies for Evaluating Investments in Information Systems,” Journal of Management Information Systems (17:3), Pp. 161-177.

Irani, Z. & Love, P. E. D. (2002). “Developing a Frame of Reference for Ex-Ante IT/IS Investment Evaluation,” European Journal of Information Systems (11:1), Pp. 74-82.

Irani, Z., Sharif, A. M. & Love, P. E. D. (2005). “Linking Knowledge Transformation to Information Systems Evaluation,” European Journal of Information Systems (14), Pp. 213-228.

Kearns, G. S. & Lederer, A. L. (2003). “The Impact of Industry Contextual Factors on IT Focus and the Use of IT for Competitive Advantage,” Information & Management (41), Pp. 899-919.

Klecun, E. & Cornford, T. (2005). “A Critical Approach to Evaluation,” European Journal of Information Systems (14), Pp. 229-243.

Lin, C. & Pervan, G. (2003). “The Practice of IS/IT Benefits Management in Large Australian Organizations,” Information and Management (41:1), Pp. 13-24.

Mahmood, M. A. & Mann, G. J. (2000). “Special Issue: Impacts of Information Technology Investment on Organizational Performance,” Journal of Management Information Systems (16:4), Pp. 3-10.

Maritan, C. A. (2001). “Capital Investment as Investing in Organizational Capabilities: an Empirically Grounded Process Model,” Academy of Management Journal (44:3), Pp. 513-531.

Mukherji, N., Rajagopalan, B. & Tanniru, M. (2006). “A Decision Support Model for Optimal Timing of Investments in Information Technology Upgrades,” Decision Support Systems (42), Pp. 1684-1696.

Proctor, M. D. & Canada, J. R. (1992). “Past and Present Methods of Manufacturing Investment Evaluation: a Review of the Empirical and Theoretical Literature,” The Engineering Economist (38:1), Pp. 45-58.

Remenyi, D. & Sherwood-Smith, M. (1999). “Maximise Information Systems Value By Continuous Participative Evaluation,” Logistics Information Management (12:1/2), Pp. 14-31.

Ryan, S. D. & Harrison, D. A. (2000). “Considering Social Subsystem Costs and Benefits in Information Technology Investment Decisions,” Journal of Management Information Systems (16:4), Pp. 11-40.

Sircar, S., Turnbow, J. L. & Bordoloi, B. (2000). “A Framework for Assessing the Relationship Between Information Technology Investments and Firm Performance,” Journal of Management Information Systems (16:4), Pp. 69-97.
