Introduction

What does the study of training effectiveness look like in most organizations? In about 80% of enterprises, the process usually ends with a post-training reaction survey, in which the HR department or a training company asks participants to assess the training content, the trainer's substantive preparation, the organization of the training, etc. Participants' opinions are often the basic material used to assess the effectiveness of training. However, employees' satisfaction with the course of the training is by no means a sufficient reason to justify training expenditure or to understand its benefit to the company from the employer's perspective. Training expenditure should help increase work efficiency, improve work quality, raise the level of achievement of business goals or build the company's competitive advantage. It is therefore undeniable that assessing training effectiveness is a necessary and even critical component of an integrated human resources management system, as it makes it possible to determine what benefits the organization has gained and whether a given training investment was justified at all. This raises a further question: why do so many companies assess training effectiveness only fragmentarily, stopping at employee satisfaction, or not examine the profitability of training investments at all? Measuring training effectiveness is undoubtedly the most important parameter of the training management process, but it is also the most difficult to quantify. Most of the models available on the market present very universal solutions, but they do not clearly describe how to operationalize the proposed concepts and usually have a very extensive structure.
In addition, taking into account organizational restrictions (time, competence, human, financial, etc.), the systematic generation of useful information about training turns out to be a great challenge.

All managers would like the money invested in employee training to bring immediate and real benefits. However, it is not always possible to determine the results of training projects quickly (Arasanmi, 2019). Unfortunately, a common observation in organizations is that training does not produce the desired effects, largely because those effects are rarely evaluated (Janowska, 2010). A critical aspect of assessing training effectiveness is the high complexity of the process. The following arguments are most often raised to explain why training is so difficult to assess:

- Management's goal is to increase work efficiency, not necessarily to increase employees' knowledge. Nevertheless, trainers evaluate training mainly on the basis of learning effectiveness.

- Most activities aimed at measuring training effectiveness focus on processes related to learning rather than on results (Cassidy et al., 2005; Leimbach, 2010).

- Poor work results are usually only partly attributable to training needs.

- Training effects are often wasted because the skills and knowledge acquired during the training are not used at work (Loomba and Karsten, 2019).

- Only 10% of the knowledge acquired during training is used by employees, which means that the transfer of knowledge to the work environment is ineffective and uncontrolled (Latif, 2012).

- Direct business effects are difficult to analyze, and translating them into economic terms is complicated (whereas employee knowledge and skills can be determined quite accurately, for example, using the development center method). Moreover, there are no simple tools for concluding that, after a given training, the company's revenues increased by, e.g., 10% (PARP, 2009).

- Obsolete methods are used to measure and evaluate training (Stolovitch, 2007; Bates, 2004; Griffin, 2012). Moreover, organizations often lack the tools and expertise to assess the impact of training on organizational effectiveness.

- Literature on measuring efficiency is “extensive, non-empirical, not theoretical, poorly written and boring. Moreover, it is characterized by constant discussion and very few empirical examples” (Skylar et al., 2010).

- It is a complicated, difficult process that often requires a long-term perspective, for which many managers lack patience (Griffin, 2012).

People involved in training often say that training evaluation is difficult, time-consuming, complicated and, consequently, expensive. R. Griffin (2012) describes training evaluation as "one of the most difficult tasks of training specialists". However, despite these constraints, companies are forced to analyze the effects of training for management purposes.

Literature Review

Many models are available on the market. The starting point for most of them is the D. Kirkpatrick model, which assesses training at four levels: reaction, learning, application (behavior) and business results. The J. J. Phillips ROI model extends the D. Kirkpatrick model with a fifth level of assessment: estimating the return on investment (ROI) in training, i.e. measuring profitability. In general, the overwhelming majority of models build on Kirkpatrick's solutions. An overview of the methods available for measuring training effectiveness is provided below:

– The four-level D. Kirkpatrick model – the most popular method of assessing training effectiveness, developed in the late 1950s, includes four levels of assessment:

(1) level of reaction – at this stage, participants are asked whether they were satisfied with the training, the training methods, the trainer, the venue and accommodation, etc.,

(2) level of learning – at this stage, an appropriate knowledge test is usually used to determine whether the training has resulted in retention of training content,

(3) level of behavior – at this stage, the degree to which the effects of training are transferred to the work environment is determined,

(4) level of effects – at this stage, changes in business results due to training should be demonstrated and the benefits of training estimated, e.g. in terms of absenteeism, staff turnover, efficiency gains and cost reduction (Kirkpatrick, 2001).

– The five-level ROI model of J. J. Phillips – J. Phillips's ROI methodology is based on a five-level model: Phillips supplemented the D. Kirkpatrick model with a fifth level, return on investment (ROI), which asks whether the monetary value of the results exceeds the cost of the training program. To calculate ROI, the results from the fourth level have to be converted into monetary values and compared with the costs of the program, taking into account all components of costs and benefits. In this sense, the model combines the Kirkpatrick model with benefit-cost analysis (BCA). The J. J. Phillips model uses two basic indicators: ROI (return on investment) and BCR (benefit-cost ratio). These indicators show how much the company gains in exchange for the expenditure it incurred on a given training project or on other projects in personnel management. The ROI method has both advantages and disadvantages. The single numerical value it produces combines all the basic "components" of profitability and can be used to compare training with other investment opportunities. However, ROI has several major drawbacks. The most difficult element is isolating the impact of training from the effects of other factors on the company's results. Critics also point to the model's complexity and to the fact that it is mainly used by large companies (Phillips et al., 2009; Steensma and Groeneveld, 2010).
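As a rough illustration, the two Phillips indicators are commonly written as BCR = program benefits / program costs and ROI (%) = (program benefits - program costs) / program costs × 100. The Python sketch below assumes the level-four results have already been converted into monetary values; the function names and the figures are hypothetical.

```python
def benefit_cost_ratio(benefits: float, costs: float) -> float:
    """BCR = program benefits / program costs."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return benefits / costs


def roi_percent(benefits: float, costs: float) -> float:
    """ROI (%) = (program benefits - program costs) / program costs * 100."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100


# Hypothetical figures: monetized level-four benefits of 150,000
# against fully loaded program costs of 100,000.
print(benefit_cost_ratio(150_000, 100_000))  # 1.5
print(roi_percent(150_000, 100_000))         # 50.0
```

A BCR of 1.5 corresponds to an ROI of 50%: each unit of currency spent returns 1.5 units, of which 0.5 is net gain.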

– Leslie Rae's model – the author systematically presents the successive stages of training, from identifying the company's needs, defining training objectives and designing an appropriate program, to preparing individual training sessions and estimating the effects of training. The author presents many techniques and methods, along with procedures for conducting training courses and sessions (Rae, 2006).

– Marshall and Schriver's knowledge and skills assessment model – a five-level model in which knowledge and skills are assessed separately. At level one, the opinions and feelings of training participants are examined. At level two, written tests are used to check trainees' knowledge. At level three, the employee performs standard tasks to verify the level of knowledge and skills acquired. Level four concerns the transfer of skills, and level five the impact of training on the firm's economic results and the return on training investment (Phillips et al., 2009).

– Six Sigma – a quality management method developed at Motorola in the mid-1980s by B. Galvin and B. Smith. In statistics, sigma denotes the standard deviation of a variable. Six Sigma refers to a distance of six standard deviations between a measure of central tendency (e.g. the arithmetic mean of a normally distributed process) and the nearest specification limit. The method was first used in a production plant and is now increasingly being extended to areas such as sales and HR. Six Sigma is not only a technique but also a philosophy (Pandey, 2007; DeFeo, 2000): an organizational way of thinking in which people make decisions based on data, look for the root causes of problems, try to control changes, and track leading indicators of problems so that preventive measures can be taken (Fleming, 2005).
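The sigma level mentioned above can be sketched as the distance from the process mean to the nearest specification limit, measured in standard deviations. The Python below is a simplified illustration with hypothetical values; the function name is hypothetical, and it deliberately omits the long-term mean-shift adjustment that some Six Sigma practitioners add.

```python
def sigma_level(mean: float, std_dev: float, lsl: float, usl: float) -> float:
    """Distance from the process mean to the nearest specification limit,
    expressed in standard deviations (no long-term shift applied).

    lsl / usl: lower and upper specification limits.
    """
    if std_dev <= 0:
        raise ValueError("std_dev must be positive")
    return min(usl - mean, mean - lsl) / std_dev


# Hypothetical process: mean 50, standard deviation 2, limits 38 and 62.
print(sigma_level(50, 2, 38, 62))  # 6.0
```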

The literature also offers other solutions that recommend several further contexts/levels of training assessment in addition to testing participant satisfaction. At the same time, many studies confirm that assessments at the higher levels are rarely implemented in practice. This raises the question: what factors explain the limited use of the available training effectiveness models? The rest of this paper presents the imperfections in the training management process (methodological and operational) that are most frequently observed in training practice.

Methodological Dysfunctions (the Example of the Kirkpatrick and Phillips ROI Models)

- The Kirkpatrick model dominates despite doubts about its actual validity; for example, the degree to which results at one level correlate with results at later levels is questionable (Berge, 2008). Studies by G. Alliger and E. Janak (1989) show a very low correlation between reaction and learning (r = 0.07) and between reaction and behavior (r = 0.05). Somewhat higher correlations were found between learning and behavior (r = 0.13), learning and results (r = 0.40), and behavior and results (r = 0.19).
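One way to read these coefficients is through r², the share of variance two measures have in common. The sketch below, assuming the figures quoted above, shows that even the strongest link (r = 0.40) corresponds to only 16% shared variance, while r = 0.07 corresponds to less than 0.5%. The dictionary keys and the function name are illustrative.

```python
# Correlations reported by Alliger and Janak (1989), as quoted in the text.
correlations = {
    "reaction-learning": 0.07,
    "reaction-behavior": 0.05,
    "learning-behavior": 0.13,
    "learning-results": 0.40,
    "behavior-results": 0.19,
}


def shared_variance(r: float) -> float:
    """Coefficient of determination r**2: share of variance two measures have in common."""
    return r ** 2


for pair, r in correlations.items():
    print(f"{pair}: r = {r:.2f}, r^2 = {shared_variance(r):.1%}")
```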

- The D. Kirkpatrick model does not provide specific solutions that organizations can use at each stage of training program evaluation; for this reason, many organizations do not use the whole model and focus only on the first two levels. Put simply, the model lacks practical guidelines on how to obtain results at the individual levels (how to generate, process and analyze data).

- The relationship between training and long-term results is unfortunately often blurred. Additionally, long-term effects have many causes, and training can be just one of them.

- Although the logic of the ROI method seems uncomplicated, assessing the elements it includes (the inflow of returns from a given investment, the outflow of resources required to carry out that investment, and the timing of these inflows and outflows in each future period) is quite subjective (Boudreau and Cascio, 2007).

- Typical ROI calculations focus on a single investment in human resources and do not consider how such investments interact with each other as an investment portfolio. Training may, for example, create value that outweighs its cost, but would this value not be even higher if it were combined with corresponding investments in individual incentive programs linked to training results?

- In practice, determining ROI objectively is difficult and complicated, and the result depends heavily on when the assessment is made. If the ROI model includes collecting post-training data, the effects attributable to the training must be isolated from that data. This "cleaned" data must then be converted into monetary values in order to calculate the return on investment and identify specific benefits. It is also important to assign a value to the costs of the training program, as these also affect the final return on investment. In short, the calculations required to measure ROI are very complex. In addition, to determine the ROI of each part of the training process correctly, a baseline should be established so that progress, development or improvement can be measured against it (Little, 2014).
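The chain of steps described above (baseline, isolation, conversion to money, comparison with costs) can be sketched as follows. This is a minimal illustration under stated assumptions: the isolation step multiplies the observed change by an estimated contribution share of training and a confidence factor, which is only one of several possible isolation techniques, and every field name and number is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrainingImpact:
    """Hypothetical inputs for one business indicator affected by training."""
    baseline: float        # indicator value before training (e.g. cases closed per month)
    post_training: float   # indicator value after training
    contribution: float    # estimated share of the change caused by training, 0..1
    confidence: float      # confidence in that estimate, 0..1
    value_per_unit: float  # monetary value of one unit of the indicator
    periods: int           # number of periods (e.g. months) over which benefits are counted


def monetized_benefit(impact: TrainingImpact) -> float:
    """Isolate the training-related part of the change and convert it into money."""
    change = impact.post_training - impact.baseline                # progress against the baseline
    isolated = change * impact.contribution * impact.confidence  # "cleaned" training effect
    return isolated * impact.value_per_unit * impact.periods


impact = TrainingImpact(baseline=100, post_training=120, contribution=0.5,
                        confidence=0.8, value_per_unit=50, periods=12)
benefit = monetized_benefit(impact)  # 20 * 0.5 * 0.8 * 50 * 12 = 4800
program_cost = 4000                  # fully loaded program costs (hypothetical)
roi = (benefit - program_cost) / program_cost * 100
print(f"benefit = {benefit:.0f}, ROI = {roi:.1f}%")
```

Changing `periods` alone changes the result, which illustrates how strongly ROI depends on the time horizon chosen.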

- Defining ROI unambiguously is problematic, as it always requires reference to hard indicators; the problem of isolating training effects also arises.

These dysfunctions should be supplemented with a comment on the Six Sigma method, which is currently fashionable in HR applications. According to W. Cascio and J. Boudreau, one of the basic limitations of this measure is that it cannot really be transferred to HR areas. It is a performance indicator that can be used to monitor overhead costs for almost any job, so when performance is emphasized, the focus on talent is lost. Paying special attention to reducing costs usually leads to the rejection of "more expensive" decision options, which may often be more valuable. Thus, although this measure seems financially attractive, it cannot reflect the value of talent (Cascio and Boudreau, 2011) or the added value of investment in training.

Operational Dysfunctions

- The Kirkpatrick and J. J. Phillips models involve multi-stage evaluation of training programs. Such detailed analysis requires both time and skill in applying the assessment scheme. In other words, these models require a high level of involvement from the HR department and line managers at the planning, implementation and assessment stages of training, which is why their practical value is perceived as doubtful. Moreover, the labor intensity of the models reduces the potential benefits of using them, which is why in practice evaluation is often performed only in part.

- Measuring the effects of training at level four is very complicated, even when organizations have clearly indicated which long-term goals should be achieved. It is difficult to provide convincing evidence of a causal relationship between the training and the desired outcome. Therefore, level four is often skipped.

- A deferred assessment of the effects of a single training, made without an agreed reference point, often loses its objectivity and tends to exaggerate those effects.

- With the popularization of the D. Kirkpatrick model, satisfaction questionnaires/surveys in which training participants evaluate the program, the way the training was conducted and the conditions in which it took place became widely used. An important limitation of this tool (surveys measuring reactions to training) is the frequently faulty design of the questions (too many open questions, too many questions about organizational and social aspects of the training), as well as the way employees approach the survey, which is not fully specified (e.g. a high rating does not require any further explanation, or respondents tend to carry non-substantive impressions over into their assessment of the training's usefulness). Naturally, employees' opinions can be taken into account when organizing subsequent training projects, but they can hardly be considered a sufficient basis for assessing the value of training (Andrzejczak, 2010).

- The survey (the basic tool used at stages I-III) does not provide reliable measurable information, as most people tend to give average or inflated ratings even when the survey is anonymous. Ratings can also be misleading, for example, if the last part of the training was spectacular and effective, because this is the part participants usually remember when assessing the training.

- The model places too much emphasis on the expectations of training participants, as if the training were meant only to meet the expectations of participants as its sole beneficiaries.

- The model does not solve the problem of the low level of employees’ responsibility for training results and their own professional development.

- Measuring the effects of training programs is often unrealistic because of the difficulty of finding a suitable control group and the large commitment of resources required. As a result, it often remains unclear whether the training was needed at all (Demerouti et al., 2011). The models recommend including control group assessments, which requires strong involvement from all training stakeholders. Consequently, the large volume of material to be analyzed often leads to the most important assessment stages being cut back as the system becomes overloaded. It is also worth mentioning that in practice it is rarely possible to repeat the same observations and tests involving control groups several times (Bramley, 2001).

- Another important obstacle to obtaining relevant measurement data is employees' common reluctance to disclose the actual level of their foreign language knowledge, their real skills in using IT and operating systems, their technological skills, qualifications, etc. At the same time, simply observing people and talking to them is not enough to assess their learning experience or to determine whether this type of activity is consistent with the company's goals.

- The development of abilities such as soft skills is difficult to translate into monetary values. Managers often do not know what is important to measure or how to collect, analyze and synthesize the data obtained (Abernathy, 1999).

- Another problem is the lack of a proper and reliable diagnosis of training needs: very often, training becomes a "benefit" for employees or an element of personnel marketing, rather than a response to the organization's actual and strategically important training needs. Employees report their own training needs based on their often subjective feelings, and these are aggregated across the organization. As a result, the company collects training "whims" rather than needs (Sienkiewicz, 2010).

- Top management is responsible for the relevance of training, since training expenditure is an investment. In practice, however, even in small companies, tasks related to organizing the training process are delegated to employees who often lack the competence to carry them out.

There are, of course, many other reasons why training expenditure is wasted, such as unclear expectations from management, little support in the workplace, no monitoring of post-training effects, resource shortages in HR departments, competence deficits that prevent new skills from being implemented, no incentives to apply new skills and knowledge, and discomfort with training related to organizational change. To sum up, the training process should also be supported by activities that take environmental factors into account, such as appropriate mechanisms for communicating the results achieved and employee incentives (Berge, 2008).

MTE Model

The MTE tool was tested in a government administration unit. The testing procedure compared the effects of two training management procedures: one based on the D. Kirkpatrick model and one based on the MTE model. The overall concept of the model has also been scientifically verified and has received positive implementation recommendations (Ziebacz and Tychmanska, 2016). The main challenge in implementing the tool was to develop a plan that would minimize the disruption to workflow caused by testing MTE.

The basic assumption at the model development stage was to reduce the labor intensity, cost and complexity of measuring training effectiveness compared with the methods and systems available on the market. Another important assumption was to increase employees' direct responsibility for the effectiveness of their professional development. What distinguishes the presented model is that effectiveness is measured not for a single training, but for the entire portfolio of training implemented in the organization. The model assumes that measuring the effectiveness of a single training is inefficient and often impossible in practice. Attempts to do so require a large commitment of resources (human, financial, organizational); as a result, the line between the effects of different training activities is often lost, and the actions taken are often superficial (surveys, knowledge tests) and insufficient for measuring training effectiveness. For this reason, it seems more reasonable to analyze aggregated training results and the impact of all training activities on significant organizational variables, as well as the trend of these results. In other words, the MTE model examines the relationship between the effectiveness of the company's entire training policy and strictly business indicators. This approach, first, improves the measurement process; second, makes it more efficient; third, makes the calculations more meaningful; and fourth, makes it possible to capture trends in the indicators and their relationships. The problem of isolating training effects can be addressed (estimated) more effectively on a macro scale than on a micro scale. When analyzing aggregate values, the term "measurement of training effectiveness" should therefore be replaced with "estimation of training effectiveness". The model is presented in Figure 1.

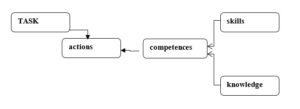

Fig. 1: The MTE method

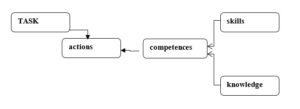

In most training effectiveness models, identifying training needs is the first stage of the process, and it is triggered by a problem diagnosed in the organization (lack of knowledge, skills or qualifications). In the "MTE" model, the starting point, i.e. the information object that serves as the reference point for identifying training needs, is the TASK.

Fig. 2: The structure of information objects used at the stage of identifying the training needs of the “MTE” model.

The structure of information objects in the model follows from the assumption that "training" is short-term in nature: it is not so-called school education (starting from scratch). The employee is assumed to have a certain base of knowledge and skills, as well as professional experience, that allows him or her to participate actively in planning his or her own development. During the training, the employee receives instruction focused on mastering a specific activity.

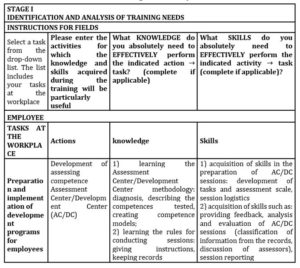

Stage I: Identification and Analysis of Training Needs

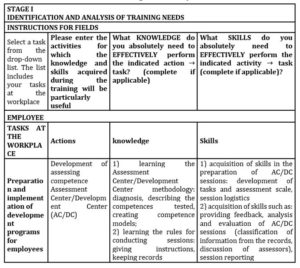

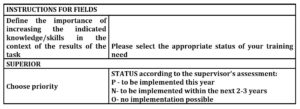

An entry in the electronic sheet of the individual record of training needs begins with selecting tasks from the employee's scope of duties and describing the activities that, in the employee's view, will run more efficiently as a result of acquiring specific new skills or specific knowledge. Training needs are therefore defined in two forms:

- as skills – the ability to apply knowledge to perform tasks and solve problems,

- as an area of knowledge – a set of justified judgments (descriptions of facts, theories and principles of conduct) resulting from human cognitive activity (National Qualifications System as a tool for lifelong learning, Educational Research Institute).

An example was prepared for an employee of the Human Resources Department, Training, Recruitment and Development Section.

The training need has been identified in relation to the job task: “Preparation and implementation of development programs for employees”.

Table 2: Template of the form for entering data into the database: training needs study sheet

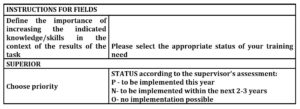

The method of collecting information on training needs described above is based on the following assumptions:

- the employee is best placed to self-diagnose the competence gaps that hinder achieving the planned results of his or her work,

- the employee can describe the competence gaps he or she observes in a way that allows the extent to which the training has closed these gaps to be assessed at the training assessment stage,

- the employee who initiated the training actively works towards the training objectives he or she has articulated.
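The structure of a training needs entry described above (a task from the scope of duties, the activity expected to improve, and needs expressed as skills or areas of knowledge) can be sketched as a small data model. The class and field names are hypothetical and do not reproduce the actual MTE electronic sheet; the example reuses the HR task mentioned above, while the employee identifier, activity and need descriptions are invented for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class NeedType(Enum):
    SKILL = "skill"          # ability to apply knowledge to perform tasks and solve problems
    KNOWLEDGE = "knowledge"  # area of knowledge: facts, theories, principles of conduct


@dataclass
class TrainingNeed:
    need_type: NeedType
    description: str


@dataclass
class NeedsEntry:
    employee_id: str
    task: str      # task selected from the scope of duties
    activity: str  # activity the employee expects to run more efficiently after training
    needs: list[TrainingNeed] = field(default_factory=list)

    def add_need(self, need_type: NeedType, description: str) -> None:
        self.needs.append(TrainingNeed(need_type, description))


entry = NeedsEntry(
    employee_id="HR-001",  # hypothetical identifier
    task="Preparation and implementation of development programs for employees",
    activity="Designing a development path for a new team leader",  # hypothetical
)
entry.add_need(NeedType.SKILL, "Running a competence gap interview")              # hypothetical
entry.add_need(NeedType.KNOWLEDGE, "Methods of evaluating development programs")  # hypothetical
print(len(entry.needs), [n.need_type.value for n in entry.needs])
```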

The training needs are then analyzed, and the result is the output structure shown below.

Table 3: Template of the form for entering data into the database: training needs study sheet

Stage II: Specification of the development service order and implementation of the training

Information on the status of employees' entries regarding their training needs is available on an ongoing basis, and entries can be supplemented when new tasks, and consequently new training needs, appear. The employee receives feedback on the opportunities to develop the skills and knowledge he or she has specified under the programs offered by the employer.

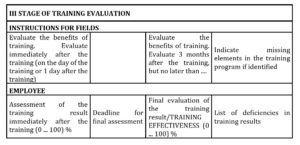

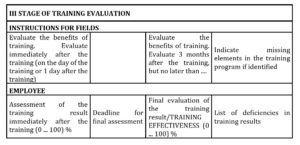

Stage III: Assessment of the implementation of the training service

The employee evaluates the effectiveness of the training service provided twice:

- Immediately after the training (taking advantage of fresh impressions: comparing the "ordered" training results with the results actually obtained through the training),

- Three months after the end of the training, a period during which the employee should closely monitor his or her proficiency in the knowledge and skills acquired during the training in the context of the task being carried out.

If the training effectiveness rating is lower than 90%, the employee completes the field "list of deficiencies in the training results" in the electronic sheet, entering the elements/aspects of the training that were included in the program (order) but were not delivered.
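The two-step assessment and the 90% threshold can be sketched as follows. The source does not specify how the percentage is obtained, so this sketch assumes, hypothetically, that it is the share of ordered learning outcomes the employee rates as delivered; the class, method and outcome names are also invented.

```python
from dataclasses import dataclass

THRESHOLD = 90.0  # percent; below this, a list of deficiencies is required


@dataclass
class Assessment:
    label: str
    delivered: dict[str, bool]  # ordered outcome -> rated as delivered by the employee?

    def score(self) -> float:
        """Hypothetical scoring: share of ordered outcomes rated as delivered, in percent."""
        if not self.delivered:
            raise ValueError("no ordered outcomes")
        return 100.0 * sum(self.delivered.values()) / len(self.delivered)

    def deficiencies(self) -> list[str]:
        """Outcomes to enter in the 'list of deficiencies' field when the score is below 90%."""
        if self.score() >= THRESHOLD:
            return []
        return [outcome for outcome, ok in self.delivered.items() if not ok]


ordered = ["design a development program", "run a needs survey",
           "budget the program", "evaluate outcomes"]  # hypothetical ordered outcomes
immediate = Assessment("immediately after", {o: True for o in ordered})
after_3m = Assessment("after 3 months", {**{o: True for o in ordered}, "evaluate outcomes": False})

for a in (immediate, after_3m):
    print(a.label, a.score(), a.deficiencies())
```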

Table 4: Sheet for recording individual training needs

Stage IV: Calculation of costs and benefits of training programs

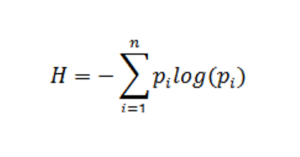

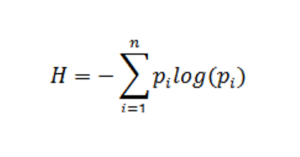

The benefits and costs of training programs are not assessed at the level of a single training. Instead, the effectiveness of the implemented training policy is assessed in the context of organizational efficiency and effectiveness, by analyzing the interdependencies and dynamics of changes in training cost-time indicators and general organizational indicators. Information entropy, as a measure of information, can be used to study these changes. According to E. Kolbusz, in the field of management, and especially when solving specific management problems, it is necessary to work with a synthetic definition of the qualitative features of information, so that every configuration of the listed features falls within the definition of this term (Kolbusz, 1993). In an information system, a given piece of information is useful if it is used to solve a particular management problem, or more precisely, when it is an important condition for solving that problem (Unold, 2004). Mathematically, entropy can be expressed using the following formula (Mitzenmacher and Upfal, 2009):

Formula 1:

$$H = -\sum_{i=1}^{n} p_i \log_2 p_i$$

where:

H – entropy

p_i – the probability of outcome i, for a probability distribution specified on the set {1, …, n}

According to C. Shannon's own formulation, this formula expresses the entropy of the probability distribution (p_1, p_2, …, p_n) specified on the set {1, …, n}. It quantifies information in terms of choice, or indeterminacy. It follows from the formula that H = 0 if and only if one of the probabilities equals one and all the others are zero. This is a state of certainty: the statement that, out of a set of events that may occur with certain probabilities, one has actually occurred reduces uncertainty to zero. According to Shannon, this property qualifies H as a reasonable quantitative measure of choice, or of information (Mitzenmacher and Upfal, 2009).
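Formula 1 can be checked numerically. The short Python sketch below implements Shannon entropy with base-2 logarithms (so H is measured in bits) and uses the usual convention that terms with p_i = 0 contribute nothing; the function name is hypothetical. It confirms the boundary cases discussed above: certainty gives H = 0, and a uniform distribution over n outcomes gives the maximum, log2 n.

```python
import math


def shannon_entropy(probs: list[float]) -> float:
    """H = -sum(p_i * log2(p_i)); terms with p_i = 0 are skipped (0 * log 0 := 0)."""
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return sum(-p * math.log2(p) for p in probs if p > 0)


print(shannon_entropy([1.0, 0.0, 0.0]))    # 0.0  (one outcome is certain)
print(shannon_entropy([0.25] * 4))         # 2.0  (uniform over 4 outcomes = log2 4)
print(shannon_entropy([0.5, 0.25, 0.25]))  # 1.5
```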

The goal of management is to ensure the stable development of the enterprise by skillfully controlling its functioning in the environment, so that it maintains its separateness on the one hand and an optimal level of exchange with the environment on the other. The energy resources (understood as competences) embodied in the employees who make up the organization must be regulated so that no excessive clusters of them form anywhere that could "disrupt" the organization from the inside (an excessive concentration of similar competences). In this way, the organization retains the energy resources needed for its current functioning and development, as well as for effective process management, including in adverse conditions. From this perspective, management is the implementation of compensatory processes aimed at achieving functional balance.

Conclusion

Managing the effectiveness of the training process in modern organizations operating on the market requires broad changes, in which an individualized approach becomes a necessity. Individualization is understood as the implementation of tailor-made systems. It should also be accepted that testing the effectiveness of training is a continuous and long-term process. Moreover, the lasting results of investment in training become visible only after a long time, and they are often non-material, which makes them difficult to quantify. The main problem is therefore to define clearly the extent to which training has contributed to the observed changes; showing the trend of change is a greater achievement. In this context, developing a method for efficiently managing the training process in the organization, including assessing training effectiveness, is a key challenge for most organizations.

In the proposed model, training effectiveness is understood as the effectiveness of the training policy. Effectiveness is assessed over a period of time (e.g. a year) rather than for a single training, which gives a more useful perspective on the effects and benefits of training processes for organizational needs. A significant difference that distinguishes the MTE method from other methods available on the market concerns the study of training needs. The model replaces the information object proposed by various authors, the "problem", with the object "task" ("task element – activity"). From the point of view of the usefulness and value of the information obtained, it is better to start the training evaluation process not by "defining the problem" but by "describing the activity/task which, according to the employee, will run more efficiently after acquiring specific skills and knowledge". According to the model's assumptions, this structural change (shifting the emphasis in identifying training needs and assessing training results to employees' tasks) should promote the effectiveness of training. An equally important element of the method is the way in which data on employees' training needs are collected, which helps build trainees' responsibility for the results (learning outcomes) of the training process. The MTE model increases the responsibility of trained employees by having them define their own needs and by creating opportunities to verify the quality of training (comparing the expected skills with the manager's verification of how well they have been mastered). This is an important transition from passive participation to actively mastering the effects of training. The goal is employees' responsible participation in planning training and evaluating its results.
The approach has two further aims: to eliminate the negative connotation of the word “problem”, which can inhibit motivation to participate actively in the training process, and to link the results (learning outcomes) to specific occupational tasks and their components (activities). The information-collection process in MTE yields a synthetic specification of the subject matter of the training order, which considerably facilitates the work of HR units in determining training needs and selecting training companies, and also reduces the time needed to organize training. It also provides a basis for assessing the extent to which the ordered results (learning outcomes) of a given training process have been achieved. As a result, it is possible to evaluate the implementation of the training policy and relate its effects to company-wide indicators.
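The comparison described above, between the outcomes employees ordered for specific task elements and the manager's verification of their mastery, aggregated over an analyzed period rather than per training, can be illustrated with a short sketch. This is not the MTE method's own procedure: the record structure, the field names (`OutcomeRecord`, `verified_by_manager`), the helper functions and the simple ratio used as an "implementation rate" are hypothetical assumptions made only for this example, and the sample records are invented.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeRecord:
    """One ordered learning outcome tied to a task element (activity).

    Hypothetical structure: the field names are illustrative assumptions.
    """
    employee: str
    task: str                  # occupational task
    activity: str              # task element the employee expects to perform better
    period: str                # analyzed period, e.g. a year
    verified_by_manager: bool  # manager confirmed mastery of the expected skill


def implementation_rate(records, period):
    """Share of outcomes ordered in `period` that the manager verified as mastered.

    Returns None when nothing was ordered in that period.
    """
    in_period = [r for r in records if r.period == period]
    if not in_period:
        return None
    verified = sum(r.verified_by_manager for r in in_period)  # True counts as 1
    return verified / len(in_period)


def rate_by_task(records, period):
    """The same ratio, broken down by occupational task."""
    totals = defaultdict(int)
    verified = defaultdict(int)
    for r in records:
        if r.period == period:
            totals[r.task] += 1
            verified[r.task] += r.verified_by_manager
    return {task: verified[task] / totals[task] for task in totals}


# Invented sample data, for illustration only.
records = [
    OutcomeRecord("A", "customer service", "handling complaints", "2016", True),
    OutcomeRecord("A", "customer service", "preparing offers", "2016", False),
    OutcomeRecord("B", "reporting", "building monthly reports", "2016", True),
    OutcomeRecord("B", "reporting", "validating source data", "2015", True),
]
```

With the sample data, `implementation_rate(records, "2016")` gives 2/3, and `rate_by_task(records, "2016")` gives 0.5 for "customer service" and 1.0 for "reporting". Aggregating by period and task, rather than by individual training, mirrors the policy-level perspective of the model; relating such ratios to company-wide indicators would require additional data not modeled here.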

References

- Abernathy, D.J. (1999), ‘Thinking outside the evaluation box’, Training & Development, Vol. 53, February, 18-23.

- Alliger, G. and Janak, E. (1989), ‘Kirkpatrick’s Levels of Training Criteria: Thirty Years Later’, Personnel Psychology, Vol. 42, 331-342.

- Andrzejczak, A. (2010), Projektowanie i realizacja szkoleń, PWE, Warszawa.

- Arasanmi, C.N. (2019), ‘Training effectiveness in an enterprise resource planning system environment’, European Journal of Training and Development, Vol. 43 No. 5/6, 456-469.

- Bates, R. (2004), ‘A critical analysis of evaluation practice: the Kirkpatrick model and the principle of beneficence’, Evaluation and Program Planning, Vol. 27 No. 3, 341-347.

- Berge, Z.L. (2008), ‘Why it is so hard to evaluate training in the workplace’, Industrial and Commercial Training, Vol. 40 No. 7, 390-395.

- Boudreau, J. and Cascio, W.F. (2011), Inwestowanie w ludzi. Wpływ inicjatyw z zakresu ZZL na wyniki finansowe przedsiębiorstwa, Wydawnictwo Wolters Kluwer Polska, Warszawa.

- Bramley, P. (2001), Ocena efektywności szkoleń, Oficyna Ekonomiczna, Kraków.

- Cassidy, M., Görg, H. and Strobl, E. (2005), ‘Knowledge accumulation and productivity: evidence from plant-level data for Ireland’, Scottish Journal of Political Economy, Vol. 52 No. 3, 344-359.

- DeFeo, J.A. (2000), ‘Six sigma: new opportunities for HR, new career growth for employees’, Employment Relations Today, Vol. 27 No. 2, 1-7.

- Demerouti, E., Eeuwijk, E., Snelder, M. and Wild, U. (2011), ‘Assessing the effects of a “personal effectiveness” training on psychological capital, assertiveness and self-awareness using self-other agreement’, Career Development International, Vol. 16 No. 1, 60-81.

- Fleming, J.H., Coffman, C. and Harter, J.K. (2005), ‘Manage your human Sigma’, Harvard Business Review, July-August, 106-114.

- Griffin, R. (2012), ‘A practitioner friendly and scientifically robust training evaluation approach’, Journal of Workplace Learning, Vol. 24 No. 6, 393-402.

- Janowska, Z. (2010), Zarządzanie zasobami ludzkimi, 2nd revised edition, PWE, Warszawa.

- Kirkpatrick, D. (2001), Pomiar efektywności szkoleń, Studio Emka, Warszawa.

- Kolbusz, E. (1993), Analiza potrzeb informacyjnych przedsiębiorstw. Podstawy metodologiczne, Uniwersytet Szczeciński, Szczecin.

- Latif, K. (2012), ‘An integrated model of training effectiveness and satisfaction with employee development interventions’, Industrial and Commercial Training, Vol. 44 No. 4, 211-222.

- Leimbach, M. (2010), ‘Learning transfer model: a research driven approach to enhancing learning effectiveness’, Industrial and Commercial Training, Vol. 42 No. 2, 81-86.

- Little, B. (2014), ‘Best practices to ensure the maximum ROI in learning and development’, Industrial and Commercial Training, Vol. 46 No. 7, 400-405.

- Loomba, A. and Karsten, R. (2019), ‘Self-efficacy’s role in success of quality training programmes’, Industrial and Commercial Training, Vol. 51 No. 1, 24-39.

- Mitzenmacher, M. and Upfal, E. (2009), Metody probabilistyczne i obliczenia, Wydawnictwo Naukowo-Techniczne, Warszawa.

- Pandey, A. (2007), ‘Strategically focused training in Six Sigma way: a case study’, Journal of European Industrial Training, Vol. 31 No. 2, 145-162.

- Phillips, P.P., Phillips, J.J., Stone, R.D. and Burkett, H. (2009), Zwrot z inwestycji w szkolenia i rozwój pracowników, Wolters Kluwer, Kraków.

- Rae, L. (2006), Efektywne szkolenie. Techniki doskonalenia umiejętności trenerskich, Oficyna Ekonomiczna, Kraków.

- Sienkiewicz, Ł. (2010), ‘Rola szkoleń i rozwoju w budowaniu innowacyjności pracowników i przedsiębiorstwa’, in Borkowska, S. (Ed.), Rola ZZL w kreowaniu innowacyjności organizacji, Wydawnictwo C.H. Beck, Warszawa.

- Skylar K. and Serkan Yalcin P. (2010), ‘Managerial training effectiveness’, Personnel Review, Vol. 39 No. 2, 227-241.

- Steensma, H. and Groeneveld, K. (2010), ‘Evaluating a training using the “four levels model”’, Journal of Workplace Learning, Vol. 22 No. 5, 319-331.

- Stolovitch, H.D. (2007), ‘The story of training transfer’, Talent Management Magazine, [Online], [Retrieved May 28, 2019], www.talentmgt.com/columnists/human-peformance/2007/September/419/index.php

- Unold, J. (2004), System informacyjny a jakościowe ujęcie informacji, Akademia Ekonomiczna we Wrocławiu, Wrocław.

- ‘Krajowy System Kwalifikacji jako narzędzie uczenia się przez całe życie’ (2014), Instytut Badań Edukacyjnych, [Online], [Retrieved December 12, 2016], http://biblioteka-krk.ibe.edu.pl/opac_css/doc_num.php?explnum_id=6559

- Ziębacz, I. and Tychmanska, A. (2016), Research: developing a method for measuring the effectiveness of training at the Polish Agency for Enterprise Development, Warsaw.