Introduction

Clinical prediction rules (CPRs) are tools designed to assist medical decision-making. They are derived from original research and integrate three or more variables from history, clinical signs, or routine examinations [1-4]. CPRs are intended for clinicians caring for patients, to help them make diagnostic and/or therapeutic decisions at the bedside [2]. They are particularly recommended when medical decisions are uncertain [3]. They are usually created by multivariate analysis and either provide a probability of disease or outcome, or suggest a diagnostic or therapeutic course of action [2]. A typical example of a CPR is the Ottawa ankle rule, which helps the clinician decide whether to order an ankle X-ray to rule a fracture in or out after an injury [5].

Because these rules are used to make decisions about patient care, they must be developed and validated according to high methodological standards [2]. In 1985, Wasson et al. published methodological criteria for the evaluation of CPRs [4]. These criteria were modified by Laupacis et al. in 1997, as part of a review of the quality of recently published CPRs in adult medicine [1]. Then, in 2000, McGinn et al. proposed guidelines for the development of CPRs based on these criteria, on behalf of the Evidence-Based Medicine Working Group [2].

CPRs have been developed in pediatrics since the 1990s. A recent methodological review by Maguire et al. of a large set of pediatric CPRs (n=137) assessed their methodological quality [6]. However, their quality has not been compared with that of adult CPRs, which are widely regarded as being of high quality. Thus, the purpose of the present study was to review the methodological quality of a set of published pediatric CPRs using the standards of the Evidence-Based Medicine Working Group, and to compare it with the methodological quality of adult CPRs.

Methods

Design

We conducted a methodological review of a set of recently published pediatric CPRs, using the Evidence-Based Medicine methodological standards [2]. Although our study was a methodological review and not a systematic review, its design shared features with systematic reviews (e.g. inclusion strategy and data extraction), for which the PRISMA guideline was followed [7].

Data Source

Articles were identified by hand-searching the tables of contents of a set of major general and pediatric journals published in 2006, based on a set of previously collected pediatric rules [8]. We used manual rather than electronic database searching, although it was more time-consuming, because CPRs were not well indexed in electronic databases at the time, so an electronic search would not have been exhaustive. General and pediatric journals were chosen based on their impact factor: ‘The New England Journal of Medicine’, ‘The Lancet’, ‘British Medical Journal’, and ‘Journal of the American Medical Association’ for the general journals, and ‘Pediatrics’, ‘The Journal of Pediatrics’, ‘Archives of Disease in Childhood’, and ‘Archives of Pediatrics & Adolescent Medicine’ for the pediatric ones. One reviewer screened the titles and abstracts against the inclusion and exclusion criteria and considered reports of CPRs for inclusion. When information was insufficient to make a decision, the full article was read and, if needed, discussed with a second reviewer until consensus was reached.

Study Eligibility

All articles reporting a CPR derivation were included. A CPR was defined as a tool designed to assist medical decisions, derived for clinicians taking care of patients; the tool had to combine three or more variables from history, clinical examination, and/or routine biological or imaging examinations [2]. Pediatric patients were defined as children younger than 18 years old. A CPR had to provide a probability of an outcome or suggest a diagnostic or therapeutic course of action. The final decision to include eligible papers was reached after reading the full text.

Data Extraction

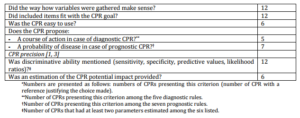

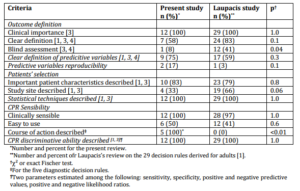

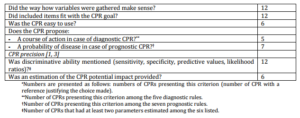

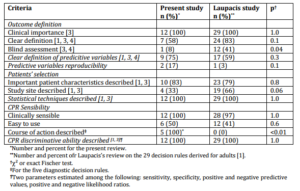

One reviewer abstracted data from the full text of each study to obtain information on the year and journal of publication, the type and country of study, the number of patients reported, the clinical question and need for deriving a CPR, and all the items identified by the Evidence-Based Medicine Working Group [2]. The datasheet for methodological quality extraction was based on the list proposed by Stiell et al. [3], with some additional details drawn from the articles of Wasson, Laupacis, and Concato [1, 4, 9]. The detailed list of methodological items is shown in Table 1. Data were extracted into a standardized electronic sheet. Any uncertainties were discussed with a second reviewer to reach a consensus. Where necessary, authors were contacted for data or to clarify information.

Table 1. Methodological Quality of CPR Derivation

Analysis

We first described the general characteristics of the included CPRs. Second, the methodological quality was described and compared, item by item, with that of adult CPRs in the previously published review by Laupacis et al. [1].

Results

Studies’ Characteristics

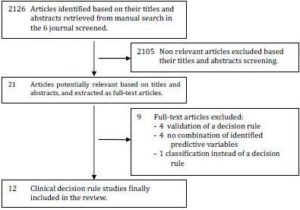

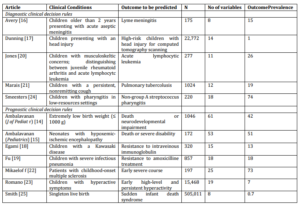

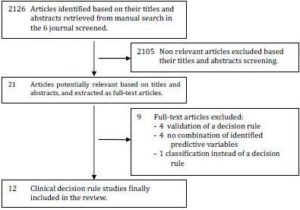

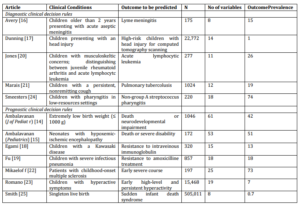

The manual search identified 21 potentially relevant articles among the 2126 original articles published in 2006 in the 8 journals screened (Fig 1). Of the 860 original articles published in general journals (i.e. ‘The New England Journal of Medicine’, ‘JAMA’, ‘BMJ’, and ‘The Lancet’), 51 (6%) were pediatric studies, and we retrieved only 4 CPRs (0.5%), none of which were pediatric CPRs. All potentially eligible articles were therefore from pediatric journals. Review of the full text of the 21 potentially eligible studies identified 12 studies [10-21] that fulfilled all inclusion criteria (Fig 1). Nine were from ‘Pediatrics’ [11, 12, 15-21], two from ‘The Journal of Pediatrics’ [10, 14], and one from ‘Archives of Disease in Childhood’ [13]. Five CPRs were diagnostic, and the other seven were prognostic [10, 11, 14, 15, 18, 19, 21]. The clinical issues that the CPRs dealt with are shown in table 1. The median number of children enrolled for the derivation of each CPR was 589 (range: 172-505,011; Table 2). The median number of predictors assessed for possible inclusion was 16.5 (range: 8-61). The median prevalence of the outcome predicted by the rule was 18.5% (range: 0.7% [sudden infant death syndrome] to 74% [non-group A streptococcus pharyngitis]).

Figure 1: Flow Chart for the Inclusion of CPRs for Health Conditions of Childhood

Table 2. Description of the Clinical Decision Rules

Four studies were prospective and specifically designed for CPR derivation [13, 15, 17, 20], three were secondary analyses of prospective randomized controlled trials [10, 11, 19], and five were retrospective [12, 14, 16, 18, 21]. Eight articles were multicentre cohort studies [10, 11, 13, 15, 17-20], two were single-centre cohort studies [12, 14], one was a population-based study [21], and one was a case-control study [16].

Assessment of Methodological Quality of CPRs

The methodological quality of the included CPRs is detailed in table 1. All rules appeared to be of clinical importance, for either a diagnostic or a prognostic purpose. On the one hand, the corresponding clinical conditions were frequent, with references to pre-existing studies in most cases (92%) [10-13, 15-21]. On the other hand, clinicians were convinced that clinical and/or routine examinations would help predict the outcome in 83% of the situations [10-15, 17, 19-21], as required to define a relevant clinical condition for deriving CPRs.

All outcomes were found to be of clinical importance, and 72% of them were justified with bibliographic references [11-13, 15, 17-21]. Fifty-eight percent of outcomes were clearly defined [10-15, 17-21], but definitions were rarely supported by references (33% – [10, 14, 18, 21]) and blind assessment was rare (8% – [11]). Predictive variables were clearly defined in 92% of cases [11-21], but with weak bibliographic support (17% – [15, 19]). Only one study did not measure predictive variables in a sufficient proportion of patients [21]. Reproducibility of predictive variables was poorly evaluated: between-observer variability was quantified with a kappa coefficient (for qualitative variables [22]) or an intra-class correlation coefficient (for quantitative variables [23]) in only two studies [13, 15]. None of the studies estimated within-observer reproducibility (variability of the same observer across different examinations). Inclusion criteria, the patient selection process, and patients’ general characteristics were well described in most cases (83 to 100%). The sample size was defined a priori and justified by taking into account the risk of model over-fitting in only one study [13]. Statistical methods varied between studies but were always explained and adequately supported. Methods often combined univariate with multivariate analyses (67% – [13-15, 17-21]). The latter were based on logistic regression models (67% – [10-12, 14, 15, 19-21]), Cox models for longitudinal data (8% – [18]), or classification and regression tree techniques (25% – [10, 13, 17]). CPRs appeared to be “clinically sensible”, providing either a course of action for diagnostic CPRs or an event probability for prognostic CPRs. Half of the CPRs required a calculator to be applied [12, 14, 18-21].
The discriminative power (reported with at least two of the following six parameters: sensitivity, specificity, positive or negative predictive values, positive or negative likelihood ratios) was estimated for all CPRs. However, the potential impact of using the rule (in terms of useless examinations avoided, treatments given, cost-effectiveness, etc.) was discussed for only half of the CPRs [13, 14, 17, 19-21].
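As a reminder of how the six accuracy parameters listed above relate to one another, the following Python sketch computes each of them from a 2x2 table of rule result against reference outcome. The class name `TwoByTwo`, the function name `accuracy_measures`, and the example counts are hypothetical illustrations and are not taken from any of the reviewed studies; the sketch also assumes that no denominator is zero.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Counts from cross-tabulating a rule against the reference outcome."""
    tp: int  # rule positive, outcome present
    fp: int  # rule positive, outcome absent
    fn: int  # rule negative, outcome present
    tn: int  # rule negative, outcome absent


def accuracy_measures(t: TwoByTwo) -> dict:
    """Return the six usual accuracy parameters (assumes non-zero denominators)."""
    sensitivity = t.tp / (t.tp + t.fn)
    specificity = t.tn / (t.tn + t.fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": t.tp / (t.tp + t.fp),       # positive predictive value
        "npv": t.tn / (t.tn + t.fn),       # negative predictive value
        "lr_pos": sensitivity / (1 - specificity),  # positive likelihood ratio
        "lr_neg": (1 - sensitivity) / specificity,  # negative likelihood ratio
    }


# Hypothetical counts, for illustration only.
example = TwoByTwo(tp=90, fp=30, fn=10, tn=170)
for name, value in accuracy_measures(example).items():
    print(f"{name}: {value:.3f}")
```

Predictive values depend on the prevalence of the outcome in the study sample, whereas sensitivity, specificity, and likelihood ratios do not, which is one reason for reporting more than one of these parameters.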

Comparison of Our Review with Laupacis Results for Adult CPR

The methodological quality of CPR derivation in children was compared with that in adults, as evaluated by Laupacis et al. [1] (table 3). Laupacis et al. reviewed the methodological quality of CPRs for adults published in general journals (Annals of Internal Medicine, The Lancet, BMJ, JAMA) between 1991 and 1994. The authors included 29 CPRs, of which 25 were CPR derivations. Methodological quality did not significantly differ between pediatric (our review) and adult (Laupacis review [1]) CPRs for most items. However, pediatric CPRs more often showed the following weaknesses compared with adult CPRs: blind assessment was less frequent (8% vs. 41%; p=0.04) and the study site description was more often missing (33% vs. 6%; p=0.06). Conversely, pediatric diagnostic CPRs always provided a course of action, unlike adult CPRs (100% vs. 0%; p<0.01).
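The text does not state which statistical test produced the p-values above. As one possible approach for comparing two proportions with small counts, the sketch below implements a two-sided Fisher exact test using only the Python standard library. The function name and the example counts are assumptions made for illustration (only the pediatric figure of 1 blinded study out of 12 follows from the results; the adult counts are hypothetical), so the output need not reproduce the published p-values.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups; columns are item fulfilled / not fulfilled.
    The p-value sums the probabilities of all tables with the same margins
    that are no more likely than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2
    denom = comb(total, col1)

    def prob(k: int) -> float:
        # Hypergeometric probability of k "fulfilled" items in the first row.
        return comb(row1, k) * comb(row2, col1 - k) / denom

    k_min = max(0, col1 - row2)
    k_max = min(row1, col1)
    p_observed = prob(a)
    # Small relative tolerance guards against floating-point ties.
    p_value = sum(
        prob(k) for k in range(k_min, k_max + 1)
        if prob(k) <= p_observed * (1 + 1e-9)
    )
    return min(1.0, p_value)


# Hypothetical counts: 1 of 12 pediatric CPRs versus 12 of 29 adult CPRs
# with blind assessment (the adult counts are an assumption).
print(fisher_exact_two_sided(1, 11, 12, 17))
```

With samples as small as 12 rules, an exact test is generally preferable to a chi-square approximation, although either choice would need to be confirmed against the original analysis.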

Table 3. Comparison of Methodological Quality of CPR Derivation between the Present Review and the Review Performed by Laupacis et al. for CPR in Adulthood [1]

Discussion

We reviewed the methodological quality of pediatric CPRs and compared it with the quality of adult CPRs previously reviewed by Laupacis et al. [1]. All included CPRs were published in pediatric journals, although most were of good methodological quality. Pediatric CPRs fulfilled the following methodological items: (i) they were derived for an adequate clinical condition where CPRs would be desirable, (ii) predictive and outcome variables were usually well defined, and (iii) inclusion criteria were clearly established and stated, allowing readers to evaluate whether CPR results could be applied in their own setting. However, we found some weaknesses in CPR derivation. First, blinded outcome assessment was rare, leading to a potential overestimation of CPR discriminative ability [24, 25]. Second, inter-observer reproducibility was rarely evaluated. This could affect CPR robustness, especially if a CPR was based on many so-called “soft” variables (i.e. variables derived from clinical examination that can vary between physicians because they are hard to define precisely and to evaluate in the same manner) [1, 3]. Third, sample size was very rarely calculated a priori, potentially leading to wide confidence intervals around the parameters estimating discriminative ability. Lastly, it is regrettable that only half of the CPRs were easy to use, meaning at the patient’s bedside and without a calculator, and that only half of the studies estimated the potential impact of CPR use.
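To make the notion of inter-observer reproducibility concrete, the following Python sketch computes Cohen's kappa for two observers rating the same patients on a qualitative predictor. It is a minimal illustration under stated assumptions: the function name `cohen_kappa` and the ratings are hypothetical and do not come from any reviewed study.

```python
from collections import Counter


def cohen_kappa(rater_a: list, rater_b: list) -> float:
    """Cohen's kappa: agreement between two raters corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of patients")
    n = len(rater_a)
    # Observed proportion of patients on whom the two raters agree.
    observed = sum(x == y for x, y in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[cat] * freq_b[cat] for cat in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of a "soft" sign (1 = present, 0 = absent) in 10 children.
observer_1 = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]
observer_2 = [1, 1, 0, 0, 0, 0, 1, 0, 1, 1]
print(round(cohen_kappa(observer_1, observer_2), 2))  # 0.6
```

In this example the observers agree on 8 of 10 children, but half of that agreement would be expected by chance alone, which is why kappa (0.6) is lower than the raw agreement (0.8).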

Compared with the methodological quality of the adult CPRs reviewed by Laupacis et al. [1], pediatric CPR quality did not significantly differ for 9 out of 12 items. Pediatric CPRs used blind assessment less often than adult CPRs, confirming our first results on this weakness. Pediatric CPRs also mentioned the study site less often than adult CPRs did, which could affect transportability when readers want to evaluate how similar their setting is to that of the study. Lastly, and surprisingly, pediatric CPRs more often provided the course of action to be taken than adult CPRs did. This could partly be explained by the fact that the CPRs reviewed by Laupacis et al. were derived more than ten years before the CPRs we reviewed. Improvements in CPR derivation might explain this point. The reviewed CPRs were typically prediction rules: they were based on scores or risk-stratification algorithms that provide diagnostic or prognostic probabilities, and aimed to help clinicians improve their clinical decisions. In our review, pediatric CPRs more often provided a course of action rather than simple outcome probabilities and, according to the distinction pointed out by Reilly et al. [26], they were closer to decision rules. However, none of the rules had undergone a formal impact analysis to determine whether they improve outcomes when used in clinical practice. Thus, when using a CPR, clinicians usually do not know its effect on patient care, although this knowledge is a requirement for a clinical decision rule.

Our findings were highly congruent with those of Maguire et al. [6] in their systematic review of pediatric CPRs, which lends weight to the comparison with the methodological quality of adult CPRs despite our non-exhaustive sample of CPRs. The most important quality deficiencies found by Maguire et al., which affected the majority of studies, were inadequate blinding of predictor variables and outcomes, limited assessment of the reproducibility of predictor variables, and insufficient study power [6]. When investigators or clinicians evaluate tests without being blinded to the disease status, they may be influenced by their beliefs in the new test and unconsciously overestimate its performance. For a clinical decision rule based on one of these tests, this may also lead to an overestimation of diagnostic performance [24, 25]. All diagnostic studies, including those of clinical decision rules, have to address the inter-observer reliability of predictor variables. Indeed, rules are designed to be used by many different clinicians, from different medical backgrounds, in different settings, departments, and countries, with variation in their medical culture. Therefore, testing rules across observers is essential to assess whether their performance remains similar when they are proposed to a wide range of physicians [1, 3]. The last weakness pointed out by both the review by Maguire et al. and ours was insufficient power for statistical modeling. This is related to the fact that investigators deriving clinical decision rules are not accustomed to estimating a priori the number of patients required. It thus leads to an inappropriate ratio of the number of events to the number of predictors included in the final equation, despite recent simulation studies demonstrating that a ratio of 7 events per predictor would be acceptable [27]. Because Maguire et al. scored the reporting of CPR performance differently, they concluded that it was insufficient.
However, we showed that all the CPRs reviewed reported at least two of the six possible diagnostic accuracy parameters. Maguire et al. also raised concerns about the rigid structure of CPRs and the objective of achieving very high performance for a CPR to be considered of interest. The authors pointed out that these rigid, high-level goals were unrealistic and thus led to CPRs that were not used. They suggested that those who build CPRs should reconcile high-level performance objectives with the realities of pediatric practice, in order to derive more flexible and useful CPRs. This is particularly true in areas of daily practice, such as fever without source, where clinician judgment alone is weak and variability between physicians is very high. Thus, we conclude that there is room in such areas for deriving warranted, useful, and applicable CPRs, even if their performance is not as high as the rigid, high-level initial objectives would require. Interestingly, Maguire et al. went further, suggesting that CPRs for child health conditions should include a decision-aid component and incorporate the perceptions and preferences of parents and children into the decision-making process. Notably, none of the CPRs reviewed by Maguire et al. used data mining tools for their derivation, whereas two CPRs included in our review were based on CART (classification and regression tree) partitioning [10, 13]. Meanwhile, science is evolving toward proteomics and genomics, which can quickly provide very powerful tools for daily practice from a very small amount of blood. In the coming years, CPRs will face the task of integrating these variables into their derivation, which means moving from classical statistical modeling to data mining procedures.
However, the challenge for investigators who derive CPRs in the future will be to keep in mind that the goal of CPRs remains to empower clinicians with data, regardless of how complex the data structure and analyses are.
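The events-per-predictor ratio mentioned earlier in this discussion is simple to check before a derivation study begins. The Python sketch below is a minimal illustration, assuming the threshold of 7 events per predictor quoted in the text; the function names and the cohort figures are hypothetical and are not taken from any reviewed study.

```python
def events_per_predictor(n_events: int, n_predictors: int) -> float:
    """Ratio of outcome events to candidate predictors."""
    if n_events < 0 or n_predictors <= 0:
        raise ValueError("n_events must be >= 0 and n_predictors must be > 0")
    return n_events / n_predictors


def meets_threshold(n_events: int, n_predictors: int, threshold: float = 7.0) -> bool:
    """True when the ratio reaches the chosen threshold (7 is the value quoted in the text)."""
    return events_per_predictor(n_events, n_predictors) >= threshold


# Hypothetical derivation cohort: 600 children, 18% with the outcome,
# and 16 candidate predictors.
n_events = round(600 * 0.18)                 # 108 events
print(events_per_predictor(n_events, 16))    # 6.75
print(meets_threshold(n_events, 16))         # False
```

In this hypothetical cohort, either enrolling more children or reducing the number of candidate predictors to 15 or fewer would bring the ratio above the threshold.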

Several limitations must be addressed. First, our review is not a systematic and exhaustive review of all CPRs published in pediatric clinical practice. An electronic search would have missed articles, given that CPRs did not have clear MeSH terms in electronic databases. However, we limited our manual search to the main English-language general journals and high-impact-factor pediatric journals. This might have introduced a selection bias by including only “the best” CPRs, thereby overestimating the methodological quality of pediatric CPRs and missing methodological flaws. To estimate this bias, our results were compared with those of Maguire et al., who performed a systematic search for all pediatric CPRs ever published, and we did not find any significant difference. Selection bias may therefore not be as strong as might be thought. Second, all CPRs reviewed were from pediatric journals, and most often from ‘Pediatrics’ (9/12 [11, 12, 15-21]), which did not allow us to compare the methodological quality of CPRs (i) between general and pediatric journals, and (ii) among pediatric journals. Third, the comparison between a series of adult CPRs published and reviewed in 1997 and pediatric CPRs published in 2006 might have favored the pediatric ones because they were more recent. However, the adult CPR field was older when it was reviewed, as it started earlier (1980s) than the pediatric one (mid-1990s) (17 years compared with 10 years).

To conclude, there is both a need and an opportunity for high-performance, methodologically robust, and validated CPRs in pediatrics, in order to improve child health outcomes, facilitate daily practice, and limit useless procedures and treatments. However, we identified several methodological drawbacks in CPR derivation that, once corrected, will help their development and implementation in clinical practice.

Acknowledgements

We gratefully thank Philip Karanja, University of Lancaster, and Prof Gérard Bréart from U953 INSERM, Paris, France for helpful comments.

Funding

Sandrine Leroy was supported by a grant from the French Society of Nephrology and by la Fondation pour la Recherche Médicale.

Conflict of interest

The author declares that she has no conflict of interest.

References

1. Laupacis, A., Sekar, N. & Stiell, I. G. (1997). “Clinical Prediction Rules. A Review and Suggested Modifications of Methodological Standards,” JAMA, 277:488-94.

2. McGinn, T. G., Guyatt, G. H., Wyer, P. C., Naylor, C. D., Stiell, I. G. & Richardson, W. S. (2000). “Users’ Guides to the Medical Literature: XXII: How to Use Articles about Clinical Decision Rules. Evidence-Based Medicine Working Group,” JAMA, 284:79-84.

3. Stiell, I. G. & Wells, G. A. (1999). “Methodologic Standards for the Development of Clinical Decision Rules in Emergency Medicine,” Annals of Emergency Medicine, 33:437-47.

4. Wasson, J. H., Sox, H. C., Neff, R. K. & Goldman, L. (1985). “Clinical Prediction Rules. Applications and Methodological Standards,” The New England Journal of Medicine, 313:793-9.

5. Stiell, I. G., Greenberg, G. H., McKnight, R. D., Nair, R. C., McDowell, I. & Worthington, J. R. (1992). “A Study to Develop Clinical Decision Rules for the Use of Radiography in Acute Ankle Injuries,” Annals of Emergency Medicine, 21:384-90.

6. Maguire, J. L., Kulik, D. M., Laupacis, A., Kuppermann, N., Uleryk, E. M. & Parkin, P. C. (2011). “Clinical Prediction Rules for Children: A Systematic Review,” Pediatrics, 128:E666-77.

7. Moher, D., Liberati, A., Tetzlaff, J. & Altman, D. G.; PRISMA Group (2009). “Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement,” BMJ, 339:B2535.

8. Leroy, S. (2011). ‘Construction et Validation des Règles de Décision Clinique en Pédiatrie,’ Südwestdeutscher Verlag für Hochschulschriften AG & Co. KG.

9. Concato, J., Feinstein, A. R. & Holford, T. R. (1993). “The Risk of Determining Risk with Multivariable Models,” Annals of Internal Medicine, 118:201-10.

10. Ambalavanan, N., Baibergenova, A., Carlo, W. A., Saigal, S., Schmidt, B. & Thorpe, K. E. (2006). “Early Prediction of Poor Outcome in Extremely Low Birth Weight Infants by Classification Tree Analysis,” The Journal of Pediatrics, 148:438-44.

11. Ambalavanan, N., Carlo, W. A., Shankaran, S. et al. (2006). “Predicting Outcomes of Neonates Diagnosed with Hypoxemic-Ischemic Encephalopathy,” Pediatrics, 118:2084-93.

12. Avery, R. A., Frank, G., Glutting, J. J. & Eppes, S. C. (2006). “Prediction of Lyme Meningitis in Children from a Lyme Disease-Endemic Region: A Logistic-Regression Model Using History, Physical, and Laboratory Findings,” Pediatrics, 117:E1-7.

13. Dunning, J., Daly, J. P., Lomas, J. P., Lecky, F., Batchelor, J. & Mackway-Jones, K. (2006). “Derivation of the Children’s Head Injury Algorithm for the Prediction of Important Clinical Events Decision Rule for Head Injury in Children,” Archives of Disease in Childhood, 91:885-91.

14. Egami, K., Muta, H., Ishii, M. et al. (2006). “Prediction of Resistance to Intravenous Immunoglobulin Treatment in Patients with Kawasaki Disease,” The Journal of Pediatrics, 149:237-40.

15. Fu, L. Y., Ruthazer, R., Wilson, I. et al. (2006). “Brief Hospitalization and Pulse Oximetry for Predicting Amoxicillin Treatment Failure in Children with Severe Pneumonia,” Pediatrics, 118:E1822-30.

16. Jones, O. Y., Spencer, C. H., Bowyer, S. L., Dent, P. B., Gottlieb, B. S. & Rabinovich, C. E. (2006). “A Multicenter Case-Control Study on Predictive Factors Distinguishing Childhood Leukemia from Juvenile Rheumatoid Arthritis,” Pediatrics, 117:E840-4.

17. Marais, B. J., Gie, R. P., Hesseling, A. C. et al. (2006). “A Refined Symptom-Based Approach to Diagnose Pulmonary Tuberculosis in Children,” Pediatrics, 118:E1350-9.

18. Mikaeloff, Y., Caridade, G., Assi, S., Suissa, S. & Tardieu, M. (2006). “Prognostic Factors for Early Severity in a Childhood Multiple Sclerosis Cohort,” Pediatrics, 118:1133-9.

19. Romano, E., Tremblay, R. E., Farhat, A. & Cote, S. (2006). “Development and Prediction of Hyperactive Symptoms from 2 to 7 Years in a Population-Based Sample,” Pediatrics, 117:2101-10.

20. Smeesters, P. R., Campos, D., Jr., Van Melderen, L., De Aguiar, E., Vanderpas, J. & Vergison, A. (2006). “Pharyngitis in Low-Resources Settings: A Pragmatic Clinical Approach to Reduce Unnecessary Antibiotic Use,” Pediatrics, 118:E1607-11.

21. Smith, G. C. S. & White, I. R. (2006). “Predicting the Risk for Sudden Infant Death Syndrome from Obstetric Characteristics: A Retrospective Cohort Study of 505,011 Live Births,” Pediatrics, 117:60-6.

22. Fermanian, J. (1984). “Measurement of Agreement between 2 Judges. Qualitative Cases,” Revue D’épidémiologie et de Santé Publique, 32:140-7.

23. Fermanian, J. (1984). “Measuring Agreement between 2 Observers: A Quantitative Case,” Revue D’épidémiologie et de Santé Publique, 32:408-13.

24. Whiting, P., Rutjes, A. W., Reitsma, J. B., Bossuyt, P. M. & Kleijnen, J. (2003). “The Development of QUADAS: A Tool for the Quality Assessment of Studies of Diagnostic Accuracy Included in Systematic Reviews,” BMC Medical Research Methodology, 3:1-13.

25. Bossuyt, P. M., Reitsma, J. B., Bruns, D. E. et al. (2003). “Towards Complete and Accurate Reporting of Studies of Diagnostic Accuracy: The STARD Initiative,” BMJ, 326:41-4.

26. Reilly, B. M. & Evans, A. T. (2006). “Translating Clinical Research into Clinical Practice: Impact of Using Prediction Rules to Make Decisions,” Annals of Internal Medicine, 144:201-9.

27. Vittinghoff, E. & McCulloch, C. E. (2007). “Relaxing the Rule of Ten Events per Variable in Logistic and Cox Regression,” American Journal of Epidemiology, 165:710-8.
