Introduction

Over the past few years, the use of online social networks has become particularly commonplace in the tourism sector. These days, TripAdvisor is used as a major tool in helping select a tourist destination or activity. As highlighted by Paquerot et al. (2011), tourism is an intangible and experiential proposition whose physical attributes (atmosphere, hospitality, etc.) are difficult to rate. Thus, reliance on the opinion of other consumers has become more and more common when preparing a trip or visit to a tourist attraction. In fact, the number of people consulting online review platforms when selecting a tourist destination is growing (Buhalis and Law, 2008). According to Filieri et al. (2015), 200 million travellers will consult platforms like TripAdvisor when planning a visit to a specific destination (travel, restaurant, hotel, etc.). Thus, better understanding the way these online ratings are perceived, and the way they impact behaviour, is an important issue for many tourist and cultural organisations, whose e-reputation may be affected by this type of platform (Hennig-Thurau et al. 2004 in Filieri et al. 2015).

This issue is even more important for tourist and cultural attractions located in areas that have a large number of historical attractions. This is true for the Loire Valley with its châteaus (e.g. Domaine National de Chambord), gardens (e.g. Villandry), museums (e.g. Musée des Beaux-Arts), and theme parks (e.g. Zoo de Beauval). In such cases, a tourist will use a review platform before (to prepare the trip) and after his/her visit (Xiang and Gretzel, 2010).

A large number of marketing studies examine these online reviews. But oddly, the majority focus on the qualitative dimension of the reviews and primarily look at the written comments posted by Internet users. Some researchers even see an opportunity to carry out netnographic studies (e.g. Bédé and Massa, 2017). Other studies aim to better understand the strategies of hotels and tourist attractions when handling comments, and their relationship to users (generally also developing comprehensive qualitative approaches).

The purpose of this project is to approach the phenomenon differently by addressing a resolutely positivist and relatively simple issue: is there a significant link between (virtual and actual) visits to a tourist attraction and the number of “quantitative” reviews it receives on TripAdvisor?

In our view, this issue merits particular attention for two main reasons. The first is that these reviews, which correspond to a 5-point Likert scale, are immediately visible on a TripAdvisor page (cf. inset 1), whereas accessing written comments involves scrolling down the page and careful reading.

The second is that the perceived credibility and quality of reviews rely, in part, on the number of reviews, so that it is easier to exclude what Munzel (2015) refers to as “deceptive opinion spam”. There are more scale-type ratings than written comments, which makes it possible to verify whether there is a correlation between the TripAdvisor score and the number of physical visits to a site, irrespective of the number of reviews.

Example of a TripAdvisor page

In order to address the issue at the heart of this paper, the first part of the literature review focuses on how online reviews are perceived. In the second part we put forward the main consequences associated with the existence of these online platforms, as studied within the tourism and marketing sectors. Based on the literature review, the research hypotheses are formulated and then tested empirically. The quantitative study is based on reviews of 104 tourist attractions (with over 10,000 annual visitors), the Loire Valley region’s main attractions. All the information relating to visits to the attractions and their web pages is set out in the methodology section. Finally, the results show that the quantitative reviews that appear on TripAdvisor have very little influence on the number of physical and virtual visits to the major tourist and cultural attractions.

Literature Review

Perceptions of online ratings

The main perceived characteristics of online ratings are:

- The content’s perceived trustworthiness (Mauri and Minazzi, 2013; Xu, 2014; Casaló et al. 2015)

- The content’s perceived usefulness (Casaló et al. 2011, 2015)

- A reduction in the perceived risk of decision-making (Gretzel, Yoo and Purifoy, 2007)

Perceived trustworthiness is a concept that can be understood in different ways and that depends more on individual judgement than on the inherent characteristics of a source (e.g. trust, authenticity, transparency, competence, integrity; Gurviez and Korchia, 2002; Johnson and Kaye, 2009). It essentially relies on written comments, especially on reviewer expertise (e.g. Racherla and Friske, 2012) and on readability (Korfiatis, García-Bariocanal and Sánchez-Alonso, 2012). It therefore does not appear to be related to metric evaluations, even though a high rating may lead the Internet user to place more trust in the ratings.

Perceived usefulness is also a subjective assessment as it relates to attitude (positive or negative valence, and greater or lesser intensity) based on how the cost-benefit ratio of a product or service’s use is perceived (e.g. Davis, 1989). Thus, the issues raised in written comments may provide information sought by travellers, whereas metric evaluations simply serve to confirm or overturn the overall expected quality of a service provided (reception, hotel, etc.).

Finally, the decision to book an unknown destination or to visit a cultural site involves the idea of reducing the risk perception associated with this decision (Gretzel, Yoo and Purifoy, 2007). In fact, individuals naturally seek to identify the positive and negative aspects of a destination they plan to visit (Chen and Uysal, 2002); “Online consumer reviews can be considered a form of e-WOM” (Hennig-Thurau et al. 2004 in Filieri et al. 2015).

Further, the online reviews viewed on official websites (e.g. TripAdvisor) are perceived as being:

- More trustworthy than reviews posted directly on organisation sites (e.g. Bansal and Voyer, 2000; Casaló et al. 2015);

- More trustworthy than travel agents and mass media (e.g. Dickinger, 2011)

These official platforms also offer the advantage of standardising the featured measures and their presentation: it is therefore easier for an individual to compare two or more destinations. Of course, the trust placed in reviews applies essentially to written comments. On this subject, note that Plotkina, Munzel and Pallud (2017) have developed an algorithm that achieves an 81% detection rate for fake reviews. Such an application makes it possible to limit fraudulent practices in which, for example, hotel managers encourage employees to post negative reviews on competitor sites (Filieri et al., 2015).

In a complementary way, other studies have examined the profile of the reviewer (e.g. Lee et al., 2011) and show that those who post the most opinions on review platforms are those who travel the most and who evaluate destinations the most negatively, regardless of gender or age.

For Filieri et al. (2015), who recommend a global approach to the phenomenon, in the end, the main factors that positively influence trust in TripAdvisor are:

- Website quality (hyperlinks, functions, page loading speed),

- Information quality (timely, relevant, complete, valuable, useful),

- And customer satisfaction (overall satisfaction in using TripAdvisor).

With respect to metric evaluations, the only measures that Internet users can use to assess their quality are:

- The number of reviews (the higher the number of reviews, the more reliable the information seems)

- Consistency in the valence of opinions (Racherla and Friske, 2012; Wu, 2013), because responses distributed evenly across all scores (“Excellent”/“Very good”/“Average”/“Poor”/“Terrible”) reflect inconsistency in the quality of service delivered.

Now that the elements relating to how these platforms are perceived have been presented, let us examine the impact of online consumer reviews.

Influences of online ratings

The consequences of visiting online review platforms are numerous and have been the subject of many studies. Filieri et al. (2015) very succinctly summarise the idea by stating: “online consumer reviews influence consumer decisions of where to go on holiday, which accommodation to book, and once there, which attractions to visit and where to go to eat (e.g. Dickinger, 2011; Sparks, Perkins and Buckley, 2013)”.

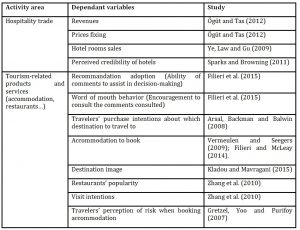

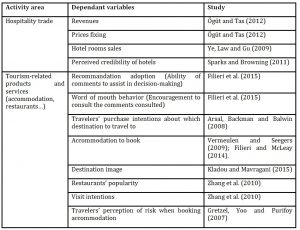

The main studies on the subject within the tourism sector (table 1) have focused on two main approaches:

- An approach focused on the organisation, known as “Hospitality trade”,

- An approach focused on individuals, known as “Tourism-related products and services”

Table 1: Examples of the Impact of Online Consumer Reviews

Studies that examine consumer behaviour have shown the extent to which the perception of online consumer reviews influences the intention to follow the recommendations shown on the platform, the intention to encourage loved ones to consult consumer reviews on it, and travellers’ purchases. Beerli and Martin (2004) have also shown that a destination’s image may suffer as a consequence of negative word of mouth, particularly when it is a result of bad reviews on dedicated platforms. Studies that have focused on analysing organisations identify a positive correlation between good reviews on TripAdvisor on the one hand, and business turnover, high prices and the hotel’s image on the other.

However, it is important to specify that these results have been acquired through two types of data collection:

- One involves the recoding of written comments. The influence of key words on, for example, a destination’s perceived image is thereby tested (e.g. Kladou and Mavragani, 2015). In this study, these key words were analysed by category: cognitive, affective and conative vs. cultural environment, natural environment, atmosphere, infrastructure and socioeconomic environment, whilst also studying the positive/negative valence of the key words used.

- The other consists of measuring the attitudes and perceptions of a sample group of consumers in relation to reviews on a tourist website (e.g. Filieri, Alguezaui and McLeay, 2015). This type of data collection is carried out using a questionnaire and is based on psychometrics (survey study).

These two types of study have the advantage of being closely focused on consumer opinion, based on the body of information generated on platforms or on declared data. However, they do not systematically take into account respondents’ motives (e.g. family travel, travel as a couple, travel for work) that may sometimes affect the choice of destination (Banerjee and Chua, 2016). These motives may equally lie behind differences in how an attribute that is outside the searcher’s control is perceived. For example, a restaurant’s design may be the source of an important cognitive distortion for a couple celebrating a wedding anniversary – something that might have little importance to a busy businessman. Further, as is often true for many studies, these data are subject to a declared bias (e.g. social desirability within a survey), or an interpretation bias during the coding stage.

Another option involves using only observable data, such as the destination rating scores that appear on platforms (on the scale “Excellent”/“Very good”/“Average”/“Poor”/“Terrible” and presented in table 1). As far as we are aware, no study within the tourism sector has used this type of variable to test impact on visits to an attraction. These data may, in fact, appear superficial as the qualitative assessment has greater meaning in terms of experiential feedback. However, these ratings are the first visible measure seen by individuals visiting platforms such as TripAdvisor.

For these reasons, and based on the literature review set out above, two working hypotheses may be formulated.

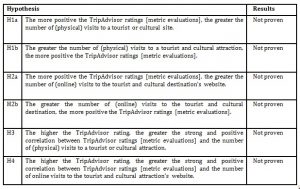

As ratings that appear on sites such as TripAdvisor engender cognitive and conative responses (intention to travel, increased popularity of a destination; cf. table 1), we put forward the following hypotheses:

H1a: The more positive the TripAdvisor metric evaluations, the greater the number of physical visits to a tourist and cultural destination.

H1b: The more physical visitors a tourist and cultural destination has, the more positive the metric evaluations featuring on TripAdvisor.

H2a: The more positive the TripAdvisor metric evaluations, the greater the number of online visits to a tourist and cultural attraction.

H2b: The greater the number of online visits a tourist and cultural destination has, the more positive the metric evaluations featuring on TripAdvisor.

In parallel, and because trust plays a determining role in the perceived credibility of TripAdvisor ratings, we put forward the following hypotheses:

H3: The higher the TripAdvisor rating, the stronger the positive correlation between the metric evaluations appearing on TripAdvisor and the physical visits to a tourist and cultural destination.

H4: The higher the TripAdvisor rating, the stronger the positive correlation between the metric evaluations appearing on TripAdvisor and the number of visits to the tourist and cultural destination website.

To test these hypotheses, it is therefore necessary to have access to the tourist or cultural destination ratings that appear on TripAdvisor, the number of visitors to the attraction, and information relating to visits to its website.

Research Methodology

Recognised as a World Heritage Site by UNESCO in 2000, the Loire Valley received over 9 million visitors to its tourist attractions during 2016. This empirical study is based on an analysis of the 124 top tourist attractions of the Loire Valley that have over 10,000 visitors per year and whose data are not withheld. This includes:

- The Loire châteaus (Chinon, Chambord, Loches, etc.)

- Museums (de Sologne, de céramique contemporaine, Rabelais, etc.)

- Gardens (Parc floral d’Apremont, the gardens and parks of Sasnières, etc.)

- Other sites of touristic interest (Wildlife conservatory, the cellars of Vouvray producers)

- Cultural events that involve consumer participation (Game Fair in the Loir-et-Cher, Adventure Park, etc.)

- Cultural events where the consumer is a spectator (Les Printemps de Bourges, Musikenfete, etc.).

For the 124 selected sites, analyses necessarily focus on those that have an independent website and a TripAdvisor profile. For each of these sites, three types of data were collected:

- The number of actual entries to the tourist attraction during 2016 (with no distinction made between free and paid entries) and a measure of the level of appeal that the establishment brings to the region

- Measures showing the online browsing experience for the website being analysed (average browsing time, number of pages viewed, etc.)

- The distribution of TripAdvisor ratings (ranging from “Horrible” to “Excellent”), expressed as percentages and used to calculate an e-reputation score (simple weighting = 1 × %horrible + 2 × %mediocre + 3 × %average + 4 × %very good + 5 × %excellent). This score therefore neutralises the overall number of reviews given (most often correlated to the number of site visits) and exclusively reflects the distribution of reviews (the higher the score, the better the site’s rating). The tourist and cultural attraction’s average score as it appears on TripAdvisor (“Overview” tab) was also recorded.
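As a minimal sketch of the simple weighting described above, the following Python function computes an e-reputation score from raw rating counts. The function name, the dictionary keys and the sample counts are illustrative assumptions rather than the tooling used in the study; shares are expressed here as fractions, so the score falls between 1 and 5.

```python
# Weights follow the simple weighting given in the text
# (1 = horrible ... 5 = excellent). Key names are hypothetical.
WEIGHTS = {"horrible": 1, "mediocre": 2, "average": 3, "very_good": 4, "excellent": 5}

def e_reputation_score(counts):
    """Return the weighted mean rating from a dict of rating counts.

    Counts are converted to shares first, so the result depends only on
    the distribution of ratings, not on how many ratings were posted.
    """
    total = sum(counts.get(label, 0) for label in WEIGHTS)
    if total <= 0:
        raise ValueError("at least one rating is required")
    return sum(w * counts.get(label, 0) / total for label, w in WEIGHTS.items())

# Two hypothetical sites: same distribution, very different volumes.
small_site = {"horrible": 1, "mediocre": 1, "average": 2, "very_good": 6, "excellent": 10}
large_site = {label: n * 50 for label, n in small_site.items()}

print(round(e_reputation_score(small_site), 2))
print(round(e_reputation_score(large_site), 2))
```

Both hypothetical sites obtain the same score (4.15) although one has fifty times more ratings, which illustrates why the score reflects the distribution of reviews only.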

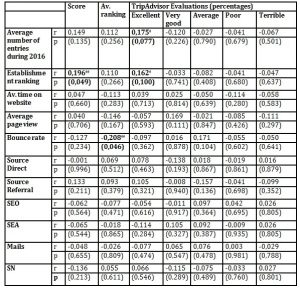

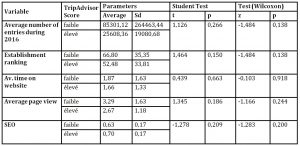

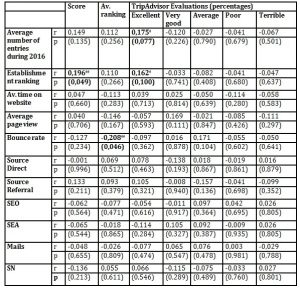

These measures and the population studied are presented in table 2.

Given the nature of the data, the approach to data processing is relatively straightforward: correlation analysis (Pearson), analysis of variance (Fisher), comparison of means (Student) and a non-parametric test (Wilcoxon). The threshold used to test the hypotheses is p ≤ 10%, as the population studied is limited (N=124).
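The correlation step can be sketched as follows, using only the Python standard library. The data are invented for illustration, the function names are hypothetical, and the p-value relies on a normal approximation instead of the exact Student distribution, so it should be read as a rough guide for large samples and not as the procedure actually used in the study.

```python
import math
from statistics import NormalDist, mean

ALPHA = 0.10  # significance threshold stated in the text

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length (at least 3 values)")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def approx_two_sided_p(r, n):
    """Approximate two-sided p-value for the null hypothesis of no correlation.

    Computes t = r * sqrt((n - 2) / (1 - r^2)) and, as a simplification,
    evaluates it against the standard normal distribution rather than
    Student's t with n - 2 degrees of freedom.
    """
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return 2 * (1 - NormalDist().cdf(abs(t)))

# Hypothetical data: e-reputation scores and annual entries for six sites.
scores = [3.9, 4.1, 4.3, 4.4, 4.6, 4.7]
entries = [12000, 45000, 30000, 150000, 80000, 400000]

r = pearson_r(scores, entries)
p = approx_two_sided_p(r, len(scores))
print(f"r = {r:.3f}, approximate p = {p:.3f}, significant at 10%: {p <= ALPHA}")
```

With only six invented observations the approximation is crude; a statistics package that provides the exact t distribution would be preferable for real data.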

Table 2: Sample characteristics and main measures

The differences observed between the types of tourist attraction studied are significant for the number of physical visits during 2016 (F = 2.20; p = .057), the average time spent on a site’s Internet page (F = 4.993; p < .001) and the number of web pages consulted (F = 2.792; p = .021). For all other measures, the differences found are not significant (.289 < p < .978). With respect to the number of physical visits, the distribution within these groups varies enormously. This is explained by the presence of regional catalysts (e.g. Beauval Zoo registered 1,350,000 entries during 2016, and the Domaine National de Chambord had 728,133 visitors). These disparities between groups do not introduce any particular bias as the groups are not processed in isolation (the purpose is not to compare different types of tourist attractions).

Results

Link between TripAdvisor Ratings and Behavioural Responses

With respect to the physical appeal of the Loire Valley tourist and cultural attractions, there is a significant (p = .077), weak and positive (r = .175) correlation between the number of “Excellent” ratings and the number of visits to an attraction. However, the TripAdvisor e-reputation score, the average score, and the percentages relating to other scores do not correlate with the number of physical visits to an attraction (table 3). Establishment ranking, which balances out the heterogeneous deviations between each unit of analysis, has a significant (p = .049) but weak positive (r = .196) correlation with the TripAdvisor e-reputation score, and with the ratio of “Excellent” scores, but in a less conclusive way (r = .162; p = .100). Given these relatively weak correlations (.162 ≤ r ≤ .196) and the associated significance levels (roughly 5% to 10%), it is at this stage difficult to confirm or refute hypotheses H1a and H1b, particularly as the number of visits to tourist and cultural attractions does not correlate with the e-reputation score (p = .135) nor with the average score shown on TripAdvisor (p = .256).

Neither do the scores shown on TripAdvisor correlate with the different measures used in this study to assess Internet user activity on tourist and cultural site web pages. This is particularly true of the variables “Average browsing time” and “Number of pages visited”, which are the best measures of the appeal of the web pages examined in this study. Only the bounce rate (the percentage of visitors who leave the page as soon as they land) shows a weak and negative correlation (r = -.208; p = .046) with the average rating shown on the TripAdvisor site. Therefore, hypotheses H2a and H2b are not proven.

Table 3: Correlation matrix

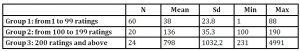

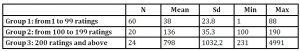

Impact of Ratings’ Perceived Trustworthiness

In order to study the impact of TripAdvisor online ratings’ perceived trustworthiness, three groups were formed (table 4). The first includes destinations that have fewer than 100 ratings, the second includes those that have between 100 and 200 ratings, and the third is made up of destinations that have over 200 ratings. These thresholds were set arbitrarily, in tiers of 100. Variance within the last group is much higher than in the preceding two groups, but the number of attractions in the last group did not make it possible for us to separate them into more homogeneous subgroups.
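The grouping rule above can be sketched as a small Python helper. The function names, group labels and sample sites are hypothetical, and the treatment of the boundary values (exactly 100 or exactly 200 ratings fall in the middle group) is an assumption drawn from the wording of the thresholds.

```python
from collections import defaultdict

def rating_volume_group(n_ratings):
    """Assign a site to one of three groups by its number of ratings."""
    if n_ratings < 0:
        raise ValueError("the number of ratings cannot be negative")
    if n_ratings < 100:
        return "fewer than 100"
    if n_ratings <= 200:
        return "100 to 200"
    return "more than 200"

def group_sites(ratings_by_site):
    """Return a dict mapping each group label to the list of site names in it."""
    groups = defaultdict(list)
    for site, n_ratings in ratings_by_site.items():
        groups[rating_volume_group(n_ratings)].append(site)
    return dict(groups)

# Hypothetical rating counts for four unnamed sites.
example = {"site A": 42, "site B": 100, "site C": 200, "site D": 3150}
print(group_sites(example))
```

Correlations can then be computed separately within each group, which is what makes the much higher variance of the last group visible.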

Table 4: Description of the TripAdvisor groups

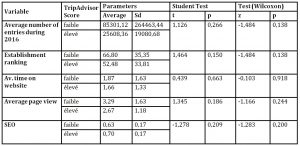

The measures relating to visits to tourist and cultural attractions (number of entries during 2016 and the establishment ranking) do not correlate with the TripAdvisor metric evaluations (table 5). Only the “Establishment ranking” indicator correlates significantly (r = .870; p < .001). Thus, we are able to conclude that the number of TripAdvisor ratings has no impact on the correlation between the number of visits to a tourist and cultural attraction, and the quality of the TripAdvisor metric evaluations.

Table 5: Correlation matrix by group

In order to check the validity of these results, we tested their reciprocity. The purpose is to find out whether the tourist and cultural attractions that received positive ratings on TripAdvisor are visited more often than those that received less favourable results.

Comparison between TripAdvisor scores

Two groups were formed based on e-reputation scores. The “High TripAdvisor Score” group is made up of the 25 tourist and cultural attractions that received the best ratings, and the “Low TripAdvisor Score” group is made up of the 25 sites that received the least satisfactory ratings (the other sites were excluded from this analysis in order to maximise the variance observed between these two groups).

The comparison between these two groups shows that there is no significant difference in the number of visitors during 2016 [(t) p = .266; (W) p = .138], the establishment ranking [(t) p = .150; (W) p = .138], average browsing time [(t) p = .439; (W) p = .918], the number of pages visited [(t) p = .186; (W) p = .244], or the natural standard [(t) p = .209; (W) p = .200], where (t) denotes Student’s t-test and (W) the Wilcoxon test.
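The extreme-groups design and the two families of test it feeds can be sketched as follows. This is an illustration under stated assumptions: the function names and the `score` field are hypothetical, the parametric statistic is Student’s pooled-variance t, and the rank-based statistic is the Mann-Whitney U, which is equivalent to the Wilcoxon rank-sum statistic for two independent groups. Only the test statistics are computed, not their p-values.

```python
import math
from statistics import mean, variance

def extreme_groups(sites, k):
    """Return (low, high): the k lowest- and k highest-scoring sites.

    `sites` is a list of dicts with a numeric "score" key (hypothetical
    layout). Sites in the middle of the ranking are left out.
    """
    if 2 * k > len(sites):
        raise ValueError("groups would overlap: need at least 2 * k sites")
    ranked = sorted(sites, key=lambda s: s["score"])
    return ranked[:k], ranked[-k:]

def student_t(a, b):
    """Student's t statistic for two independent samples (pooled variance)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))

def mann_whitney_u(a, b):
    """U statistic for sample a: number of pairs with a-value above b-value.

    Tied pairs count for one half.
    """
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)

# Invented visitor counts; the second comparison adds one extreme value.
low = [1, 2, 3]
high = [4, 5, 6]
high_with_outlier = [4, 5, 600]

print(student_t(low, high), mann_whitney_u(low, high))
print(student_t(low, high_with_outlier), mann_whitney_u(low, high_with_outlier))
```

In this invented example the rank-based statistic is unchanged by the extreme value while the t statistic moves substantially, which is the reason for reporting both kinds of test when a few attractions attract far more visitors than the rest.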

Table 6: Low TripAdvisor Score (N=25) or High TripAdvisor Score (N=25)

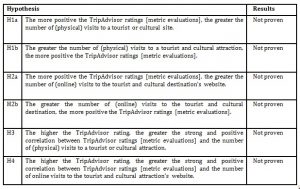

These results therefore make it possible to ascertain that none of the hypotheses set out in the literature review have been proven (table 7).

Table 7: Results summary

Discussion and Conclusion

The main contribution of this study is to show that there is no correlation between TripAdvisor ratings and visits to a tourist and cultural attraction. However, these results are based on the context studied and the choice of data analysed.

With respect to the context, this study exclusively examines the major tourist and cultural attractions of the Loire Valley, with an annual number of visits exceeding 10,000. These are must-see regional sites and it is likely that TripAdvisor ratings have little impact on the planning of visits, compared to the choice of restaurant or hotel examined in other studies (e.g. Ögüt and Tas, 2012; Filieri and McLeay, 2014). Therefore, this is a limitation as well as a managerial contribution because, as far as we are aware, this type of establishment has not been specifically studied in the past. New studies could extend the population of sites studied to those with fewer visitors, but access conditions and the number of annual visitors are harder to ascertain as they are either not listed or not shared.

As stated by Banerjee and Chua (2016), it is also worth taking into account the motives of individuals when they consult online reviews. In the case of organised tours (such as those that exist for the Loire Valley) and group travel, it is easy to imagine that consulting reviews on TripAdvisor has a negligible effect on the decision whether or not to visit a site.

The decision to exclusively study scaled ratings such as those found on TripAdvisor may also be seen as a limitation. The scope of this study in fact excludes the analysis of written comments, which has already been carried out in many other studies (e.g. Kladou and Mavragani, 2015). Studies that focus exclusively on ratings that are quantitative and properly validated on TripAdvisor are rarer. The results of this study suggest that written comments largely correlate with an individual’s cognitive and behavioural responses (cf. results of studies presented in the literature review), unlike the quantitative ratings that appear on platforms such as TripAdvisor. To support this finding, it would however be worth including a new variable taken from the recoding of written comments for the sites studied, in order to study the effect of written comments and quantitative ratings simultaneously. Producing such data would enable a more refined analysis of the phenomenon studied, because Fong et al. (2016) identify an asymmetry in hotel ratings on TripAdvisor: “An extreme rating (e.g. “excellent” or “terrible”) may also be associated with reviews featuring both positive and negative comments”. An examination of the level of this asymmetry would, for example, make it possible to better explain why our hypotheses were not proven by this study. In this case, it would be necessary to use an algorithm such as the one developed by Plotkina, Munzel and Pallud (2017) in order to eliminate the fake reviews found in the written comments of online reviews.

References

- Arsal, I., Backman, S., and Baldwin, E. (2008). ‘Influence of an online travel community on travel decisions’. In P. O’Connor, W. Hopken, & U. Gretzel (Eds.), Information and communication technologies in tourism 2008 (pp. 82-93). Vienna, Austria: Springer-Verlag.

- Banerjee S. and Chua A.Y.K. (2016), ‘In search of patterns among travellers’ hotel ratings in TripAdvisor’, Tourism Management, 53, 125-131.

- Bansal H.S. and Voyer P.A. (2000), ‘Word-of-mouth processes within a services purchase decision context’, Journal of Service Research, 3(2), 166-177.

- Bédé S. and Massa C. (2017), ‘Oenotourisme et consommation : une netnographie pour déterminer les éléments clés de l’expérience de visite d’une cave’, Proceedings of the Association Française du Marketing Congress, Tours.

- Beerli, A., and Martin, J.D. (2004), ‘Factors influencing destination image’, Annals of Tourism Research, 31(3), 657–681.

- Buhalis, D., and Law, R. (2008), ‘Progress in information technology and tourism management: 20 years on and 10 years after the internet – The state of eTourism research’, Tourism Management, 29(4), 609-623.

- Casaló, L.V., Flavián, C., and Guinalíu, M. (2011), ‘Understanding the intention to follow the advice obtained in an online travel community’, Computers in Human Behavior, 27, 622–633.

- Casaló L.V., Flavián C., Guinalíu M., and Ekinci Y. (2015), ‘Do online hotel rating schemes influence booking behaviors?’, International Journal of Hospitality Management, 49, 28-36.

- Chen, J.S., and Uysal, M. (2002), ‘Market positioning analysis: A hybrid approach’, Annals of Tourism Research, 29(4), 987–1003.

- Davis, F., (1989), ‘Perceived usefulness, perceived ease of use, and user acceptance of information technology’, MIS Quarterly, 13 (3), 319–340.

- Dickinger, A. (2011), ‘The trustworthiness of online channels for experience- and goal-directed search tasks’, Journal of Travel Research, 50(4), 378-391.

- Filieri R., Alguezaui S. and McLeay F. (2015), ‘Why do travelers trust TripAdvisor? Antecedents of trust towards consumer-generated media and its influence on recommendation adoption and word of mouth’, Tourism Management, 51, 174-185.

- Filieri, R., and McLeay, F. (2014), ‘E-WOM and accommodation: an analysis of the factors that influence travelers’ adoption of information from online reviews’, Journal of Travel Research, 53(1), 44-57.

- Fong L.H.N., Lei S.S.L. and Law R. (2016), ‘Asymmetry of Hotel Ratings on TripAdvisor: Evidence from Single- Versus Dual-Valence Reviews’, Journal of Hospitality Marketing & Management, 26(1), 67-82.

- Gretzel, U, Fesenmaier, D, Formica, S, and O’Leary, J, (2006), ‘Searching for the future: Challenges faced by destination marketing organizations’, Journal of Travel Research, 45(4), 116–126.

- Gretzel, U., Yoo, K. H., and Purifoy, M. (2007), ’Online travel review study: Role and impact of online travel reviews’. Austin, TX: Laboratory for Intelligent Systems in Tourism, Texas A&M University.

- Gurviez P. and Korchia M. (2002), ‘Proposition d’une échelle de mesure multidimensionnelle de la confiance dans la marque’, Recherche et Applications en Marketing, 17 (3), 41-61.

- Hennig-Thurau, T., Gwinner, K. P., Walsh, G., and Gremler, D. D. (2004), ‘Electronic word of mouth via consumer-opinion platforms: what motivates consumers to articulate themselves on the internet?’, Journal of Interactive Marketing, 18(1), 38-52.

- Johnson, T.J., and Kaye, B.K. (2009), ‘In blogs we trust? Deciphering credibility of components of the internet among politically interested internet users’. Computers in Human Behavior. 25, 175–182.

- Kladou S. and Mavragani E. (2015), ‘Assessing destination image: An online marketing approach and the case of TripAdvisor’, Journal of Destination Marketing & Management, 4, 187-193.

- Korfiatis, N., García-Bariocanal, E., and Sánchez-Alonso, S. (2012), ‘Evaluating content quality and helpfulness of online product reviews: The interplay of review helpfulness vs. review content’, Electronic Commerce Research and Applications, 11(3), 205-217.

- Kozinets, R, (1999), ‘E-tribalized Marketing?: The strategy implications of virtual communities of consumptions’. European Management Journal, 17(3), 252-264.

- Lee H.A., Law R., and Murphy J. (2011), ‘Helpful reviewers in TripAdvisor: an online community’, Journal of Travel & Tourism Marketing, 28, 675-688.

- Mauri, A. and Minazzi, R. (2013), ‘Web reviews influence on expectations and purchasing intentions of hotel potential customers’, International Journal of Hospitality Management, 34, 99–107.

- Mayzlin D., Dover Y. and Chevalier J. (2014), ‘Promotional Reviews: An Empirical Investigation of Online Review Manipulation’, American Economic Review, 104(8), 2421–2455.

- Miguens J., Baggio R. and Costa C. (2008), ‘Social media and tourism destination: TripAdvisor case study’, Advances in Tourism Research, Aveiro, Portugal.

- Munzel A. (2015), ‘Malicious practice of fake reviews: Experimental insight into the potential of contextual indicators in assisting consumers to detect deceptive opinion spam’, Recherche et Applications en Marketing, 30(4), 24-50.

- O’Connor, P, (2008), ‘User-Generated Content and Travel: A Case Study on Tripadvisor.Com’, Information and Communication Technologies in Tourism 2008, Austria: P. O’Connor, W. Höpken and U. Gretzel eds., Springer Wien New York, pp. 47-58.

- O’Connor, P, (2010), ‘Managing a hotel’s image on tripadvisor’, Journal of hospitality marketing and management, p754-772.

- Ögüt H. and Tas BKO (2012), ‘The influence of internet customer reviews on the online sales and prices in hotel industry’, Service Industries Journal, 32, 197-214.

- Paquerot, M., Queffelec, A., Sueur, I. and Biot-Paquerot, G. (2011), ‘L’e-réputation ou le renforcement de la gouvernance par le marché de l’hôtellerie ?’, Revue Management et Avenir, 45, 280-296.

- Plotkina D., Munzel A. and Pallud J. (2017), ‘Don’t let them fool you. Detecting fake online reviews’, Proceedings of the Association Française du Marketing Congress, Tours, France.

- Racherla P. and Friske W. (2012), ‘Perceived “usefulness” of online consumer reviews: An exploratory investigation across three services categories’, Electronic Commerce Research and Applications, 11(6), 548-559.

- Sparks, B. A., and Browning, V. (2011), ’The impact of online reviews on hotel booking intentions and perception of trust’, Tourism Management, 32(6), 1310-1323.

- Sparks, B. A., Perkins, H. E., and Buckley, R. (2013), ‘Online travel reviews as persuasive communication: the effects of content type, source, and certification logos on consumer behavior’, Tourism Management, 39, 1-9.

- Thevenot, G, (2007), ‘Blogging as a social media’, Tourism and Hospitality Research, 7(3/4), 282–289.

- Vermeulen, I. E., and Seegers, D. (2009), ‘Tried and tested: the impact of online hotel reviews on consumer consideration’. Tourism Management, 30(1), 123-127.

- Wu P.F. (2013), ‘In search of negativity bias: An empirical study of perceived helpfulness of online reviews’, Psychology & Marketing, 30(11), 971-984.

- Xiang, Z. and Gretzel, U. (2010), ‘Role of social media in online travel information search’, Tourism Management, 31, 179–188.

- Xu, Q. (2014), ‘Should I trust him? The effects of reviewer profile characteristics on eWOM credibility’, Computers in Human Behavior, 33, 136–144.

- Ye, Q., Law, R., and Gu, B. (2009), ‘The impact of online user reviews on hotel room sales’, International Journal of Hospitality Management, 28(1), 180-182.

- Zhang Z., Ye Q., Law R. and Li Y. (2010), ‘The impact of e-word-of-mouth on the online popularity of restaurants: a comparison of consumer reviews and editor reviews’, International Journal of Hospitality Management, 29, 694-700.

Appendix

Appendix 1 – Correlation matrix by group (TripAdvisor ranking)

Notes

Independent website: this, for example, excludes the Château de Tours, as its website is attached to a page that is accessed via the town hall site. In this case, the measures relating to the browsing experience on the site are not accessible to researchers.

Non-parametric tests are not sensitive to extreme values, unlike parametric tests (e.g. comparison of means and correlation). They therefore make it possible to manage the wide distributions that might be expected to arise when comparing the number of visits to a château such as the Château de Chambord with those to another, less well-known tourist attraction.